My Patreon: https://www.patreon.com/user?u=49277905

From playlist Statistical Regression

10 Machine Learning: Ridge Regression

Lecture on ridge regression with a focus on the bias-variance trade-off and hyperparameter tuning. Follow along with the demonstration workflow using Python's scikit-learn package: https://github.com/GeostatsGuy/PythonNumericalDemos/blob/master/SubsurfaceDataAnalytics_RidgeRegression.ipynb

From playlist Machine Learning

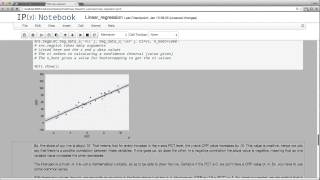

Linear regression models the relationship between paired numerical variables by fitting a straight line to the data points. We use it to see how strongly, and in which direction, one variable changes with another.

From playlist Learning medical statistics with python and Jupyter notebooks

Regularization Part 1: Ridge (L2) Regression

Ridge Regression is a neat little way to ensure you don't overfit your training data - essentially, you are desensitizing your model to the training data. It can also help you solve unsolvable equations, and if that isn't bad to the bone, I don't know what is. This StatQuest follows up on

From playlist StatQuest
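
The "desensitizing" effect mentioned in the Ridge description above can be sketched for the simplest case: one feature, an unpenalized intercept, and a penalty `lam` on the squared slope. This is only an illustration under those assumptions. The function name `ridge_slope` and the four data points are made up for the example and do not come from the video.

```python
def ridge_slope(xs, ys, lam):
    """Slope that minimizes sum((y - a - b*x)**2) + lam * b**2.

    Hypothetical helper for illustration: one feature, and the
    intercept a is not penalized.
    """
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Centered cross-product and sum of squares.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    # lam = 0 gives the ordinary least squares slope; a larger lam
    # inflates the denominator and shrinks the slope toward zero.
    return sxy / (sxx + lam)


# Made-up training data, roughly y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

slopes = {lam: ridge_slope(xs, ys, lam) for lam in (0.0, 1.0, 10.0)}
for lam, slope in slopes.items():
    print(f"lam={lam:>4}: slope={slope:.3f}")
```

As `lam` grows, the fitted line reacts less to the training points. With a single data point, `sxx` is zero, so `lam = 0` would divide by zero while any positive `lam` still returns a slope, which is one way to read the "unsolvable equations" remark.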

How to calculate Linear Regression using R. http://www.MyBookSucks.Com/R/Linear_Regression.R http://www.MyBookSucks.Com/R Playlist http://www.youtube.com/playlist?list=PLF596A4043DBEAE9C

From playlist Linear Regression.

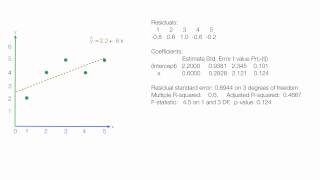

An Introduction to Linear Regression Analysis

Tutorial introducing the idea of linear regression analysis and the least squares method. Typically used in a statistics class. Playlist on Linear Regression http://www.youtube.com/course?list=ECF596A4043DBEAE9C Like us on: http://www.facebook.com/PartyMoreStudyLess Created by David Lon

From playlist Linear Regression.

Ridge vs Lasso Regression, Visualized!!!

People often ask why Lasso Regression can make parameter values equal 0, but Ridge Regression cannot. This StatQuest shows you why. NOTE: This StatQuest assumes that you are already familiar with Ridge and Lasso Regression. If not, check out the 'Quests. Ridge: https://youtu.be/Q81RR3yKn

From playlist StatQuest
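
One way to see the Ridge versus Lasso difference described above is the one-coefficient case, where both penalties have closed-form solutions. The sketch below assumes a single coefficient whose unpenalized estimate is `z`, with the losses `0.5*(b - z)**2 + 0.5*lam*b**2` for Ridge and `0.5*(b - z)**2 + lam*abs(b)` for Lasso. The function names are hypothetical and the value `z = 0.8` is arbitrary.

```python
def ridge_shrink(z, lam):
    """Minimizer of 0.5*(b - z)**2 + 0.5*lam*b**2: a proportional rescaling."""
    return z / (1.0 + lam)


def lasso_shrink(z, lam):
    """Minimizer of 0.5*(b - z)**2 + lam*abs(b): soft-thresholding."""
    magnitude = max(abs(z) - lam, 0.0)
    return magnitude if z >= 0 else -magnitude


z = 0.8  # arbitrary unpenalized estimate, for illustration only
for lam in (0.0, 0.5, 1.0, 5.0):
    print(f"lam={lam}: ridge={ridge_shrink(z, lam):.4f}, "
          f"lasso={lasso_shrink(z, lam):.4f}")
```

Ridge divides the estimate by `1 + lam`, so it gets smaller but never reaches zero for a finite `lam`. Lasso subtracts `lam` from the magnitude and clips at zero, so once `lam` reaches `abs(z)` the coefficient is exactly 0.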

Data Science - Part XII - Ridge Regression, LASSO, and Elastic Nets

For downloadable versions of these lectures, please go to the following link: http://www.slideshare.net/DerekKane/presentations https://github.com/DerekKane/YouTube-Tutorials This lecture provides an overview of some modern regression techniques including a discussion of the bias varianc

From playlist Data Science

Regularization Part 2: Lasso (L1) Regression

Lasso Regression is super similar to Ridge Regression, but there is one big, huge difference between the two. In this video, I start by talking about all of the similarities, and then show you the cool thing that Lasso Regression can do that Ridge Regression can't. NOTE: This StatQuest fo

From playlist StatQuest

Regularization Part 3: Elastic Net Regression

Elastic-Net Regression combines Lasso Regression with Ridge Regression to give you the best of both worlds. It works well when there are lots of useless variables that need to be removed from the equation and it works well when there are lots of useful variables that need to be retained

From playlist StatQuest
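
The combination described in the Elastic-Net entry above can be written as a weighted sum of the Lasso (L1) and Ridge (L2) penalties. The sketch below uses one common parametrization with an overall strength `lam` and a mixing weight `l1_ratio`. Both names are assumptions for this example, and libraries differ in details such as an extra factor of 1/2 on the L2 term.

```python
def elastic_net_penalty(coefs, lam, l1_ratio):
    """Penalty term only (not a fitted model), hypothetical helper.

    l1_ratio = 1.0 reduces to the Lasso penalty,
    l1_ratio = 0.0 reduces to the Ridge penalty.
    """
    l1 = sum(abs(c) for c in coefs)   # Lasso part: sum of absolute values
    l2 = sum(c * c for c in coefs)    # Ridge part: sum of squares
    return lam * (l1_ratio * l1 + (1.0 - l1_ratio) * l2)


coefs = [1.0, -2.0, 0.0]  # made-up coefficients
print(elastic_net_penalty(coefs, lam=2.0, l1_ratio=1.0))  # Lasso only
print(elastic_net_penalty(coefs, lam=2.0, l1_ratio=0.0))  # Ridge only
print(elastic_net_penalty(coefs, lam=2.0, l1_ratio=0.5))  # equal mix
```

In practice both `lam` and the mixing weight are typically chosen by cross-validation.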

Ridge, Lasso and Elastic-Net Regression in R

The code in this video can be found on the StatQuest GitHub: https://github.com/StatQuest/ridge_lasso_elastic_net_demo/blob/master/ridge_lass_elastic_net_demo.R This video assumes you already know about Ridge, Lasso and Elastic-Net Regression, if not, here are the links to the Quests..

From playlist Statistics and Machine Learning in R

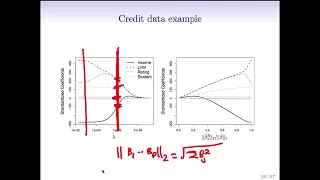

Statistical Learning: 6.7 The Lasso

Statistical Learning, featuring Deep Learning, Survival Analysis and Multiple Testing. You can take Statistical Learning as an online course on EdX, and you can choose a verified path to get a certificate for its completion: https://www.edx.org/course/statistical-learning

From playlist Statistical Learning

Statistical Learning: 6.6 Shrinkage methods and ridge regression

Statistical Learning, featuring Deep Learning, Survival Analysis and Multiple Testing. You can take Statistical Learning as an online course on EdX, and you can choose a verified path to get a certificate for its completion: https://www.edx.org/course/statistical-learning

From playlist Statistical Learning

Regularization In Machine Learning | Regularization Example | Machine Learning Tutorial |Simplilearn

🔥Artificial Intelligence Engineer Program (Discount Coupon: YTBE15): https://www.simplilearn.com/masters-in-artificial-intelligence?utm_campaign=RegularizationinMachineLearning&utm_medium=Descriptionff&utm_source=youtube 🔥Professional Certificate Program In AI And Machine Learning: https:/