Maximum and Minimum Values (Closed interval method)

A review of techniques for finding local and absolute extrema, including an application of the closed interval method.

From playlist 241Fall13Ex3

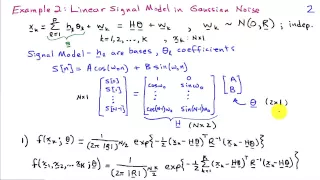

Maximum Likelihood Estimation Examples

Visit http://AllSignalProcessing.com for more signal processing content, including concept/screenshot files, quizzes, MATLAB and data files. Three examples of applying the maximum likelihood criterion to find an estimator: 1) Mean and variance of an iid Gaussian, 2) Linear signal model in

From playlist Estimation and Detection Theory
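
For the first of those examples, the ML estimates for an iid Gaussian sample have a closed form: the sample mean, and the average squared deviation from it. The variance estimate divides by N rather than N − 1, so it is biased. A minimal Python sketch, assuming the data is a plain list of floats; the names `gaussian_mle` and `gaussian_log_likelihood` are hypothetical:

```python
import math


def gaussian_mle(samples):
    """Closed-form ML estimates (mean, variance) for an iid Gaussian sample."""
    n = len(samples)
    if n == 0:
        raise ValueError("need at least one sample")
    mu_hat = sum(samples) / n
    # The ML variance divides by n (not n - 1), so it is biased low.
    var_hat = sum((x - mu_hat) ** 2 for x in samples) / n
    return mu_hat, var_hat


def gaussian_log_likelihood(samples, mu, var):
    """Log-likelihood of an iid Gaussian sample for a given mean and variance."""
    n = len(samples)
    sse = sum((x - mu) ** 2 for x in samples)
    return -0.5 * n * math.log(2 * math.pi * var) - sse / (2 * var)
```

For example, `gaussian_mle([2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0])` returns `(5.0, 4.0)`, and moving either value away from that point lowers `gaussian_log_likelihood` for the same data.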

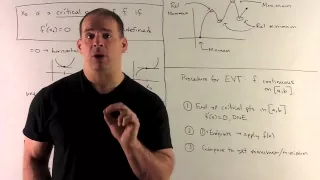

Extreme Value Theorem Using Critical Points

Calculus: The Extreme Value Theorem for a continuous function f(x) on a closed interval [a, b] is given. Relative maximum and minimum values are defined, and a procedure is given for finding maximums and minimums. Examples given are f(x) = x^2 - 4x on the interval [-1, 3], and f(x) =

From playlist Calculus Pt 1: Limits and Derivatives
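
Applied to the first example, the closed interval method compares f at the critical points inside the interval with f at the two endpoints:

```latex
\begin{aligned}
f(x) &= x^2 - 4x, \quad x \in [-1, 3] \\
f'(x) &= 2x - 4 = 0 \;\Rightarrow\; x = 2 \in [-1, 3] \\
f(-1) &= 5, \quad f(2) = -4, \quad f(3) = -3
\end{aligned}
```

So the absolute maximum is f(−1) = 5 and the absolute minimum is f(2) = −4.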

Extreme Value Statistics: Peak over Threshold methods

From playlist Extreme Value Statistics

What is the max and min of a horizontal line on a closed interval

👉 Learn how to find the extreme values of a function using the extreme value theorem. The extreme values of a function are the largest and smallest values it attains, that is, its maximum and minimum. A theorem which guarantees the existence of the maximum and minimum points

From playlist Extreme Value Theorem of Functions

Maximum Likelihood For the Normal Distribution, step-by-step!!!

Calculating the maximum likelihood estimates for the normal distribution shows you why we use the mean and standard deviation to define the shape of the curve. NOTE: This is another follow up to the StatQuests on Probability vs Likelihood https://youtu.be/pYxNSUDSFH4 and Maximum Likelihood: h

From playlist StatQuest

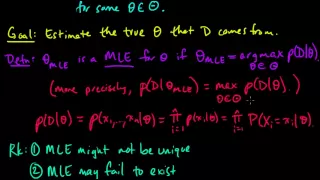

(ML 4.1) Maximum Likelihood Estimation (MLE) (part 1)

Definition of maximum likelihood estimates (MLEs), and a discussion of pros/cons. A playlist of these Machine Learning videos is available here: http://www.youtube.com/my_playlists?p=D0F06AA0D2E8FFBA

From playlist Machine Learning

EXTRA MATH 11C: The LS estimates are also Maximum Likelihood Estimates

Lecture with Per B. Brockhoff. Chapters:

From playlist DTU: Introduction to Statistics | CosmoLearning.org
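
The reasoning behind that title, in brief: if y_i = β0 + β1·x_i + ε_i with iid N(0, σ²) errors, the log-likelihood is −(n/2)·log(2πσ²) − SSE(β)/(2σ²), so for any fixed σ² the β that maximizes it is exactly the β that minimizes the sum of squared errors. A minimal Python sketch for simple linear regression, assuming a straight-line model; the names `least_squares_line` and `line_log_likelihood` are hypothetical:

```python
import math


def least_squares_line(xs, ys):
    """Closed-form least-squares intercept and slope for y = b0 + b1 * x."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    b1 = sxy / sxx
    b0 = y_bar - b1 * x_bar
    return b0, b1


def line_log_likelihood(xs, ys, b0, b1, sigma2):
    """Gaussian log-likelihood of the data under y = b0 + b1 * x + noise."""
    n = len(xs)
    sse = sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))
    return -0.5 * n * math.log(2 * math.pi * sigma2) - sse / (2 * sigma2)
```

Because σ² only scales the SSE term, perturbing the least-squares intercept or slope lowers the log-likelihood for any fixed σ² > 0.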

Lecture 14.5 — RBMs are infinite sigmoid belief nets [Neural Networks for Machine Learning]

Lecture from the course Neural Networks for Machine Learning, as taught by Geoffrey Hinton (University of Toronto) on Coursera in 2012. Link to the course (login required): https://class.coursera.org/neuralnets-2012-001

From playlist [Coursera] Neural Networks for Machine Learning — Geoffrey Hinton

Lecture 14E : RBMs are Infinite Sigmoid Belief Nets

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 14E : RBMs are Infinite Sigmoid Belief Nets

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

DeepMind x UCL | Deep Learning Lectures | 11/12 | Modern Latent Variable Models

This lecture, by DeepMind Research Scientist Andriy Mnih, explores latent variable models, a powerful and flexible framework for generative modelling. After introducing this framework along with the concept of inference, which is central to it, Andriy focuses on two types of modern latent

From playlist Learning resources

Lecture 14/16 : Deep neural nets with generative pre-training

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] 14A Learning layers of features by stacking RBMs 14B Discriminative fine-tuning for DBNs 14C What happens during discriminative fine-tuning? 14D Modeling real-valued data with an RBM 14E RBMs are Infinite Sigmoid Beli

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

Lecture 15F : Shallow autoencoders for pre-training

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 15F : Shallow autoencoders for pre-training

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

Lecture 15.6 — Shallow autoencoders for pre-training [Neural Networks for Machine Learning]

Lecture from the course Neural Networks for Machine Learning, as taught by Geoffrey Hinton (University of Toronto) on Coursera in 2012. Link to the course (login required): https://class.coursera.org/neuralnets-2012-001

From playlist [Coursera] Neural Networks for Machine Learning — Geoffrey Hinton

Statistical modeling and missing data - Rod Little

Virtual Workshop on Missing Data Challenges in Computation, Statistics and Applications. Topic: Statistical modeling and missing data. Speaker: Rod Little. Date: September 8, 2020. For more videos, please visit http://video.ias.edu

From playlist Mathematics

Find the max and min from a quadratic on a closed interval

👉 Learn how to find the extreme values of a function using the extreme value theorem. The extreme values of a function are the largest and smallest values it attains, that is, its maximum and minimum. A theorem which guarantees the existence of the maximum and minimum points

From playlist Extreme Value Theorem of Functions
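
For a quadratic f(x) = ax² + bx + c, the only critical point is the vertex x = −b/(2a), so the closed interval method reduces to comparing f at the two endpoints and, if it lies inside the interval, at the vertex. A minimal Python sketch, assuming a ≠ 0; the function name `quadratic_extrema` is hypothetical:

```python
def quadratic_extrema(a, b, c, lo, hi):
    """Return ((x_min, f_min), (x_max, f_max)) for a*x^2 + b*x + c on [lo, hi]."""
    if a == 0:
        raise ValueError("a must be nonzero for a quadratic")
    if lo > hi:
        raise ValueError("need lo <= hi")

    def f(x):
        return a * x * x + b * x + c

    # Candidates: both endpoints, plus the vertex if it lies in the interval.
    candidates = [lo, hi]
    vertex = -b / (2 * a)
    if lo <= vertex <= hi:
        candidates.append(vertex)
    points = [(x, f(x)) for x in candidates]
    # The extreme value theorem guarantees the extremes are among these points.
    return min(points, key=lambda p: p[1]), max(points, key=lambda p: p[1])
```

With a = 1, b = −4, c = 0 on [−1, 3] it returns the minimum (2.0, −4.0) and the maximum (−1, 5), matching the hand calculation for f(x) = x² − 4x.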

Howard Bondell - Bayesian inference using estimating equations via empirical likelihood

Professor Howard Bondell (University of Melbourne) presents "Do you have a moment? Bayesian inference using estimating equations via empirical likelihood", 22 October 2021.

From playlist Statistics Across Campuses