Determine the Product of a Matrix and Vector using the Diagonalization of the Matrix

This video explains how to use the diagonalization of a 2 by 2 matrix to find the product of a matrix and a vector given matrix P and D.

From playlist The Diagonalization of Matrices
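
As a rough illustration of the idea in this video, the product Ax can be computed from P and D alone, since A = P D P^{-1}. The sketch below is a minimal pure-Python version for the 2 by 2 case; the function names and the sample values of P, D, and x are hypothetical and are not taken from the video.

```python
from fractions import Fraction

def inverse_2x2(m):
    """Invert a 2x2 matrix given as [[a, b], [c, d]] using exact fractions."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("P must be invertible")
    return [[Fraction(d) / det, Fraction(-b) / det],
            [Fraction(-c) / det, Fraction(a) / det]]

def mat_vec(m, v):
    """Multiply a matrix (list of rows) by a vector (list of numbers)."""
    return [sum(m[i][j] * v[j] for j in range(len(v))) for i in range(len(m))]

def product_via_diagonalization(P, D, x):
    """Compute A x where A = P D P^{-1}, without forming A explicitly."""
    y = mat_vec(inverse_2x2(P), x)  # coordinates of x in the eigenvector basis
    z = mat_vec(D, y)               # scale each coordinate by its eigenvalue
    return mat_vec(P, z)            # convert back to standard coordinates

# Hypothetical example: the columns of P are eigenvectors, D holds eigenvalues 3 and 1.
P = [[1, 1], [1, -1]]
D = [[3, 0], [0, 1]]
x = [2, 0]
result = product_via_diagonalization(P, D, x)
print(result)
```

Here A works out to [[2, 1], [1, 2]], so the result can be checked by multiplying A and x directly.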

The Diagonalization of Matrices

This video explains the process of diagonalization of a matrix.

From playlist The Diagonalization of Matrices

In this very easy and short tutorial I explain the concept of the transpose of matrices, where we exchange rows for columns. The matrices have some properties that you should be aware of. These include how to take the transpose of the product of matrices and in the transpose of the invers

From playlist Introducing linear algebra

Linear Algebra for Computer Scientists. 12. Introducing the Matrix

This computer science video is one of a series of lessons about linear algebra for computer scientists. This video introduces the concept of a matrix. A matrix is a rectangular or square, two-dimensional array of numbers, symbols, or expressions. A matrix is also classed as a second order

From playlist Linear Algebra for Computer Scientists

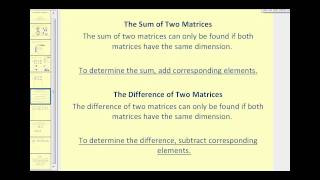

How do we add matrices? A matrix is an abstract object that exists in its own right, and in this sense, it is similar to a natural number, or a complex number, or even a polynomial. Each element in a matrix has an address by way of the row in which it is and the column in which it is. Y

From playlist Introducing linear algebra

We have already looked at the column view of a matrix. In this video lecture I want to expand on this topic to show you that each matrix has a column space. If a matrix is part of a linear system then a linear combination of the columns creates a column space. The vector created by the

From playlist Introducing linear algebra

Matrix choose a matrix. Calculating the number of matrix combinations of a matrix, using techniques from linear algebra like diagonalization, eigenvalues, eigenvectors. Special appearance by simultaneous diagonalizability and commuting matrices. In the end, I mention the general case using

From playlist Eigenvalues

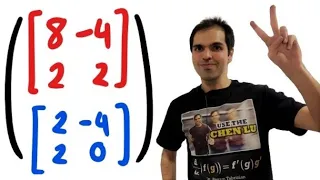

Matrix Addition, Subtraction, and Scalar Multiplication

This video shows how to add, subtract and perform scalar multiplication with matrices. http://mathispower4u.yolasite.com/ http://mathispower4u.wordpress.com/

From playlist Introduction to Matrices and Matrix Operations
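
The three operations named in this video are all entrywise, which a short sketch can make concrete. The helper names and the sample matrices below are hypothetical and are not taken from the video.

```python
def add(a, b):
    """Add two matrices (lists of rows) entry by entry."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("matrices must have the same dimensions")
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def scale(c, a):
    """Multiply every entry of matrix a by the scalar c."""
    return [[c * x for x in row] for row in a]

def subtract(a, b):
    """Subtract b from a, expressed as a + (-1) * b."""
    return add(a, scale(-1, b))

# Hypothetical sample matrices
A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(add(A, B))
print(subtract(A, B))
print(scale(2, A))
```

Writing subtraction as addition of a scaled matrix mirrors the usual definition and keeps the dimension check in one place.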

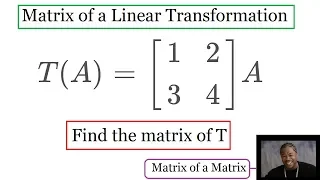

Calculating the matrix of a linear transformation with respect to a basis B. Here is the case where the input basis is the same as the output basis. Check out my Vector Space playlist: https://www.youtube.com/watch?v=mU7DHh6KNzI&list=PLJb1qAQIrmmClZt_Jr192Dc_5I2J3vtYB Subscribe to my ch

From playlist Linear Transformations

Stanford EE104: Introduction to Machine Learning | 2020 | Lecture 6 - empirical risk minimization

Professor Sanjay Lall Electrical Engineering To follow along with the course schedule and syllabus, visit: http://ee104.stanford.edu To view all online courses and programs offered by Stanford, visit: https://online.stanford.edu/ 0:00 Introduction 0:26 Parametrized predictors 3:09 Tra

From playlist Stanford EE104: Introduction to Machine Learning Full Course

Nadav Cohen: "Implicit Regularization in Deep Learning: Lessons Learned from Matrix & Tensor Fac..."

Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021 Workshop I: Tensor Methods and their Applications in the Physical and Data Sciences "Implicit Regularization in Deep Learning: Lessons Learned from Matrix and Tensor Factorization" Nadav Cohen - Tel Aviv Unive

From playlist Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021

Lecture 0310 Regularized linear regression

Machine Learning by Andrew Ng [Coursera] 03-02 Regularization

From playlist Machine Learning by Professor Andrew Ng

Markov Chains, Part 4 (Part 5: http://www.youtube.com/watch?v=-kwnnNSGFMc). Here we begin looking at regular matrices and regular Markov chains. I examine 3 mat

From playlist All Videos - Part 1

Understanding the inductive bias due to dropout - Raman Arora

Workshop on Theory of Deep Learning: Where next? Topic: Understanding the inductive bias due to dropout Speaker: Raman Arora Affiliation: Johns Hopkins University; Member, School of Mathematics Date: October 17, 2019 For more videos, please visit http://video.ias.edu

From playlist Mathematics

Prob & Stats - Markov Chains (9 of 38) What is a Regular Matrix?

Visit http://ilectureonline.com for more math and science lectures! In this video I will explain what a regular matrix is. Next video in the Markov Chains series: http://youtu.be/loBUEME5chQ

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes
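
For orientation, the standard definition is that a transition matrix is regular when some power of it has all strictly positive entries. The sketch below tests this by brute force; it assumes a square matrix given as a list of rows, the function names are hypothetical, and the cutoff of (n - 1)^2 + 1 powers relies on Wielandt's bound for primitive matrices rather than on anything stated in the video.

```python
def mat_mul(a, b):
    """Multiply two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def is_regular(T):
    """Return True if some power of T has only strictly positive entries."""
    n = len(T)
    power = T
    # Check T^1, T^2, ..., T^((n-1)^2 + 1); by Wielandt's bound this is enough.
    for _ in range((n - 1) ** 2 + 1):
        if all(entry > 0 for row in power for entry in row):
            return True
        power = mat_mul(power, T)
    return False

# Hypothetical examples
print(is_regular([[0, 1], [0.5, 0.5]]))  # squaring removes the zero entry
print(is_regular([[0, 1], [1, 0]]))      # powers alternate and always keep zeros
```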

[WeightWatcher] Self-Regularization in Deep Neural Networks: Evidence from Random Matrix Theory

For slides and more information on the paper, visit https://aisc.ai.science/events/2019-11-06 Discussion lead & author: Charles Martin Abstract: Random Matrix Theory (RMT) is applied to analyze weight matrices of Deep Neural Networks (DNNs), including both production quality, pre-train

From playlist Math and Foundations

Marina Poulet, Université Claude Bernard Lyon 1

December 9, Marina Poulet, Université Claude Bernard Lyon 1 Zariski-dense subgroups of Galois groups for Mahler equations

From playlist Fall 2021 Online Kolchin Seminar in Differential Algebra

4.4.4 Matrix-matrix multiplication: Special Shapes

4.4.1 Matrix-matrix multiplication: Motivation

From playlist LAFF - Week 4

Nijenhuis geometry for ECRs: Pre-recorded Lecture 2 Part A

Pre-recorded Lecture 2 Part A: Nijenhuis geometry for ECRs Date: 9 February 2022 Lecture slides: https://mathematical-research-institute.sydney.edu.au/wp-content/uploads/2022/02/Prerecorded_Lecture2.pdf

From playlist MATRIX-SMRI Symposium: Nijenhuis Geometry and integrable systems