Reproducing kernel Hilbert space

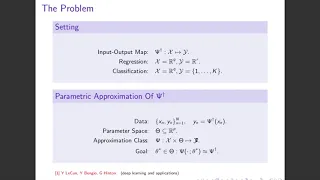

In functional analysis (a branch of mathematics), a reproducing kernel Hilbert space (RKHS) is a Hilbert space of functions in which point evaluation is a continuous linear functional. Roughly speaking, this means that if two functions $f$ and $g$ in the RKHS are close in norm, i.e., $\|f-g\|$ is small, then $f$ and $g$ are also pointwise close, i.e., $|f(x)-g(x)|$ is small for all $x$. The converse need not be true.

Constructing a Hilbert space of functions that is not an RKHS is not entirely straightforward, although some examples have been found. Note that $L^2$ spaces are not Hilbert spaces of functions (and hence not RKHSs), but rather Hilbert spaces of equivalence classes of functions (for example, the functions $f$ and $g$ defined by $f(x)=0$ and $g(x)=\mathbf{1}_{\mathbb{Q}}(x)$, which differ only on a set of measure zero, are equivalent in $L^2$). However, there are RKHSs in which the norm is an $L^2$-norm, such as the space of band-limited functions (see the example below).

An RKHS is associated with a kernel that reproduces every function in the space, in the sense that for every $x$ in the set on which the functions are defined, "evaluation at $x$" can be performed by taking an inner product with a function determined by the kernel. Such a reproducing kernel exists if and only if every evaluation functional is continuous.

The reproducing kernel was first introduced in the 1907 work of Stanisław Zaremba concerning boundary value problems for harmonic and biharmonic functions. James Mercer simultaneously examined functions which satisfy the reproducing property in the theory of integral equations. The idea of the reproducing kernel remained untouched for nearly twenty years until it appeared in the dissertations of Gábor Szegő, Stefan Bergman, and Salomon Bochner. The subject was eventually systematically developed in the early 1950s by Nachman Aronszajn and Stefan Bergman. These spaces have wide applications, including complex analysis, harmonic analysis, and quantum mechanics.

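
As a rough numerical illustration of the pointwise closeness described above, the following Python sketch works in the span of finitely many Gaussian kernel sections. The Gaussian kernel, the helper names (`gaussian_kernel`, `evaluate`, `rkhs_norm`, `difference`, `pointwise_gap_and_bound`) and the sample coefficients are assumptions chosen for illustration. For $h = f - g$, the reproducing property $h(x) = \langle h, K(x,\cdot)\rangle$ combined with the Cauchy–Schwarz inequality gives $|f(x)-g(x)| \le \sqrt{K(x,x)}\,\|f-g\|$, which the sketch evaluates at a few points.

```python
import math

def gaussian_kernel(x, y, sigma=1.0):
    """Gaussian kernel K(x, y) = exp(-(x - y)^2 / (2 sigma^2)) on the real line."""
    return math.exp(-((x - y) ** 2) / (2.0 * sigma ** 2))

def evaluate(centers, coeffs, x, kernel=gaussian_kernel):
    """Evaluate h(x) for h = sum_i coeffs[i] * K(centers[i], .).

    By the reproducing property, this sum equals the inner product <h, K(x, .)>.
    """
    return sum(a * kernel(c, x) for a, c in zip(coeffs, centers))

def rkhs_norm(centers, coeffs, kernel=gaussian_kernel):
    """RKHS norm of h: sqrt(sum_{i,j} a_i a_j K(c_i, c_j))."""
    total = sum(
        ai * aj * kernel(ci, cj)
        for ai, ci in zip(coeffs, centers)
        for aj, cj in zip(coeffs, centers)
    )
    # Guard against tiny negative values caused by floating-point rounding.
    return math.sqrt(max(total, 0.0))

def difference(f, g):
    """Return (centers, coeffs) representing f - g for f, g given as (centers, coeffs)."""
    centers = list(f[0]) + list(g[0])
    coeffs = list(f[1]) + [-b for b in g[1]]
    return centers, coeffs

def pointwise_gap_and_bound(f, g, x, kernel=gaussian_kernel):
    """Return (|f(x) - g(x)|, sqrt(K(x, x)) * ||f - g||)."""
    centers, coeffs = difference(f, g)
    gap = abs(evaluate(centers, coeffs, x, kernel))
    bound = math.sqrt(kernel(x, x)) * rkhs_norm(centers, coeffs, kernel)
    return gap, bound

# Hypothetical example functions, each a finite kernel expansion (centers, coefficients).
f = ([-1.0, 0.0, 2.0], [0.5, -1.2, 0.8])
g = ([-1.0, 0.5], [0.4, -1.0])
for x in (-2.0, 0.3, 1.7):
    gap, bound = pointwise_gap_and_bound(f, g, x)
    print(f"x={x:+.1f}  |f(x)-g(x)|={gap:.4f}  bound={bound:.4f}")
```

For the Gaussian kernel, $K(x,x)=1$, so the bound is the same at every point; for other kernels the constant may depend on $x$.
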
Reproducing kernel Hilbert spaces are particularly important in statistical learning theory because of the celebrated representer theorem, which states that every function in an RKHS that minimizes an empirical risk functional can be written as a linear combination of the kernel function evaluated at the training points. This is a practically useful result, as it effectively reduces the empirical risk minimization problem from an infinite-dimensional to a finite-dimensional optimization problem.

For ease of understanding, we provide the framework for real-valued Hilbert spaces. The theory can be easily extended to spaces of complex-valued functions and hence includes the many important examples of reproducing kernel Hilbert spaces that are spaces of analytic functions. (Wikipedia).
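
To make the finite-dimensional reduction concrete, here is a minimal sketch of kernel ridge regression, one standard instance of regularized empirical risk minimization. It assumes squared loss, a penalty $\lambda\|f\|^2$ with $\lambda > 0$, and a Gaussian kernel; the function names and sample data are hypothetical. Under these assumptions the minimizer has the form $f = \sum_i \alpha_i K(x_i,\cdot)$, and the coefficients solve the $n \times n$ linear system $(K + n\lambda I)\alpha = y$.

```python
import math

def gaussian_kernel(x, y, sigma=1.0):
    """Gaussian kernel K(x, y) = exp(-(x - y)^2 / (2 sigma^2)) on the real line."""
    return math.exp(-((x - y) ** 2) / (2.0 * sigma ** 2))

def solve_linear_system(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def fit_kernel_ridge(xs, ys, lam=0.1, sigma=1.0):
    """Return alpha solving (K + n * lam * I) alpha = y for the Gram matrix K."""
    n = len(xs)
    A = [
        [gaussian_kernel(xi, xj, sigma) + (n * lam if i == j else 0.0)
         for j, xj in enumerate(xs)]
        for i, xi in enumerate(xs)
    ]
    return solve_linear_system(A, list(ys))

def predict(xs, alpha, x, sigma=1.0):
    """Evaluate f(x) = sum_i alpha[i] * K(xs[i], x)."""
    return sum(a * gaussian_kernel(xi, x, sigma) for a, xi in zip(alpha, xs))

# Hypothetical training data: samples of sin on [0, 2].
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [math.sin(x) for x in xs]
alpha = fit_kernel_ridge(xs, ys, lam=0.01)
print("coefficients:", alpha)
print("prediction at 0.75:", predict(xs, alpha, 0.75))
```

The search over an infinite-dimensional function space is replaced by solving for one coefficient per training point. The hand-written solver is only there to keep the sketch dependency-free; a numerical linear algebra library would normally be used instead.
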