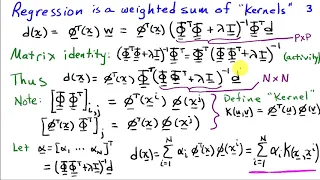

A dynamic granular layering of mixed kernel empirical distribution

From playlist Prob and Stats

Kernel Recipes 2022 - Checking your work: validating the kernel by building and testing in CI

The Linux kernel is one of the most complex pieces of software ever written. Being in ring 0, bugs in the kernel are a big problem, so having confidence in the correctness and robustness of the kernel is incredibly important. This is difficult enough for a single version and configuration

From playlist Kernel Recipes 2022

Custom Install of SUSE Linux Enterprise 11

More videos like this at http://www.theurbanpenguin.com. In this video we look at: installing from the network, adding in additional products, and an LVM partition for the root file system.

From playlist Linux

Kernel Recipes 2014 : kGraft: Live Patching of the Linux Kernel

The talk introduces the need for live kernel patching. Further, it explains what kGraft is, how it works, what its limitations are, and our plans for the implementation in the future. The presentation also includes a live demo, if the constellation of the stars allows.

From playlist Kernel Recipes 2014

Kernel Recipes 2018 - Overview of SD/eMMC... - Grégory Clément

SD and eMMC devices are widely present on Linux systems and have become the primary storage medium on some products. One of the key features for storage is the speed of the bus accessing the data. Since the introduction of the original “default” (DS) and “high speed” (HS) modes, the SD card sta

From playlist Kernel Recipes 2018

Kernel Recipes 2019 - What To Do When Your Device Depends on Another One

Contemporary computer systems are quite complicated. There may be multiple connections between various components in them and the components may depend on each other in various ways. At the same time, however, in many cases it is practical to use a separate device driver for each sufficien

From playlist Kernel Recipes 2019

Kernel Recipes 2018 - Live (Kernel) Patching: status quo and status futurus - Jiri Kosina

The purpose of this talk is to provide a short overview of the current live patching facility inside the Linux kernel (with a brief historical excursion), describe the features it currently provides, and, most importantly, the things that still need to be implemented or designed.

From playlist Kernel Recipes 2018

Kernel Recipes 2014 - Quick state of the art of clang

Having worked on clang for a while now, I will review my work on the Debian rebuild and comment on the results.

From playlist Kernel Recipes 2014

Unsupervised state embedding and aggregation towards scalable reinforcement learning - Mengdi Wang

Workshop on New Directions in Reinforcement Learning and Control Topic: Unsupervised state embedding and aggregation towards scalable reinforcement learning Speaker: Mengdi Wang Affiliation: Princeton University Date: November 7, 2019 For more videos, please visit http://video.ias.edu

From playlist Mathematics

Mathieu Carrière (2/19/19): On the metric distortion of embedding persistence diagrams into RKHS

Title: On the metric distortion of embedding persistence diagrams into reproducing kernel Hilbert spaces Abstract: Persistence Diagrams (PDs) are important feature descriptors in Topological Data Analysis. Due to the nonlinearity of the space of PDs equipped with their diagram distances,

From playlist AATRN 2019

Embedded Recipes 2017 - Developing an embedded video application... - Christian Charreyre

Embedded video is an increasingly common subject in embedded Linux development. Even though ARM SoCs provide great resources for video processing with dedicated IPU, GPU …, a dual approach based on FPGA + general purpose processor is an interesting alternative. In this presentation Christian

From playlist Embedded Recipes 2017

Boumediene Hamzi: "Machine Learning and Dynamical Systems meet in Reproducing Kernel Hilbert Spaces"

Machine Learning for Physics and the Physics of Learning 2019 Workshop III: Validation and Guarantees in Learning Physical Models: from Patterns to Governing Equations to Laws of Nature "Machine Learning and Dynamical Systems meet in Reproducing Kernel Hilbert Spaces" Boumediene Hamzi - I

From playlist Machine Learning for Physics and the Physics of Learning 2019

Stanford Seminar - Distributional Representations and Scalable Simulations for Real-to-Sim-to-Real

Distributional Representations and Scalable Simulations for Real-to-Sim-to-Real with Deformables Rika Antonova April 22, 2022 This talk will give an overview of: - the challenges with representing deformable objects - a distributional approach to state representation for deformables - Re

From playlist Stanford AA289 - Robotics and Autonomous Systems Seminar

Kaggle Live Coding: Identifying the most important words in a cluster | Kaggle

This week we'll continue with our clustering project and look into how to determine which words are most important in each cluster. Saliency script: https://www.kaggle.com/rebeccaturner/get-frequency-saliency-of-kaggle-lexicon Notebook: https://www.kaggle.com/rtatman/forum-post-embeddings

From playlist Kaggle Live Coding | Kaggle

Embedded Recipes 2017 - Long-Term Maintenance for Embedded Systems for 10+ Years - Marc Kleine-Budde

The technical side of how to build embedded Linux systems is solved by now: Take the kernel, a build system, add some patches, integrate your application and you’re done! In reality though, most of the embedded systems we build are connected to the Internet and run most of the same software

From playlist Embedded Recipes 2017

Successes and Challenges in Neural Models for Speech and Language - Michael Collins

Deep Learning: Alchemy or Science? Topic: Successes and Challenges in Neural Models for Speech and Language Speaker: Michael Collins Affiliation: Google Research/Columbia University Date: February 22, 2019 For more videos, please visit http://video.ias.edu

From playlist Mathematics

Embedded Recipes 2018 - Using yocto to generate container images for yocto - Jérémy Rosen

Containerisation is a new player in the embedded world. Provisioning and rapid deployment don’t really make sense for embedded devices, but the extra security that container partitioning brings to the table is quickly becoming a “must have” for every embedded device. However, the embe

From playlist Embedded Recipes 2018

Kernel Recipes 2015 - Porting Linux to a new processor architecture - by Joël Porquet

Getting the Linux kernel running on a new processor architecture is a difficult process. Worse still, there is not much documentation available describing the porting process. After spending countless hours becoming almost fluent in many of the supported architectures, I discovered that a

From playlist Kernel Recipes 2015

Speaker: Felix Fietkau OpenWrt is a Linux distribution for embedded Wireless LAN routers. In this lecture I'm going to introduce OpenWrt and show you how you can use and customize it for your own projects. For more information visit: http://bit.ly/22c3_information To download the video

From playlist 22C3: Private Investigations