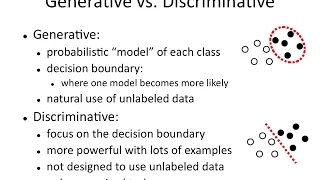

generative model vs discriminative model

Understanding the difference between generative and discriminative models with a simple example. All my machine learning YouTube videos: https://www.youtube.com/playlist?list=PLVNY1HnUlO26x597OgAN8TCgGTiE-38D6

From playlist Machine Learning
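The entry above only names the generative/discriminative distinction. The following is a minimal sketch of our own, not the video's example, and it assumes a hypothetical 1-D, two-class toy dataset. The generative side fits a prior p(y) and a Gaussian class-conditional p(x | y) per class, classifies with Bayes' rule, and can also sample new (x, y) pairs. The discriminative side is logistic regression trained by batch gradient descent, which models p(y | x) directly and says nothing about how x is distributed. All function names, hyperparameters, and data are illustrative.

```python
import math
import random


def fit_generative(xs, ys):
    """Estimate p(y) and a Gaussian p(x | y) for each class (hypothetical helper)."""
    params = {}
    for label in sorted(set(ys)):
        pts = [x for x, y in zip(xs, ys) if y == label]
        mean = sum(pts) / len(pts)
        var = sum((p - mean) ** 2 for p in pts) / len(pts) + 1e-9  # avoid zero variance
        params[label] = (len(pts) / len(xs), mean, var)  # (prior, mean, variance)
    return params


def gaussian_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def predict_generative(params, x):
    # Bayes' rule: p(y | x) is proportional to p(x | y) * p(y); pick the largest.
    return max(params, key=lambda c: params[c][0] * gaussian_pdf(x, params[c][1], params[c][2]))


def sample_generative(params, rng):
    # Draw y ~ p(y), then x ~ p(x | y): only possible because we modeled x.
    labels = list(params)
    y = rng.choices(labels, weights=[params[c][0] for c in labels])[0]
    _, mean, var = params[y]
    return rng.gauss(mean, math.sqrt(var)), y


def fit_discriminative(xs, ys, lr=0.1, epochs=2000):
    """Logistic regression for p(y = 1 | x), fit by batch gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        gw = gb = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            gw += (p - y) * x  # gradient of cross-entropy w.r.t. w
            gb += p - y        # gradient of cross-entropy w.r.t. b
        w -= lr * gw / len(xs)
        b -= lr * gb / len(xs)
    return w, b


def predict_discriminative(wb, x):
    w, b = wb
    return 1 if w * x + b > 0 else 0


# Hypothetical toy data: class 0 on the left, class 1 on the right.
xs = [-2.0, -1.5, -1.0, -0.5, 1.0, 1.5, 2.0, 2.5]
ys = [0, 0, 0, 0, 1, 1, 1, 1]
gen = fit_generative(xs, ys)
disc = fit_discriminative(xs, ys)
print(predict_generative(gen, -1.8), predict_discriminative(disc, -1.8))
print(sample_generative(gen, random.Random(0)))
```

Both models can classify, but only the generative one can run `sample_generative`, because only it represents the joint p(x, y). Logistic regression makes fewer assumptions about x, at the cost of not being able to produce data.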

Set Distribution Networks: a Generative Model for Sets of Images (Paper Explained)

We've become very good at making generative models for images and classes of images, but not yet for sets of images, especially when the number of sets is unknown and the data can contain sets that were never encountered during training. This paper builds a probabilistic framework and a practic

From playlist Papers Explained

(ML 13.5) Generative process specification

A compact way to specify a model is by its "generative process", using a convenient convention involving the graphical model.

From playlist Machine Learning
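To make the idea of a generative process concrete, here is a sketch for a Gaussian mixture, a common textbook illustration chosen here as an assumption (the video may use a different model). Each observation is produced by first drawing a latent component z_i from a categorical distribution and then drawing x_i from a Gaussian whose mean depends on z_i, mirroring the edge z_i -> x_i in the graphical model. The function name and its parameters are hypothetical.

```python
import random


def generate_gmm_data(n, weights, means, std, seed=None):
    """Sample n (z, x) pairs from a hypothetical Gaussian-mixture generative process:

        for i = 1..n:
            z_i ~ Categorical(weights)      # latent component (a node in the graph)
            x_i ~ Normal(means[z_i], std)   # observation, child of z_i
    """
    rng = random.Random(seed)
    components = range(len(weights))
    data = []
    for _ in range(n):
        z = rng.choices(components, weights=weights)[0]  # draw the latent first
        x = rng.gauss(means[z], std)                      # then the observation given z
        data.append((z, x))
    return data


samples = generate_gmm_data(5, weights=[0.3, 0.7], means=[-5.0, 5.0], std=1.0, seed=0)
for z, x in samples:
    print(f"z={z}  x={x:.2f}")
```

Reading the loop top to bottom gives the same information as the graphical model plus its conditional distributions, which is why the generative process works as a compact specification.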

Generative Modeling by Estimating Gradients of the Data Distribution - Stefano Ermon

Seminar on Theoretical Machine Learning. Topic: Generative Modeling by Estimating Gradients of the Data Distribution. Speaker: Stefano Ermon. Affiliation: Stanford University. Date: May 12, 2020. For more videos, please visit http://video.ias.edu

From playlist Mathematics

Generative Model Basics - Unconventional Neural Networks p.1

Hello and welcome to a series where we will just be playing around with neural networks. The idea here is to poke around with various neural networks, doing unconventional things with them. Doing things like trying to teach a sequence-to-sequence model math, doing classification with a gen

From playlist Unconventional Neural Networks

Deep Generative Models, Stable Diffusion, and the Revolution in Visual Synthesis

We had the pleasure of having Professor Björn Ommer as a guest lecturer in my course SSY340, Deep machine learning, at Chalmers University of Technology. Chapters: 0:00 Introduction; 8:10 Overview of generative models; 15:00 Diffusion models; 19:37 Stable Diffusion; 26:10 Retrieval-Augmented

From playlist Invited talks

Generative AI and Long-Term Memory for LLMs (OpenAI, Cohere, OS, Pinecone)

Generative AI is what many expect to be the next big technology boom, and, being AI, it could have implications far beyond what we'd expect. One of the most thought-provoking use cases of generative AI is Generative Question-Answering (GQA). Now, the most

From playlist Recommended

Plug and Play Language Models: A Simple Approach to Controlled Text Generation | AISC

For slides and more information on the paper, visit https://aisc.ai.science/events/2020-01-13. Discussion lead: Raheleh Makki. Discussion facilitators: Gordon Gibson, Royal Sequeira, and Salman Mohammed. Motivation: Large transformer-based language models (LMs) trained on huge text corpora

From playlist Natural Language Processing

CMU Neural Nets for NLP 2017 (8): Conditioned Generation

This lecture (by Graham Neubig) for CMU CS 11-747, Neural Networks for NLP (Fall 2017) covers:

* Encoder-Decoder Models
* Conditional Generation and Search
* Ensembling
* Evaluation
* Types of Data to Condition On

Slides: http://phontron.com/class/nn4nlp2017/assets/slides/nn4nlp-08-condl

From playlist CMU Neural Nets for NLP 2017

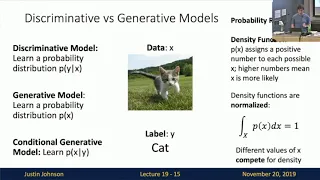

Lecture 19: Generative Models I

Lecture 19 is the first of two lectures about generative models. We compare supervised and unsupervised learning, and also compare discriminative vs generative models. We discuss autoregressive generative models that explicitly model densities, including PixelRNN and PixelCNN. We discuss a

From playlist Tango
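For reference, the autoregressive models named in the entry above (PixelRNN, PixelCNN) obtain an explicit density by factorizing the joint distribution over pixels with the chain rule: fix an ordering of the pixels (raster-scan order in those papers) and model each pixel conditioned on all previous ones. In our own notation, with an image flattened to pixels x_1, ..., x_n:

$$
p(\mathbf{x}) = \prod_{i=1}^{n} p(x_i \mid x_1, \dots, x_{i-1}),
\qquad
\log p(\mathbf{x}) = \sum_{i=1}^{n} \log p(x_i \mid x_1, \dots, x_{i-1})
$$

Because every factor is a normalized conditional distribution, the log-likelihood is exact and can be maximized directly; the price is that sampling must proceed one pixel at a time.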

DeepMind x UCL | Deep Learning Lectures | 9/12 | Generative Adversarial Networks

Generative adversarial networks (GANs), first proposed by Ian Goodfellow et al. in 2014, have emerged as one of the most promising approaches to generative modeling, particularly for image synthesis. In their most basic form, they consist of two "competing" networks: a generator which trie

From playlist Learning resources
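The "competing" networks in the entry above are usually formalized as the two-player minimax game from Goodfellow et al. (2014), with generator G, discriminator D, data distribution p_data, and noise prior p_z:

$$
\min_G \max_D \;
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator is pushed to assign high probability to real samples and low probability to generated ones, while the generator is pushed to fool it. In practice the generator is often trained to maximize log D(G(z)) instead (the "non-saturating" variant suggested in the original paper), because it gives stronger gradients early in training.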

Your Brain on Energy-Based Models: Applying and Scaling EBMs to Problems...- Will Grathwohl

Seminar on Theoretical Machine Learning. Topic: Your Brain on Energy-Based Models: Applying and Scaling EBMs to Problems of Interest to Machine Learning. Speaker: Will Grathwohl. Affiliation: University of Toronto. Date: March 10, 2020. For more videos, please visit http://video.ias.edu

From playlist Mathematics

Automated Text Generation & Data-Augmentation for Medicine, Finance, Law, and E-Commerce

This webinar teaches you how to leverage the human-level text generation capabilities of large Transformer models to increase the accuracy of most NLP classifiers on medical, financial, and legal datasets with the Spark NLP library. We will also explore the next-generation capabilities f

From playlist AI & NLP Webinars

DeepMind x UCL | Deep Learning Lectures | 11/12 | Modern Latent Variable Models

This lecture, by DeepMind Research Scientist Andriy Mnih, explores latent variable models, a powerful and flexible framework for generative modelling. After introducing this framework along with the concept of inference, which is central to it, Andriy focuses on two types of modern latent

From playlist Learning resources
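As background for the lecture above, a latent variable model defines the data density by marginalizing over an unobserved variable z. Since that integral is usually intractable, inference and learning typically go through an approximate posterior q(z | x) and the evidence lower bound (ELBO), the objective optimized by variational autoencoders. In the usual notation (symbols ours, not necessarily the lecture's):

$$
p(x) = \int p(x \mid z)\, p(z)\, dz,
\qquad
\log p(x) \;\ge\; \mathbb{E}_{q(z \mid x)}\big[\log p(x \mid z)\big] - \mathrm{KL}\big(q(z \mid x)\,\|\,p(z)\big)
$$

The gap between the two sides equals KL(q(z | x) || p(z | x)), so tightening the bound and improving posterior inference are the same problem.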

HyperTransformer: Model Generation for Supervised and Semi-Supervised Few-Shot Learning (w/ Author)

This video contains a paper explanation and an interview with author Andrey Zhmoginov! Few-shot learning is an interesting sub-field in meta-learning, with wide applications, such as creating personalized models based on just a handful of data

From playlist Papers Explained

Deep Generative models and Inverse Problems - Alexandros Dimakis

Seminar on Theoretical Machine Learning. Topic: Deep Generative models and Inverse Problems. Speaker: Alexandros Dimakis. Affiliation: University of Texas at Austin. Date: April 23, 2020. For more videos, please visit http://video.ias.edu

From playlist Mathematics

Image generative modeling for future prediction or (...) - Couprie - Workshop 1 - CEB T1 2019

Couprie (Facebook), 05.02.2019. Image generative modeling for future prediction or inspirational purposes. Generative models, and in particular adversarial ones, are becoming prevalent in computer vision as they enable enhancing artistic creation, inspire designers, prove usefulness in s

From playlist 2019 - T1 - The Mathematics of Imaging

Leveraging Language Models for Training Data Generation and Tool Learning

See more slides, notes, and other material here: https://github.com/Aggregate-Intellect/practical-llms/ https://www.linkedin.com/in/gordon-gibson-874b3130/ Large Language Models and Synthetic Data: Research on using unlabeled data to improve large language models is exciting, and the p

From playlist Practical Large Language Models