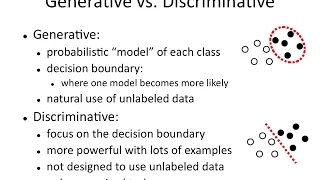

Probabilistic models | Statistical models

Flow-based generative model

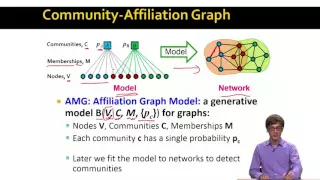

A flow-based generative model is a generative model used in machine learning that explicitly models a probability distribution by leveraging normalizing flow, which is a statistical method using the change-of-variable law of probabilities to transform a simple distribution into a complex one. The direct modeling of likelihood provides many advantages. For example, the negative log-likelihood can be directly computed and minimized as the loss function. Additionally, novel samples can be generated by sampling from the initial distribution, and applying the flow transformation. In contrast, many alternative generative modeling methods such as variational autoencoder (VAE) and generative adversarial network do not explicitly represent the likelihood function. (Wikipedia).