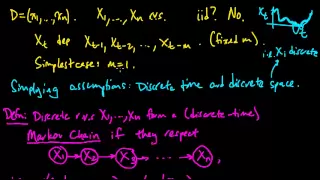

Continuous-time Markov chain

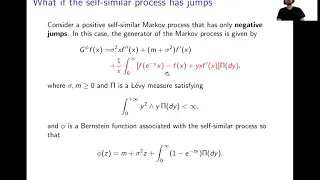

A continuous-time Markov chain (CTMC) is a continuous-time stochastic process in which the process stays in each state for an exponentially distributed random time and then moves to a different state, as specified by the probabilities of a stochastic matrix. An equivalent formulation describes the process as changing state according to the least value of a set of exponential random variables, one for each possible state it can move to, with the parameters determined by the current state.

An example of a CTMC with three states is as follows: the process makes a transition after the amount of time specified by the holding time, an exponential random variable $E_i$, where $i$ is its current state. The holding times are independent, with $E_i \sim \mathrm{Exp}(\lambda_i)$ for each of the three states $i$. When a transition is to be made, the process moves according to the jump chain, a discrete-time Markov chain with stochastic matrix $P = (p_{ij})$, whose diagonal entries are zero because the process always moves to a different state.

Equivalently, by the property of competing exponentials, this CTMC changes state from state $i$ according to the minimum of two random variables $E_{i,j}$, one for each state $j \neq i$, which are independent and such that $E_{i,j} \sim \mathrm{Exp}(q_{ij})$ for $i \neq j$, where the parameters are given by the Q-matrix $Q = (q_{ij})$. Each non-diagonal entry can be computed as the probability that the jump chain moves from state $i$ to state $j$, divided by the expected holding time of state $i$: $q_{ij} = p_{ij} / \mathbb{E}[E_i] = \lambda_i p_{ij}$. The diagonal entries are chosen so that each row sums to 0, which gives $q_{ii} = -\lambda_i$.

A CTMC satisfies the Markov property, that its behavior depends only on its current state and not on its past behavior, due to the memorylessness of the exponential distribution and of discrete-time Markov chains. (Wikipedia).
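The construction above can be sketched in Python. This is a minimal illustration, not a reference implementation: the holding-time rates and jump-chain probabilities below are assumed values chosen for the example, and the helper names `q_matrix` and `simulate` are hypothetical. The sketch builds the Q-matrix from the rates and the jump chain, then simulates a path by drawing an exponential holding time and a jump-chain move in turn.

```python
import random
from fractions import Fraction as F

# Assumed, illustrative parameters for a three-state CTMC.
# rates[i] is the rate of the exponential holding time in state i,
# so the expected holding time in state i is 1 / rates[i].
rates = [F(6), F(12), F(18)]

# Assumed jump-chain stochastic matrix: each row sums to 1 and the
# diagonal is 0, because the process always moves to a different state.
jump = [
    [F(0), F(1, 2), F(1, 2)],
    [F(1, 3), F(0), F(2, 3)],
    [F(5, 6), F(1, 6), F(0)],
]


def q_matrix(rates, jump):
    """Off-diagonal entry: jump probability divided by the expected
    holding time, i.e. rates[i] * jump[i][j]. The diagonal entry is set
    so that the row sums to 0."""
    n = len(rates)
    q = [[rates[i] * jump[i][j] for j in range(n)] for i in range(n)]
    for i in range(n):
        q[i][i] = -rates[i]
    return q


def simulate(rates, jump, start, t_end, rng):
    """Return the list of (jump time, new state) pairs up to time t_end,
    starting with (0.0, start)."""
    t, state = 0.0, start
    path = [(t, state)]
    while True:
        # Exponential holding time in the current state.
        t += rng.expovariate(float(rates[state]))
        if t >= t_end:
            return path
        # Move according to the jump chain row of the current state.
        weights = [float(p) for p in jump[state]]
        state = rng.choices(range(len(rates)), weights=weights)[0]
        path.append((t, state))


Q = q_matrix(rates, jump)
path = simulate(rates, jump, start=0, t_end=1.0, rng=random.Random(0))
```

Exact fractions are used for the Q-matrix so that the row sums are exactly zero; the simulation converts to floats only where the `random` module needs them.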