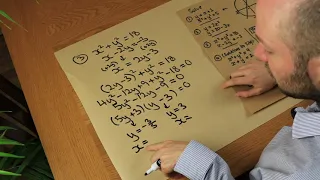

Quadratic Simultaneous Equations

"Solve simultaneous equations where one is quadratic, one is linear."

From playlist Algebra: Simultaneous Equations

Solving a multi step equation with brackets and parenthesis ex 18, 7n+2[3(1–n)–2(1+n)]=14

👉 Learn how to solve multi-step equations. An equation is a statement stating that two values are equal. A multi-step equation is an equation which can be solved by applying multiple steps of operations to get the solution. To solve a multi-step equation, we first use distribution propert

From playlist Solve Multi-Step Equations......Help!
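
As a worked illustration of the equation in the title above (a sketch of one standard route, not a transcription of the video), distribute inside the brackets first, then combine like terms and isolate $n$:

$$
\begin{aligned}
7n + 2[3(1-n) - 2(1+n)] &= 14 \\
7n + 2[3 - 3n - 2 - 2n] &= 14 \\
7n + 2(1 - 5n) &= 14 \\
7n + 2 - 10n &= 14 \\
-3n &= 12 \\
n &= -4
\end{aligned}
$$

Substituting back gives $7(-4) + 2[3(5) - 2(-3)] = -28 + 42 = 14$, which matches the right-hand side.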

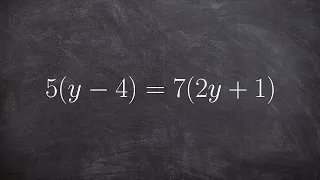

Solving an equation with distributive property on both sides

👉 Learn how to solve multi-step equations with parenthesis and variable on both sides of the equation. An equation is a statement stating that two values are equal. A multi-step equation is an equation which can be solved by applying multiple steps of operations to get to the solution. To

From playlist Solve Multi-Step Equations......Help!

Introduction to Dense Text Representations - Part 2

The second part covers basic training methods to learn dense text representation models. Content Part 2: - Basic Training Methods - Overview Loss Functions - MultipleNegativesRankingLoss - Improving Model Quality with Hard Negatives - Hard Negative Mining Slides: https://nils-reimers.de/

From playlist Introduction to Dense Text Representation

Solving a two step equation with the distributive property

👉 Learn how to solve multi-step equations with parenthesis. An equation is a statement stating that two values are equal. A multi-step equation is an equation which can be solved by applying multiple steps of operations to get to the solution. To solve a multi-step equation with parenthes

From playlist How to Solve Multi Step Equations with Parenthesis

Artificial Intelligence | Image Retrieval By Similarity Tensorflow & Keras | Session 09 | #AI

In this artificial intelligence project series, you will learn image retrieval by similarity using Tensorflow and Keras. This series will cover the necessary details to help you learn about artificial intelligence and in the process, you will learn image retrie

From playlist Image Retrieval By Similarity Tensorflow & Keras

Solving a multi step equation with variables on both sides 5+3r=5r–19

👉 Learn how to solve multi-step equations with variable on both sides of the equation. An equation is a statement stating that two values are equal. A multi-step equation is an equation which can be solved by applying multiple steps of operations to get to the solution. To solve a multi-s

From playlist How to Solve Multi Step Equations with Variables on Both Sides
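
For the equation in the title above, one possible sequence of steps (an illustration, not necessarily the order used in the video) is to collect the variable terms on one side and the constants on the other:

$$
\begin{aligned}
5 + 3r &= 5r - 19 \\
5 + 19 &= 5r - 3r \\
24 &= 2r \\
r &= 12
\end{aligned}
$$

Checking: $5 + 3(12) = 41$ and $5(12) - 19 = 41$, so both sides agree.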

Artificial Intelligence | Image Retrieval By Similarity Tensorflow & Keras | Session 10 | #AI

In this artificial intelligence project series, you will learn image retrieval by similarity using Tensorflow and Keras. This series will cover the necessary details to help you learn about artificial intelligence and in the process, you will learn image retrie

From playlist Image Retrieval By Similarity Tensorflow & Keras

Content Graphs: Multi-Task NLP Approach for Cataloging

Install NLP Libraries https://www.johnsnowlabs.com/install/ Register for Healthcare NLP Summit 2023: https://www.nlpsummit.org/#register Watch all NLP Summit 2022 sessions: https://www.nlpsummit.org/nlp-summit-2022-watch-now/ Presented by Sakshi Bhargava, Staff Data Scientist at Chegg

From playlist NLP Summit 2022

Using distributive property and combining like terms to solve linear equations

👉 Learn how to solve multi-step equations with parenthesis and variable on both sides of the equation. An equation is a statement stating that two values are equal. A multi-step equation is an equation which can be solved by applying multiple steps of operations to get to the solution. To

From playlist How to Solve Multi Step Equations with Parenthesis on Both Sides

Solve a multi step equation with variables on the same side ex 15, 4(3y–1)–5y=–11

👉 Learn how to solve multi-step equations with parenthesis. An equation is a statement stating that two values are equal. A multi-step equation is an equation which can be solved by applying multiple steps of operations to get to the solution. To solve a multi-step equation with parenthes

From playlist How to Solve Multi Step Equations with Parenthesis
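
The titled equation can be worked through as follows (a sketch under the usual approach of distributing first, then combining like terms; the video may present it differently):

$$
\begin{aligned}
4(3y - 1) - 5y &= -11 \\
12y - 4 - 5y &= -11 \\
7y - 4 &= -11 \\
7y &= -7 \\
y &= -1
\end{aligned}
$$

Checking: $4(3(-1) - 1) - 5(-1) = -16 + 5 = -11$.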

Stanford CS330 I Unsupervised Pre-Training:Contrastive Learning l 2022 I Lecture 7

For more information about Stanford's Artificial Intelligence programs visit: https://stanford.io/ai To follow along with the course, visit: https://cs330.stanford.edu/ To view all online courses and programs offered by Stanford, visit: http://online.stanford.edu Chelsea Finn Computer

From playlist Stanford CS330: Deep Multi-Task and Meta Learning I Autumn 2022

Fairness in commercial face recognition algorithms

Session 3 – Dr Santhosh Narayanan, The Alan Turing Institute

From playlist Trustworthy Digital Identity – Workshop, December 2022

Deep Learning for Natural Language Processing with Jon Krohn

Jon Krohn introduces how to preprocess natural language data. He then uses hands-on code demos to build deep learning networks that make predictions using those data. This lesson is an excerpt from "Deep Learning for Natural Language Processing LiveLessons, 2nd Edition." Purchase entire

From playlist Talks and Tutorials

Supervised Contrastive Learning

The cross-entropy loss has been the default in deep learning for the last few years for supervised learning. This paper proposes a new loss, the supervised contrastive loss, and uses it to pre-train the network in a supervised fashion. The resulting model, when fine-tuned to ImageNet, achi

From playlist General Machine Learning

Quantum Transport, Lecture 12: Spin Qubits

Instructor: Sergey Frolov, University of Pittsburgh, Spring 2013 http://sergeyfrolov.wordpress.com/ Summary: single spin qubits and singlet-triplet qubits in group III-V semiconductor quantum dots, and silicon-based structures. Quantum Transport course development supported in part by the

From playlist Quantum Transport

Solve a multi step equation with two variables and distributive property ex 19, –7=3(t–5)–t

👉 Learn how to solve multi-step equations with parenthesis. An equation is a statement stating that two values are equal. A multi-step equation is an equation which can be solved by applying multiple steps of operations to get to the solution. To solve a multi-step equation with parenthes

From playlist How to Solve Multi Step Equations with Parenthesis
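
The equation in the title contains a single variable, $t$, appearing twice. A possible worked solution (an illustration, not taken from the video) distributes the 3 and then combines the two $t$ terms:

$$
\begin{aligned}
-7 &= 3(t - 5) - t \\
-7 &= 3t - 15 - t \\
-7 &= 2t - 15 \\
8 &= 2t \\
t &= 4
\end{aligned}
$$

Checking: $3(4 - 5) - 4 = -3 - 4 = -7$.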

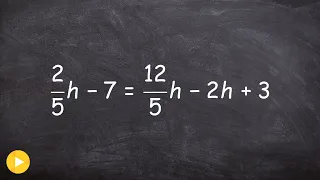

How to solve multi step equations with fractional coefficients

👉 Learn how to solve multi-step equations with variable on both sides of the equation. An equation is a statement stating that two values are equal. A multi-step equation is an equation which can be solved by applying multiple steps of operations to get to the solution. To solve a multi-s

From playlist How to Solve Multi Step Equations with Variables on Both Sides

Engineering Single and N-Photon Emission from Frequency Resolved Correlations by Elena Del Valle

PROGRAM NON-HERMITIAN PHYSICS (ONLINE) ORGANIZERS: Manas Kulkarni (ICTS, India) and Bhabani Prasad Mandal (Banaras Hindu University, India) DATE: 22 March 2021 to 26 March 2021 VENUE: Online Non-Hermitian Systems / Open Quantum Systems are not only of fundamental interest in physics a

From playlist Non-Hermitian Physics (ONLINE)

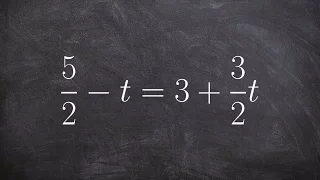

Solving a multi-step equation by multiplying by the denominator

👉 Learn how to solve multi-step equations with variable on both sides of the equation. An equation is a statement stating that two values are equal. A multi-step equation is an equation which can be solved by applying multiple steps of operations to get to the solution. To solve a multi-s

From playlist How to Solve Multi Step Equations with Variables on Both Sides