What is Neural Network in Machine Learning | Neural Network Explained | Neural Network | Simplilearn

This Simplilearn tutorial covers the fundamentals of neural networks in machine learning, with theoretical and practical demonstrations.

From playlist Machine Learning Algorithms [2022 Updated]

This lecture gives an overview of neural networks, which play an important role in machine learning today. Book website: http://databookuw.com/ Steve Brunton's website: eigensteve.com

From playlist Intro to Data Science

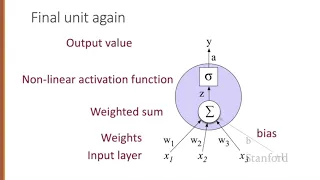

Neural Networks 1 Neural Units

From playlist Week 5: Neural Networks

Deep Learning with Neural Networks and TensorFlow Introduction

Welcome to a new section in our Machine Learning Tutorial series: Deep Learning with Neural Networks and TensorFlow. The artificial neural network is a biologically-inspired methodology to conduct machine learning, intended to mimic your brain (a biological neural network). The Artificial

From playlist Machine Learning with Python

Practical 4.0 – RNN, vectors and sequences

Recurrent Neural Networks – Vectors and sequences. Full project: https://github.com/Atcold/torch-Video-Tutorials Links to the papers: Vinyals et al. (2016) https://arxiv.org/abs/1609.06647 Zaremba & Sutskever (2015) https://arxiv.org/abs/1410.4615 Cho et al. (2014) https://arxiv.org/abs/1406

From playlist Deep-Learning-Course

Star Network - Intro to Algorithms

This video is part of an online course, Intro to Algorithms. Check out the course here: https://www.udacity.com/course/cs215.

From playlist Introduction to Algorithms

Recurrent Neural Networks (RNN) - Deep Learning with Neural Networks and TensorFlow 10

In this Deep Learning with TensorFlow tutorial, we cover the basics of the Recurrent Neural Network, along with the LSTM (Long Short Term Memory) cell, a very commonly used RNN cell. https://pythonprogramming.net https://twitter.com/sentdex https://www.facebook.com/pythonprogrammin

From playlist Machine Learning with Python

SBERT (Sentence Transformers) is not BERT Sentence Embedding: Intro & Tutorial (#sbert Ep 37)

SBERT is not BERT Sentence Embedding (Introduction, Tutorial). Many viewers ask about SBERT Sentence Transformers and confuse it with BERT sentence vectors or embeddings. I try to clarify both systems and explain why one outperforms the other for sentence semantic similarity.

From playlist SBERT: Python Code Sentence Transformers: a Bi-Encoder /Transformer model #sbert

Neural Network Python | How to make a Neural Network in Python | Python Tutorial | Edureka

🔥Edureka Machine Learning Engineer Masters Program: https://www.edureka.co/masters-program/machine-learning-engineer-training This Edureka video is a part of the Python Tutorial series which will give you a detailed explanation of how neural networks work in Python. Modeled in accordance w

From playlist Python Programming Tutorials | Edureka

Stanford CS330 Deep Multi-Task & Meta Learning - Non-Parametric Few-Shot Learning l 2022 I Lecture 6

For more information about Stanford's Artificial Intelligence programs visit: https://stanford.io/ai To follow along with the course, visit: https://cs330.stanford.edu/ To view all online courses and programs offered by Stanford, visit: http://online.stanford.edu Chelsea Finn Computer

From playlist Stanford CS330: Deep Multi-Task and Meta Learning I Autumn 2022

Transformer Neural Networks - an Overview!

Let's talk about Recurrent Networks, Transformer Neural Networks, BERT Networks and Sentence Transformers all in one video! Follow me on Medium: https://towardsdatascience.com/likelihood-probability-and-the-math-you-should-know-9bf66db5241b Join us on Discord: https://discord.

From playlist Transformer Neural Networks

AI Learns Geometric Descriptors From Depth Images | Two Minute Papers #148

The paper "3DMatch: Learning Local Geometric Descriptors from RGB-D Reconstructions" is available here: http://3dmatch.cs.princeton.edu/ Recommended for you: Our earlier episode on Siamese networks - https://www.youtube.com/watch?v=a3sgFQjEfp4 WE WOULD LIKE TO THANK OUR GENEROUS PATREON

From playlist AI and Deep Learning - Two Minute Papers

Intro to Sentence Embeddings with Transformers

Transformers have wholly rebuilt the landscape of natural language processing (NLP). Before transformers, we had okay translation and language classification thanks to recurrent neural nets (RNNs) - their language comprehension was limited and led to many minor mistakes, and coherence over

From playlist NLP for Semantic Search Course

Photorealistic Images from Drawings | Two Minute Papers #80

The Two Minute Papers subreddit is available here: https://www.reddit.com/r/twominutepapers/ Using convolutional neural networks (a powerful deep learning technique), it is now possible to build an application that takes a rough sketch as input and fetches photorealistic images

From playlist AI and Deep Learning - Two Minute Papers

Fine-tune Sentence Transformers the OG Way (with NLI Softmax loss)

Sentence embeddings with transformers can be used across a range of applications, such as semantic textual similarity (STS), semantic clustering, or information retrieval (IR) using concepts rather than words. This video dives deeper into the training process of the first sentence transfo

From playlist NLP for Semantic Search Course

CMU Neural Nets for NLP 2017 (7): Using/Evaluating Sentence Representations

This lecture (by Graham Neubig) for CMU CS 11-747, Neural Networks for NLP (Fall 2017) covers sentence similarity, textual entailment, paraphrase identification, and retrieval. Slides: http://phontron.com/class/nn4nlp2017/assets/slides/nn4nlp-07-sentrep.pdf Code Examples: https://github

From playlist CMU Neural Nets for NLP 2017

Painter by Numbers | by Kiri Nichol | Kaggle Days San Francisco

Kiri Nichol "Painter by Numbers" This is a two-part presentation: Part 1: designing the Painter By Numbers competition Part 2 (https://youtu.be/Y2pSEZC1fhI?t=889): predictions about what machine learning - and Kaggle - might look like in the next couple years. About the presenter: After

From playlist Kaggle Days San Francisco Edition | by LogicAI + Kaggle

Machine Learning @ Amazon by Rajeev Rastogi

Discussion meeting: The Theoretical Basis of Machine Learning (ML). Organizers: Chiranjib Bhattacharya, Sunita Sarawagi, Ravi Sundaram and SVN Vishwanathan. Date: 27 December 2018 to 29 December 2018. Venue: Ramanujan Lecture Hall, ICTS, Bangalore. ML (Machine Learning) has enjoyed tr

From playlist The Theoretical Basis of Machine Learning 2018 (ML)

What Is Quantum Computing | Quantum Computing Explained | Quantum Computer | #Shorts | Simplilearn

🔥Explore Our Free Courses With Completion Certificate by SkillUp: https://www.simplilearn.com/skillup-free-online-courses?utm_campaign=QuantumComputingShorts&utm_medium=ShortsDescription&utm_source=youtube Quantum computing is a branch of computing that focuses on developing computer tech

From playlist #Shorts | #Simplilearn