Loss functions for classification

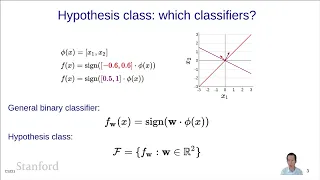

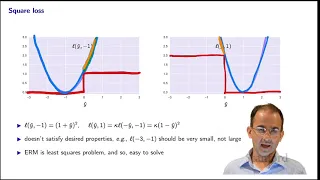

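In machine learning and mathematical optimization, loss functions for classification are computationally feasible loss functions representing the price paid for inaccuracy of predictions in classification problems (problems of identifying which category a particular observation belongs to). Given $\mathcal{X}$ as the space of all possible inputs (usually $\mathcal{X} \subset \mathbb{R}^d$), and $\mathcal{Y} = \{-1, 1\}$ as the set of labels (possible outputs), a typical goal of classification algorithms is to find a function $f : \mathcal{X} \to \mathcal{Y}$ which best predicts a label $y$ for a given input $\vec{x}$. However, because of incomplete information, noise in the measurement, or probabilistic components in the underlying process, it is possible for the same $\vec{x}$ to generate different $y$. As a result, the goal of the learning problem is to minimize expected loss (also known as the risk), defined as

$$I[f] = \int_{\mathcal{X} \times \mathcal{Y}} V(f(\vec{x}), y)\, p(\vec{x}, y)\, d\vec{x}\, dy,$$

where $V(f(\vec{x}), y)$ is a given loss function, and $p(\vec{x}, y)$ is the probability density function of the process that generated the data, which can equivalently be written as

$$p(\vec{x}, y) = p(y \mid \vec{x})\, p(\vec{x}).$$

Within classification, several commonly used loss functions are written solely in terms of the product of the true label $y$ and the predicted label $f(\vec{x})$. Therefore, they can be defined as functions of only one variable $\upsilon = y f(\vec{x})$, so that $V(f(\vec{x}), y) = \phi(y f(\vec{x})) = \phi(\upsilon)$ with a suitably chosen function $\phi : \mathbb{R} \to \mathbb{R}$. These are called margin-based loss functions. Choosing a margin-based loss function amounts to choosing $\phi$. Selection of a loss function within this framework impacts the optimal $f^{*}_{\phi}$ which minimizes the expected risk.

In the case of binary classification, it is possible to simplify the calculation of expected risk from the integral specified above. Specifically,

$$
\begin{aligned}
I[f] &= \int_{\mathcal{X} \times \mathcal{Y}} V(f(\vec{x}), y)\, p(\vec{x}, y)\, d\vec{x}\, dy \\
&= \int_{\mathcal{X}} \int_{\mathcal{Y}} \phi(y f(\vec{x}))\, p(y \mid \vec{x})\, p(\vec{x})\, dy\, d\vec{x} \\
&= \int_{\mathcal{X}} \left[ \phi(f(\vec{x}))\, p(1 \mid \vec{x}) + \phi(-f(\vec{x}))\, p(-1 \mid \vec{x}) \right] p(\vec{x})\, d\vec{x} \\
&= \int_{\mathcal{X}} \left[ \phi(f(\vec{x}))\, p(1 \mid \vec{x}) + \phi(-f(\vec{x}))\, (1 - p(1 \mid \vec{x})) \right] p(\vec{x})\, d\vec{x}.
\end{aligned}
$$

The second equality follows from the properties described above. The third equality follows from the fact that 1 and −1 are the only possible values for $y$, and the fourth because $p(-1 \mid \vec{x}) = 1 - p(1 \mid \vec{x})$. The term within brackets, $\phi(f(\vec{x}))\, p(1 \mid \vec{x}) + \phi(-f(\vec{x}))\, (1 - p(1 \mid \vec{x}))$, is known as the conditional risk.

One can solve for the minimizer of $I[f]$ by taking the functional derivative of the last equality with respect to $f$ and setting the derivative equal to 0.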
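As a numerical illustration of the conditional risk, the following minimal sketch evaluates it on a grid of candidate values of $f$ at a single fixed input and picks the smallest. The two losses (square and exponential), the value `eta = 0.8` standing in for $p(1 \mid \vec{x})$, and all function names are assumptions chosen for the example; they are not prescribed by the text above. The closed forms used for comparison are the ones obtained by setting the derivative of the conditional risk to zero for these two particular losses.

```python
import math

def conditional_risk(phi, f, eta):
    """Conditional risk eta*phi(f) + (1 - eta)*phi(-f) at one fixed input x."""
    return eta * phi(f) + (1.0 - eta) * phi(-f)

def minimize_on_grid(phi, eta, lo=-3.0, hi=3.0, steps=6001):
    """Return the grid point f in [lo, hi] with the smallest conditional risk."""
    best_f, best_risk = lo, float("inf")
    for i in range(steps):
        f = lo + (hi - lo) * i / (steps - 1)
        risk = conditional_risk(phi, f, eta)
        if risk < best_risk:
            best_f, best_risk = f, risk
    return best_f

# Two example margin-based losses phi(v), with v = y * f(x) (illustrative choices).
def square_loss(v):
    return (1.0 - v) ** 2

def exponential_loss(v):
    return math.exp(-v)

eta = 0.8  # assumed value of p(1 | x) at the fixed input

f_square = minimize_on_grid(square_loss, eta)
f_exp = minimize_on_grid(exponential_loss, eta)

# Compare the grid minimizers with the closed forms for these two losses.
print(f_square, 2 * eta - 1)
print(f_exp, 0.5 * math.log(eta / (1 - eta)))
```

Both minimizers are positive when `eta` exceeds 0.5, so their sign agrees with the more probable label, although the two losses place the optimum at different values.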
Setting this derivative to zero results in the following equation:

$$\frac{\partial \phi(f)}{\partial f}\, \eta + \frac{\partial \phi(-f)}{\partial f}\, (1 - \eta) = 0,$$

where $\eta = p(y = 1 \mid \vec{x})$, which is also equivalent to setting the derivative of the conditional risk equal to zero.

Given the binary nature of classification, a natural selection for a loss function (assuming equal cost for false positives and false negatives) would be the 0-1 loss function (0–1 indicator function), which takes the value 0 if the predicted classification equals the true class and 1 if it does not. This selection is modeled by

$$V(f(\vec{x}), y) = H(-y f(\vec{x})),$$

where $H$ indicates the Heaviside step function. However, this loss function is non-convex and non-smooth, and solving for the optimal solution is an NP-hard combinatorial optimization problem. As a result, it is better to substitute loss function surrogates which are tractable for commonly used learning algorithms, as they have convenient properties such as being convex and smooth. In addition to their computational tractability, one can show that the solutions to the learning problem using these loss surrogates allow for the recovery of the actual solution to the original classification problem. Some of these surrogates are described below.

In practice, the probability distribution $p(\vec{x}, y)$ is unknown. Consequently, utilizing a training set of $n$ independently and identically distributed sample points

$$S = \{(\vec{x}_1, y_1), \dots, (\vec{x}_n, y_n)\}$$

drawn from the data sample space, one seeks to minimize empirical risk

$$I_S[f] = \frac{1}{n} \sum_{i=1}^{n} V(f(\vec{x}_i), y_i)$$

as a proxy for expected risk. (See statistical learning theory for a more detailed description.) (Wikipedia).
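The empirical risk can be sketched in a few lines for both the 0-1 loss and a convex, smooth surrogate. This is a minimal sketch under stated assumptions: the exponential loss is used only as an example surrogate, the six-point training set and the linear scoring function `f` are hypothetical, and a margin of exactly zero is counted as an error (one possible convention for the value of the step function at 0).

```python
import math

def zero_one_loss(margin):
    """0-1 loss as a function of the margin y * f(x).
    Assumption: a margin of exactly 0 is counted as an error."""
    return 1.0 if margin <= 0 else 0.0

def exponential_loss(margin):
    """exp(-margin): a convex, smooth surrogate (example choice)."""
    return math.exp(-margin)

def empirical_risk(loss, f, samples):
    """Average of loss(y * f(x)) over the training set."""
    return sum(loss(y * f(x)) for x, y in samples) / len(samples)

# Hypothetical 1-D training set of (x, y) pairs with labels in {-1, +1}.
samples = [(-2.0, -1), (-1.0, -1), (-0.5, 1), (0.5, 1), (1.5, 1), (2.0, -1)]

def f(x):
    """Hypothetical linear scoring function; its sign is the predicted label."""
    return 0.8 * x

risk_01 = empirical_risk(zero_one_loss, f, samples)
risk_exp = empirical_risk(exponential_loss, f, samples)
print(risk_01, risk_exp)
```

With this data, two of the six points are misclassified, so the empirical 0-1 risk is 2/6. The exponential loss is at least 1 whenever the margin is non-positive and is positive otherwise, so its empirical risk is never smaller than the 0-1 risk on the same sample.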