Entropy production during free expansion of an ideal gas by Subhadip Chakraborti

Abstract: According to the second law, the entropy of an isolated system increases during its evolution from one equilibrium state to another. The free expansion of a gas, on removal of a partition in a box, is an example where we expect to see such an increase of entropy. The constructi

From playlist Seminar Series

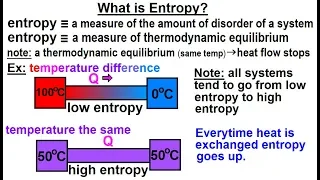

Physics - Thermodynamics 2: Ch 32.7 Thermo Potential (10 of 25) What is Entropy?

Visit http://ilectureonline.com for more math and science lectures! In this video I explain and give examples of what entropy is. 1) Entropy is a measure of the amount of disorder (randomness) of a system. 2) Entropy is a measure of thermodynamic equilibrium. Low entropy implies heat flow t

From playlist PHYSICS 32.7 THERMODYNAMIC POTENTIALS

Maximum principle for heat equation

In this video, I present the maximum principle, which is a very interesting property of the heat equation: namely, the largest (and smallest) value of solutions is attained either initially, or on the sides! Check out my PDE Playlist: https://www.yout

From playlist Partial Differential Equations

Teach Astronomy - Entropy of the Universe

http://www.teachastronomy.com/ The entropy of the universe is a measure of its disorder or chaos. If the laws of thermodynamics apply to the universe as a whole as they do to individual objects or systems within the universe, then the fate of the universe must be to increase in entropy.

From playlist 23. The Big Bang, Inflation, and General Cosmology 2

Maximum and Minimum of a set

In this video, I define the maximum and minimum of a set, and show that they don't always exist. Enjoy! Check out my Real Numbers Playlist: https://www.youtube.com/playlist?list=PLJb1qAQIrmmCZggpJZvUXnUzaw7fHCtoh

From playlist Real Numbers

Entropy: The Heat Death of The Universe

Entropy: The Heat Death of The Universe - https://aperture.gg/heatdeath Sign up with Brilliant for FREE and start learning today: https://brilliant.org/aperture "Maximum Entropy" Hoodies — Available Now: https://aperture.gg/entropy As the arrow of time pushes us forward, each day the univ

From playlist Science & Technology 🚀

Thermodynamics and the End of the Universe: Energy, Entropy, and the fundamental laws of physics.

Easy to understand animation explaining energy, entropy, and all the basic concepts including refrigeration, heat engines, and the end of all life in the Universe.

From playlist Science

Thermodynamics 4a - Entropy and the Second Law I

The Second Law of Thermodynamics is one of the most important laws in all of physics. But it is also one of the more difficult to understand. Central to it are the concepts of reversibility and entropy. Note on the definition of a "closed system." I am using the term "closed system" in th

From playlist Thermodynamics

Stanford CS229: Machine Learning | Summer 2019 | Lecture 19 - Maximum Entropy and Calibration

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3m4pnSp Anand Avati Computer Science, PhD To follow along with the course schedule and syllabus, visit: http://cs229.stanford.edu/syllabus-summer2019.html

From playlist Stanford CS229: Machine Learning Course | Summer 2019 (Anand Avati)

Free ebook https://bookboon.com/en/partial-differential-equations-ebook What is the maximum principle for partial differential equations and how is it useful? The main result is presented and proved. Such ideas have important applications to understanding the behaviour of solutions to pa

From playlist Partial differential equations

Statistical Rethinking Winter 2019 Lecture 11

Lecture 11 of the Dec 2018 through March 2019 edition of Statistical Rethinking: A Bayesian Course with R and Stan. Covers Chapters 10 and 11: maximum entropy, generalized linear models.

From playlist Statistical Rethinking Winter 2019

Ben Andrews: Limiting shapes of fully nonlinear flows of convex hypersurfaces

Abstract: I will discuss some questions about the long-time behaviour of hypersurfaces evolving by functions of curvature which are homogeneous of degree greater than 1.

From playlist MATRIX-SMRI Symposium: Singularities in Geometric Flows

Entropy Bounds, Light-sheets, and the Holographic Principle in Cosmology, part 1 - Raphael Bousso

Raphael Bousso, University of California, Berkeley, July 28, 2011

From playlist PiTP 2011

La théorie l’information sans peine - Bourbaphy - 17/11/18

Olivier Rioul (Telecom Paris Tech) / 17.11.2018 La théorie l’information sans peine. You can join us on social media to follow our news. Facebook: https://www.facebook.com/InstitutHenriPoincare/ Twitter: https://twitter.com

From playlist Bourbaphy - 17/11/18 - L'information

Lecture 7 | Topics in String Theory

(February 28, 2011) Leonard Susskind gives a lecture on string theory and particle physics that continues the theory behind calculating the entropy of a black hole. In the last course of this series, Leonard Susskind continues his exploration of string theory that attempts to reconcil

From playlist Lecture Collection | Topics in String Theory (Winter 2011)

Abstraction - Seminar 4 - Sufficient statistics and the Koopman-Pitman-Darmois theorem

This seminar series is on the relations among Natural Abstraction, Renormalisation and Resolution. This week Alexander Oldenziel explains some more of Wentworth's view of natural abstractions and presents the story of sufficient statistics and the Koopman-Pitman-Darmois theorem. The webpage f

From playlist Abstraction

undergraduate machine learning 11: Maximum likelihood

Introduction to maximum likelihood. The slides are available here: http://www.cs.ubc.ca/~nando/340-2012/lectures.php This course was taught in 2012 at UBC by Nando de Freitas

From playlist undergraduate machine learning at UBC 2012

Second Law of Thermodynamics, Entropy & Gibbs Free Energy

Here is a lecture for understanding the 2nd law of thermodynamics in a conceptual way. Along with the 2nd law, the concepts of entropy and Gibbs free energy are also explained. Check http://www.learnengineering.org/2012/12/understanding-second-law-of.html to get to know about industrial applications of se

From playlist Mechanical Engineering

Topics in Combinatorics lecture 10.0 --- The formula for entropy

In this video I present the formula for the entropy of a random variable that takes values in a finite set, prove that it satisfies the entropy axioms, and prove that it is the only formula that satisfies the entropy axioms. 0:00 The formula for entropy and proof that it satisfies the ax

From playlist Topics in Combinatorics (Cambridge Part III course)

Statistical Rethinking - Lecture 13

Lecture 13 - Generalized Linear Models (intro) - Statistical Rethinking: A Bayesian Course with R Examples

From playlist Statistical Rethinking Winter 2015