Maxwell-Boltzmann distribution

Entropy and the Maxwell-Boltzmann velocity distribution. Also discusses why this is different from the Bose-Einstein and Fermi-Dirac energy distributions for quantum particles. My Patreon page is at https://www.patreon.com/EugeneK 00:00 Maxwell-Boltzmann distribution 02:45 Higher Temper

From playlist Physics
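
For reference, the Maxwell-Boltzmann speed distribution for an ideal gas of particles of mass m at temperature T is f(v) = 4π (m / (2π k_B T))^(3/2) v² exp(-m v² / (2 k_B T)). The Python sketch below is a minimal illustration that is not taken from the video: it assumes SI units and uses a single N2 molecule at 300 K as a hypothetical example gas. It evaluates f(v) and checks numerically that the density integrates to 1 and that its mean matches the closed form sqrt(8 k_B T / (π m)).

```python
import math

K_B = 1.380649e-23     # Boltzmann constant in J/K (exact SI value)
N_A = 6.02214076e23    # Avogadro constant in 1/mol (exact SI value)

def mb_speed_pdf(v: float, m: float, T: float) -> float:
    """Maxwell-Boltzmann probability density for molecular speed v (m/s)."""
    a = m / (2.0 * K_B * T)
    # (a / pi)^(3/2) equals (m / (2 pi k_B T))^(3/2)
    return 4.0 * math.pi * (a / math.pi) ** 1.5 * v * v * math.exp(-a * v * v)

def integrate(f, lo: float, hi: float, n: int = 20000) -> float:
    """Midpoint-rule integral of f over [lo, hi]."""
    h = (hi - lo) / n
    return h * sum(f(lo + (i + 0.5) * h) for i in range(n))

m_n2 = 28.0134e-3 / N_A   # mass of one N2 molecule in kg (hypothetical example gas)
T = 300.0                 # temperature in K (hypothetical example value)
v_p = math.sqrt(2 * K_B * T / m_n2)   # most probable speed

# The tail beyond 10 * v_p is negligible (it scales like exp(-100)).
total = integrate(lambda v: mb_speed_pdf(v, m_n2, T), 0.0, 10 * v_p)
mean = integrate(lambda v: v * mb_speed_pdf(v, m_n2, T), 0.0, 10 * v_p)
print(f"v_p = {v_p:.0f} m/s, normalization = {total:.6f}, mean speed = {mean:.0f} m/s")
```

Because v_p = sqrt(2 k_B T / m) grows as sqrt(T), raising the temperature moves the peak to higher speeds and lowers it, since the total area must stay 1.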

UNIFORM Probability Distribution for Discrete Random Variables (9-5)

Uniform Probability Distribution (i.e., a rectangular distribution): a probability distribution of one random variable in which every outcome has the same, constant probability. Each potential outcome is equally likely; for example, when flipping a fair coin, heads and tails are always 50/50. On Chaos Night, Dante experiment

From playlist Discrete Probability Distributions in Statistics (WK 9 - QBA 237)
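
For a discrete uniform random variable with n equally likely outcomes, each outcome has probability 1/n, so the probability of an event is the number of outcomes it contains divided by n. A minimal Python sketch, using exact fractions and a fair six-sided die as an illustrative example (the coin from the description is the n = 2 case):

```python
from fractions import Fraction

def uniform_pmf(outcomes):
    """Discrete uniform distribution: every outcome gets probability 1/n."""
    outcomes = list(outcomes)
    n = len(outcomes)
    return {x: Fraction(1, n) for x in outcomes}

def prob(pmf, event):
    """P(event) = sum of the pmf over the outcomes in the event."""
    return sum(pmf[x] for x in event if x in pmf)

coin = uniform_pmf(["H", "T"])
die = uniform_pmf(range(1, 7))
print(coin["H"])              # 1/2
print(prob(die, {2, 4, 6}))   # 1/2 (three of six faces are even)
mean = sum(x * p for x, p in die.items())
print(mean)                   # 7/2
```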

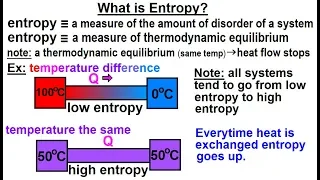

Physics - Thermodynamics 2: Ch 32.7 Thermo Potential (10 of 25) What is Entropy?

Visit http://ilectureonline.com for more math and science lectures! In this video I explain and give examples of what entropy is. 1) entropy is a measure of the amount of disorder (randomness) of a system. 2) entropy is a measure of thermodynamic equilibrium. Low entropy implies heat flow t

From playlist PHYSICS 32.7 THERMODYNAMIC POTENTIALS

Entropy production during free expansion of an ideal gas by Subhadip Chakraborti

Abstract: According to the second law, the entropy of an isolated system increases during its evolution from one equilibrium state to another. The free expansion of a gas, on removal of a partition in a box, is an example where we expect to see such an increase of entropy. The constructi

From playlist Seminar Series
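
For an ideal gas, free expansion into a vacuum involves no work and no heat exchange, so the temperature is unchanged. Because entropy is a state function, the change can be computed along a reversible isothermal path between the same end states, giving ΔS = N k_B ln(V_f / V_i). Equivalently, each particle's accessible volume grows by V_f / V_i, multiplying the number of microstates by (V_f / V_i)^N. A minimal Python sketch, assuming one mole of gas whose volume doubles when the partition is removed (illustrative numbers, not from the talk):

```python
import math

K_B = 1.380649e-23      # Boltzmann constant, J/K
N_A = 6.02214076e23     # Avogadro constant, 1/mol

def free_expansion_entropy(n_particles: float, v_initial: float, v_final: float) -> float:
    """Entropy change (J/K) of an ideal gas expanding freely from v_initial to v_final.

    Uses a reversible isothermal path with the same end states, which is valid
    because the ideal-gas temperature does not change in free expansion.
    """
    return n_particles * K_B * math.log(v_final / v_initial)

dS = free_expansion_entropy(N_A, 1.0, 2.0)   # one mole, volume doubled
print(f"Delta S = {dS:.3f} J/K")             # equals R ln 2, about 5.763 J/K
```

The result is positive whenever V_f > V_i, consistent with the second-law increase the abstract describes.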

Topics in Combinatorics lecture 10.0 --- The formula for entropy

In this video I present the formula for the entropy of a random variable that takes values in a finite set, prove that it satisfies the entropy axioms, and prove that it is the only formula that satisfies the entropy axioms. 0:00 The formula for entropy and proof that it satisfies the ax

From playlist Topics in Combinatorics (Cambridge Part III course)
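
The formula in question is the Shannon entropy H(X) = -Σ p(x) log p(x), summed over the finite set of values, with the convention 0 log 0 = 0. A minimal Python sketch, assuming base-2 logarithms so the result is in bits (the base is a normalization choice, not something the lecture is quoted as fixing):

```python
import math

def entropy(probs):
    """Shannon entropy in bits of a finite distribution; p = 0 terms contribute 0."""
    if any(p < 0 for p in probs) or abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probs must be non-negative and sum to 1")
    return sum(-p * math.log2(p) for p in probs if p > 0)

print(entropy([0.5, 0.5]))   # 1.0 (a fair coin)
print(entropy([1.0, 0.0]))   # 0.0 (a certain outcome)
print(entropy([0.25] * 4))   # 2.0 (uniform on four values)
```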

Teach Astronomy - Entropy of the Universe

http://www.teachastronomy.com/ The entropy of the universe is a measure of its disorder or chaos. If the laws of thermodynamics apply to the universe as a whole as they do to individual objects or systems within the universe, then the fate of the universe must be to increase in entropy.

From playlist 23. The Big Bang, Inflation, and General Cosmology 2

Entropy: The Heat Death of The Universe

Entropy: The Heat Death of The Universe - https://aperture.gg/heatdeath As the arrow of time pushes us forward, each day the univ

From playlist Science & Technology 🚀

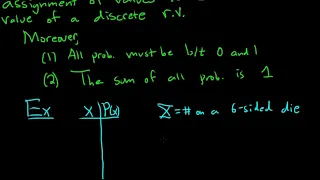

Definition of a Discrete Probability Distribution

From playlist Statistics
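
A discrete probability distribution assigns a probability P(X = x) to each value x of a discrete random variable so that every probability lies between 0 and 1 and all the probabilities sum to 1. A minimal Python sketch of that check, using exact fractions; the example tables are made up for illustration:

```python
from fractions import Fraction

def is_discrete_distribution(table):
    """True if every P(X = x) is in [0, 1] and the probabilities sum to 1."""
    probs = list(table.values())
    return all(0 <= p <= 1 for p in probs) and sum(probs) == 1

valid = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}
too_big = {0: Fraction(1, 2), 1: Fraction(3, 4)}      # sums to 5/4
negative = {0: Fraction(3, 2), 1: Fraction(-1, 2)}    # sums to 1 but has p < 0 and p > 1
print(is_discrete_distribution(valid))     # True
print(is_discrete_distribution(too_big))   # False
print(is_discrete_distribution(negative))  # False
```

Exact fractions avoid false failures from floating-point rounding in the sum-to-one test.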

Uniform Probability Distribution Examples

Overview and definition of a uniform probability distribution. Worked examples of how to find probabilities.

From playlist Probability Distributions
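
The description does not say whether the worked examples are discrete or continuous. For the continuous case, X ~ Uniform(a, b) has constant density 1/(b - a) on [a, b], so P(c ≤ X ≤ d) is the length of the overlap of [c, d] with [a, b], divided by (b - a). A minimal Python sketch of that rule; the bus-waiting numbers are hypothetical:

```python
def uniform_prob(a: float, b: float, lo: float, hi: float) -> float:
    """P(lo <= X <= hi) for X ~ Uniform(a, b): overlap length divided by (b - a)."""
    if not a < b:
        raise ValueError("need a < b")
    overlap = max(0.0, min(hi, b) - max(lo, a))
    return overlap / (b - a)

# Hypothetical example: a bus arrives uniformly at random between 0 and 20 minutes.
print(uniform_prob(0, 20, 5, 10))   # 0.25
print(uniform_prob(0, 20, 15, 30))  # 0.25 (the part beyond 20 has probability 0)
```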

Stanford CS229: Machine Learning | Summer 2019 | Lecture 19 - Maximum Entropy and Calibration

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3m4pnSp Anand Avati Computer Science, PhD To follow along with the course schedule and syllabus, visit: http://cs229.stanford.edu/syllabus-summer2019.html

From playlist Stanford CS229: Machine Learning Course | Summer 2019 (Anand Avati)

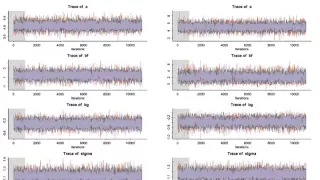

Statistical Rethinking Winter 2019 Lecture 11

Lecture 11 of the Dec 2018 through March 2019 edition of Statistical Rethinking: A Bayesian Course with R and Stan. Covers Chapters 10 and 11: maximum entropy, generalized linear models.

From playlist Statistical Rethinking Winter 2019
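
A basic instance of the maximum entropy idea: among all distributions on n outcomes with no further constraints, the uniform distribution has the largest entropy, ln n. The Python sketch below is only a numerical illustration of that fact (not code from the course, which uses R and Stan): it draws many random distributions on n = 5 outcomes and checks that none exceeds the uniform distribution's entropy.

```python
import math
import random

def entropy_nats(probs):
    """Shannon entropy in nats (natural log); zero-probability terms contribute 0."""
    return sum(-p * math.log(p) for p in probs if p > 0)

n = 5
uniform = [1.0 / n] * n
rng = random.Random(0)   # fixed seed so the run is reproducible
best_random = 0.0
for _ in range(10_000):
    weights = [rng.random() for _ in range(n)]
    total = sum(weights)
    candidate = [w / total for w in weights]   # a random distribution on n outcomes
    best_random = max(best_random, entropy_nats(candidate))

print(f"uniform: {entropy_nats(uniform):.4f}  ln(n): {math.log(n):.4f}  best random: {best_random:.4f}")
```

Adding constraints (for example a fixed mean) changes which distribution maximizes entropy; the unconstrained uniform case is just the simplest one to check.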

Statistical Rethinking - Lecture 12

Lecture 12 - MCMC / Maximum Entropy - Statistical Rethinking: A Bayesian Course with R Examples

From playlist Statistical Rethinking Winter 2015
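
As a generic illustration of MCMC (not the course's code), a random-walk Metropolis sampler proposes x' = x + noise and accepts it with probability min(1, p(x') / p(x)), which only requires p up to a normalizing constant. A minimal Python sketch, assuming a standard normal as a hypothetical target:

```python
import math
import random

def log_target(x: float) -> float:
    """Unnormalized log density of the illustrative target: a standard normal."""
    return -0.5 * x * x

def metropolis(log_p, n_samples: int, step: float = 1.0, x0: float = 0.0, seed: int = 1):
    """Random-walk Metropolis with Gaussian proposals of standard deviation `step`."""
    rng = random.Random(seed)
    x, lp = x0, log_p(x0)
    samples = []
    for _ in range(n_samples):
        proposal = x + rng.gauss(0.0, step)
        lp_new = log_p(proposal)
        # Accept with probability min(1, p(proposal)/p(x)), compared in log space.
        # 1 - random() lies in (0, 1], so the log is always defined.
        if math.log(1.0 - rng.random()) < lp_new - lp:
            x, lp = proposal, lp_new
        samples.append(x)   # a rejected proposal repeats the current state
    return samples

draws = metropolis(log_target, 50_000)[5_000:]   # drop burn-in
mean = sum(draws) / len(draws)
var = sum((d - mean) ** 2 for d in draws) / len(draws)
print(f"mean ~ {mean:.3f}, variance ~ {var:.3f}")   # should be near 0 and 1
```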

Stanford CS330: Multi-Task and Meta-Learning, 2019 | Lecture 11 - Sergey Levine (UC Berkeley)

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai Sergey Levine (UC Berkeley) Guest Lecture in Stanford CS330 http://cs330.stanford.edu/

From playlist Stanford CS330: Deep Multi-Task and Meta Learning

Information Theory Made Easy (La théorie de l'information sans peine) - Bourbaphy - 17/11/18

Olivier Rioul (Telecom Paris Tech) / 17.11.2018 Information theory made easy. You can join us on social media to follow our news. Facebook: https://www.facebook.com/InstitutHenriPoincare/ Twitter: https://twitter.com

From playlist Bourbaphy - 17/11/18 - Information

Maximum Entropy Models for Texture Synthesis - Leclaire - Workshop 2 - CEB T1 2019

Arthur Leclaire (Univ. Bordeaux) / 14.03.2019 Maximum Entropy Models for Texture Synthesis. The problem of exemplar-based texture synthesis consists of producing an image that has the same perceptual aspect as a given texture sample. It can be formulated as sampling an image which is 'a

From playlist 2019 - T1 - The Mathematics of Imaging

Optimal Mixing of Glauber Dynamics: Entropy Factorization via High-Dimensional Expansion - Zongchen Chen

Computer Science/Discrete Mathematics Seminar I Topic: Optimal Mixing of Glauber Dynamics: Entropy Factorization via High-Dimensional Expansion Speaker: Zongchen Chen Affiliation: Georgia Institute of Technology Date: February 22, 2021 For more video please visit http://video.ias.edu

From playlist Mathematics
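
Glauber dynamics is the Markov chain that repeatedly picks a uniformly random site and resamples its value from the conditional distribution given everything else; the talk concerns how fast such chains mix. A minimal Python sketch on the 1D Ising model on a ring, a hypothetical model choice not taken from the talk, where the conditional probability of spin +1 is 1 / (1 + exp(-2βh)) with h the sum of the two neighbouring spins. For a long chain the equilibrium nearest-neighbour correlation is tanh(β), which gives a simple sanity check.

```python
import math
import random

def glauber_ising_ring(n: int, beta: float, sweeps: int, seed: int = 0) -> float:
    """Glauber (heat-bath) dynamics for the 1D Ising model on a ring of n spins.

    Each update picks a uniformly random site and resamples its spin from its
    conditional distribution given its two neighbours. Returns the average
    nearest-neighbour correlation s_i * s_{i+1}, measured once per sweep
    after discarding the first 10% of sweeps as burn-in.
    """
    rng = random.Random(seed)
    spins = [rng.choice((-1, 1)) for _ in range(n)]
    burn_in = sweeps // 10
    total, count = 0.0, 0
    for sweep in range(sweeps):
        for _ in range(n):
            i = rng.randrange(n)
            h = spins[i - 1] + spins[(i + 1) % n]   # local field; index -1 wraps around
            p_up = 1.0 / (1.0 + math.exp(-2.0 * beta * h))
            spins[i] = 1 if rng.random() < p_up else -1
        if sweep >= burn_in:
            total += sum(spins[j] * spins[(j + 1) % n] for j in range(n)) / n
            count += 1
    return total / count

beta = 0.5
corr = glauber_ising_ring(n=50, beta=beta, sweeps=4000)
print(f"simulated <s_i s_i+1> = {corr:.3f}, tanh(beta) = {math.tanh(beta):.3f}")
```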

Nexus Trimester - László Csirmaz (Central European University, Budapest) 2/3

Geometry of the entropy region László Csirmaz (Central European University, Budapest) February 16, 2016 Abstract: A three-lecture series covering some recent research on the geometry of the entropy region. The lectures will cover: 1) Shannon inequalities; the case of one, two and three va

From playlist Nexus Trimester - 2016 - Fundamental Inequalities and Lower Bounds Theme
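
In the two-variable case, the basic Shannon inequalities say that H(X), H(Y|X) = H(X,Y) - H(X) and the mutual information I(X;Y) = H(X) + H(Y) - H(X,Y) are all non-negative. A minimal Python sketch that checks them on a made-up joint distribution of two binary variables:

```python
import math
from collections import defaultdict

def H(dist):
    """Shannon entropy in bits of a dict mapping outcomes to probabilities."""
    return sum(-p * math.log2(p) for p in dist.values() if p > 0)

def marginal(joint, axis):
    """Marginal distribution of coordinate `axis` of a joint dict keyed by tuples."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[outcome[axis]] += p
    return dict(out)

# Illustrative joint distribution of (X, Y), made up for this example.
joint = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.1, (1, 1): 0.4}
hx, hy, hxy = H(marginal(joint, 0)), H(marginal(joint, 1)), H(joint)
cond_y_given_x = hxy - hx          # H(Y|X)
mutual_info = hx + hy - hxy        # I(X;Y)
print(f"H(X)={hx:.4f} H(Y)={hy:.4f} H(X,Y)={hxy:.4f} "
      f"H(Y|X)={cond_y_given_x:.4f} I(X;Y)={mutual_info:.4f}")
```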

Statistical Mechanics Lecture 3

(April 15, 2013) Leonard Susskind begins the derivation of the distribution of energy states that represents maximum entropy in a system at equilibrium. Originally presented in the Stanford Continuing Studies Program. Stanford University: http://www.stanford.edu/ Continuing Studies P

From playlist Course | Statistical Mechanics
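
The standard outcome of maximizing entropy subject to fixed total probability and fixed mean energy is the Boltzmann distribution p_i = exp(-β E_i) / Z, where β is the Lagrange multiplier for the energy constraint and Z = Σ exp(-β E_i). The Python sketch below does not reproduce the lecture's derivation; it only checks the result numerically on a hypothetical three-level system, comparing the Boltzmann distribution with competitors that have the same normalization and the same mean energy.

```python
import math

def boltzmann(energies, beta):
    """p_i = exp(-beta * E_i) / Z, with Z the partition function."""
    weights = [math.exp(-beta * e) for e in energies]
    z = sum(weights)
    return [w / z for w in weights]

def entropy(probs):
    """Gibbs/Shannon entropy in units of k_B: -sum p ln p."""
    return sum(-p * math.log(p) for p in probs if p > 0)

energies = [0.0, 1.0, 2.0]   # hypothetical three-level system, arbitrary energy units
p = boltzmann(energies, beta=1.0)

# d sums to 0 and sum(d_i * E_i) = 0, so p + eps * d keeps both the normalization
# and the mean energy of p: a competing distribution under the same constraints.
d = [1.0, -2.0, 1.0]
for eps in (-0.05, 0.05):
    q = [pi + eps * di for pi, di in zip(p, d)]   # all entries stay positive here
    print(eps, entropy(q) < entropy(p))           # True: Boltzmann has the larger entropy
```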

What is Geometric Entropy, and Does it Really Increase? - Jozsef Beck

Jozsef Beck Rutgers, The State University of New Jersey April 9, 2013 We all know Shannon's entropy of a discrete probability distribution. Physicists define entropy in thermodynamics and in statistical mechanics (there are several competing schools), and want to prove the Second Law, but

From playlist Mathematics