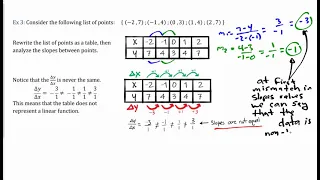

Define linear functions. Use function notation to evaluate linear functions. Learn to identify linear functions from data, graphs, and equations.

From playlist Algebra 1

Define a linear function. Determine if a linear function is increasing or decreasing. Interpret linear function models. Determine linear functions. Site: http://mathispower4u.com

From playlist Introduction to Functions: Function Basics

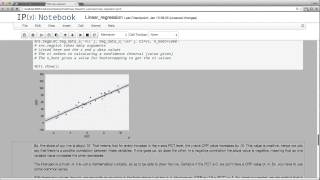

Linear regression is used to compare sets or pairs of numerical data points. We use it to find a correlation between variables.

From playlist Learning medical statistics with python and Jupyter notebooks

(ML 9.2) Linear regression - Definition & Motivation

Linear regression arises naturally from a sequence of simple choices: discriminative model, Gaussian distributions, and linear functions. A playlist of these Machine Learning videos is available here: http://www.youtube.com/view_play_list?p=D0F06AA0D2E8FFBA

From playlist Machine Learning

Introduction to Linear Functions and Slope (L10.1)

This lesson introduces linear functions, describes the behavior of linear functions, and explains how to determine the slope of a line given two points. Video content created by Jenifer Bohart, William Meacham, Judy Sutor, and Donna Guhse from SCC (CC-BY 4.0)

From playlist Introduction to Functions: Function Basics

Linear regression (4): Nonlinear features

Extending our class of regressors through additional features

From playlist cs273a

What is the parent function of a linear graph

👉 Learn about graphing linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1, i.e., the variables in a linear equation are raised to no power other than 1. The graph of a linear equation is a straight line. To graph a linear equation, we identify two values (x-valu

From playlist ⚡️Graph Linear Equations | Learn About

Artificial Intelligence & Machine Learning 6 - Non Linear Features | Stanford CS221: AI(Autumn 2021)

For more information about Stanford's Artificial Intelligence professional and graduate programs visit: https://stanford.io/ai Associate Professor Percy Liang Associate Professor of Computer Science and Statistics (courtesy) https://profiles.stanford.edu/percy-liang Assistant Professor

From playlist Stanford CS221: Artificial Intelligence: Principles and Techniques | Autumn 2021

Stanford EE104: Introduction to Machine Learning | 2020 | Lecture 3 - predictors

Professor Sanjay Lall Electrical Engineering To follow along with the course schedule and syllabus, visit: http://ee104.stanford.edu To view all online courses and programs offered by Stanford, visit: https://online.stanford.edu/

From playlist Stanford EE104: Introduction to Machine Learning Full Course

Stanford EE104: Introduction to Machine Learning | 2020 | Lecture 6 - empirical risk minimization

Professor Sanjay Lall Electrical Engineering To follow along with the course schedule and syllabus, visit: http://ee104.stanford.edu To view all online courses and programs offered by Stanford, visit: https://online.stanford.edu/ 0:00 Introduction 0:26 Parametrized predictors 3:09 Tra

From playlist Stanford EE104: Introduction to Machine Learning Full Course

10c Machine Learning: Polynomial Regression

Lecture on polynomial regression, including an intuitive alternative interpretation, basis expansion concepts and orthogonal basis through Hermite polynomials. Follow along with the demonstration workflow: https://github.com/GeostatsGuy/PythonNumericalDemos/blob/master/SubsurfaceDataAnaly

From playlist Machine Learning

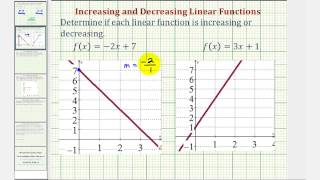

Ex: Determine if a Linear Function is Increasing or Decreasing

This video explains how to determine if a linear function is increasing or decreasing. The results are discussed graphically. Site: http://mathispower4u.com

From playlist Introduction to Functions: Function Basics
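As a minimal sketch of the idea in the entry above: a linear function f(x) = mx + b is increasing when its slope m is positive, decreasing when m is negative, and constant when m is zero. The helper names `slope` and `classify_linear` are hypothetical, chosen for illustration only, and are not taken from the video.

```python
def slope(p1, p2):
    """Return the slope of the line through two points (x1, y1) and (x2, y2)."""
    (x1, y1), (x2, y2) = p1, p2
    if x1 == x2:
        # A vertical line is not a function of x, so its slope is undefined.
        raise ValueError("vertical line: slope is undefined")
    return (y2 - y1) / (x2 - x1)


def classify_linear(m):
    """Classify a linear function by the sign of its slope m."""
    if m > 0:
        return "increasing"
    if m < 0:
        return "decreasing"
    return "constant"


if __name__ == "__main__":
    # Example points are made up for illustration.
    m = slope((0, 3), (3, 0))
    print(m, classify_linear(m))
```

Graphically, the sign of the slope corresponds to the line rising or falling as x moves from left to right.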

Gaël Varoquaux - Supervised Learning with Missing Values

Some data come with missing values. For instance, a survey’s participant may ignore some questions. There is an abundant statistical literature on this topic, establishing for instance how to fit models without biases due to the missingness, and imputation strategies to provide practical so

From playlist Journée statistique & informatique pour la science des données à Paris-Saclay 2021

Stanford EE104: Introduction to Machine Learning | 2020 | Lecture 8 - non quadratic losses

Professor Sanjay Lall Electrical Engineering To follow along with the course schedule and syllabus, visit: http://ee104.stanford.edu To view all online courses and programs offered by Stanford, visit: https://online.stanford.edu/

From playlist Stanford EE104: Introduction to Machine Learning Full Course

Multi-group fairness, loss minimization and indistinguishability - Parikshit Gopalan

Computer Science/Discrete Mathematics Seminar II Topic: Multi-group fairness, loss minimization and indistinguishability Speaker: Parikshit Gopalan Affiliation: VMware Research Date: April 12, 2022 Training a predictor to minimize a loss function fixed in advance is the dominant paradigm

From playlist Mathematics

Spotlight Talks Pt2 - Zhifeng Kong, Daniel Paul Kunin, Omar Montasser

Workshop on Theory of Deep Learning: Where next? Topic: Spotlight Talks Pt2 Speaker: Zhifeng Kong, Daniel Paul Kunin, Omar Montasser Date: October 18, 2019 For more videos, please visit http://video.ias.edu

From playlist Mathematics

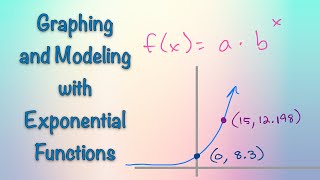

Applying Exponential Models // Math Minute [#34] [ALGEBRA]

Exponential functions work a lot like linear functions. There are typically two parameters that guide the use of the exponential function: the initial value (like the y-intercept of a linear function) and the factor of growth (like the slope of a linear function). There are some additional

From playlist Math Minutes
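The parallel drawn in the entry above can be sketched in a few lines, assuming the common forms f(x) = b + mx for a linear model and g(x) = a · r^x for an exponential model. The function names, parameter names, and numbers below are hypothetical and serve only as illustration.

```python
def linear_model(initial, slope, x):
    """Linear model: start at `initial` and add `slope` for each unit of x."""
    return initial + slope * x


def exponential_model(initial, factor, x):
    """Exponential model: start at `initial` and multiply by `factor` for each unit of x."""
    return initial * factor ** x


if __name__ == "__main__":
    # Both models share the same starting value at x = 0; they diverge
    # because one adds a fixed amount and the other multiplies by a fixed factor.
    for x in range(4):
        print(x, linear_model(100, 2, x), exponential_model(100, 2, x))
```

In both models the first parameter is the value at x = 0; the second controls the rate of change, additively for the linear model and multiplicatively for the exponential one.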

Artificial Intelligence & Machine Learning 2 - Linear Regression | Stanford CS221: AI (Autumn 2021)

For more information about Stanford's Artificial Intelligence professional and graduate programs visit: https://stanford.io/ai Associate Professor Percy Liang Associate Professor of Computer Science and Statistics (courtesy) https://profiles.stanford.edu/percy-liang Assistant Professor

From playlist Stanford CS221: Artificial Intelligence: Principles and Techniques | Autumn 2021