R & Python - Latent Semantic Analysis

Lecturer: Dr. Erin M. Buchanan Summer 2020 https://www.patreon.com/statisticsofdoom This video is part of my human language modeling class; this video set covers the updated version with both R and Python. This video is the start of a section on vector space models. First up is latent

From playlist Human Language (ANLY 540)

Lecturer: Dr. Erin M. Buchanan Summer 2019 https://www.patreon.com/statisticsofdoom This video is part of my human language modeling class. This video covers latent semantic analysis as a way to move from collocations to vector space models. You will learn about vector space models and how to

From playlist Human Language (ANLY 540)
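The two LSA lecture descriptions above are truncated and include no code. As a rough illustration only, here is a minimal Python sketch of the core LSA step: build a term-by-document count matrix, take its singular value decomposition, and keep the top k dimensions. The toy corpus, the helper names, and the use of raw counts are assumptions for illustration; many LSA pipelines first apply a weighting such as tf-idf or log-entropy, and the lectures may do so.

```python
import numpy as np

# Hypothetical toy corpus, for illustration only.
docs = [
    "human computer interface",
    "computer system user interface",
    "user system response time",
    "graph trees",
    "graph minors trees",
]

def term_document_matrix(docs):
    """Raw-count term-by-document matrix: rows are terms, columns are documents."""
    vocab = sorted({w for d in docs for w in d.split()})
    index = {w: i for i, w in enumerate(vocab)}
    X = np.zeros((len(vocab), len(docs)))
    for j, d in enumerate(docs):
        for w in d.split():
            X[index[w], j] += 1
    return vocab, X

def lsa(X, k):
    """Truncated SVD: keep only the k largest singular values and vectors."""
    U, s, Vt = np.linalg.svd(X, full_matrices=False)
    term_vecs = U[:, :k] * s[:k]             # terms in the k-dim latent space
    doc_vecs = (np.diag(s[:k]) @ Vt[:k]).T   # documents in the k-dim latent space
    return term_vecs, doc_vecs

def cosine(a, b):
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

vocab, X = term_document_matrix(docs)
term_vecs, doc_vecs = lsa(X, k=2)

print("raw cosine, doc 0 vs doc 2:   ", cosine(X[:, 0], X[:, 2]))
print("latent cosine, doc 0 vs doc 2:", cosine(doc_vecs[0], doc_vecs[2]))
print("latent cosine, doc 0 vs doc 4:", cosine(doc_vecs[0], doc_vecs[4]))
```

Documents 0 and 2 share no terms, so their raw-count cosine is 0. In the reduced space they end up pointing the same way, because both share vocabulary with document 1. This indirect, co-occurrence-based similarity is what LSA is meant to capture. The graph documents stay orthogonal to them.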

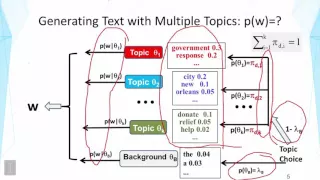

Applied Machine Learning 2019 - Lecture 18 - Topic Models

Latent Semantic Analysis, Non-negative Matrix Factorization for topic models, Latent Dirichlet Allocation, Markov Chain Monte Carlo, and Gibbs sampling. Class website with slides and more materials: https://www.cs.columbia.edu/~amueller/comsw4995s19/schedule/

From playlist Applied Machine Learning - Spring 2019
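The lecture lists Non-negative Matrix Factorization (NMF) as one route to topic models. A minimal sketch, assuming the Lee and Seung multiplicative updates for the squared Frobenius error, is shown below; the lecture may use a different solver (scikit-learn's `NMF` class, for example), and the toy matrix and term names are hypothetical.

```python
import numpy as np

def nmf(X, k, n_iter=1000, seed=0, eps=1e-10):
    """Approximate nonnegative X (docs x terms) as W @ H.

    W (docs x k) holds each document's topic weights; H (k x terms) holds
    each topic's term weights. Uses Lee & Seung multiplicative updates for
    the squared Frobenius error; starting from positive values, the updates
    keep W and H nonnegative.
    """
    rng = np.random.default_rng(seed)
    n_docs, n_terms = X.shape
    W = rng.random((n_docs, k)) + eps
    H = rng.random((k, n_terms)) + eps
    for _ in range(n_iter):
        # eps guards against division by zero in the update ratios
        H *= (W.T @ X) / (W.T @ W @ H + eps)
        W *= (X @ H.T) / (W @ H @ H.T + eps)
    return W, H

# Hypothetical document-term counts with two disjoint vocabularies.
terms = ["gene", "dna", "cell", "vote", "party", "election"]
X = np.array([
    [1, 1, 1, 0, 0, 0],
    [2, 2, 2, 0, 0, 0],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 3, 3, 3],
], dtype=float)

W, H = nmf(X, k=2)
for t, row in enumerate(H):
    top = [terms[i] for i in np.argsort(row)[::-1][:3]]  # 3 heaviest terms
    print(f"topic {t}: {top}")

rel_err = np.linalg.norm(X - W @ H) / np.linalg.norm(X)
print(f"relative reconstruction error: {rel_err:.4f}")
```

Reading the rows of `H` as topics (their highest-weighted terms) and the rows of `W` as per-document topic mixtures is the usual way NMF is interpreted as a topic model; the nonnegativity constraint is what keeps those weights interpretable, unlike the signed dimensions of LSA.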

Lecturer: Dr. Erin M. Buchanan Harrisburg University of Science and Technology Summer 2019 This video covers topic models and their comparison to other semantic vector space models, such as Latent Semantic Analysis. I cover what a topic model is, how to build one in R, and how to explor

From playlist Human Language (ANLY 540)

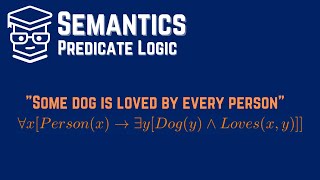

An Overview of Predicate Logic for Linguists - Semantics in Linguistics

This video covers predicate logic in semantics for linguistics. We talk about predicates, quantifiers (for all, for some), how to translate sentences into predicate logic, scope, bound variables, free variables, and assignment functions. Join this channel to get access to perks: https:/

From playlist Semantics in Linguistics
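The predicate logic video is described only in outline. To make the quantifier part concrete, here is a minimal Python sketch that evaluates two quantified sentences against a small hypothetical model: a domain of individuals plus a set giving each predicate's extension. The universal quantifier is modeled with `all` and the existential with `any` over the domain; the model, names, and sentences are illustrative assumptions, not taken from the video.

```python
# Hypothetical model: a domain and the extension (set of individuals) of each predicate.
domain = {"rex", "fido", "tom"}
dog = {"rex", "fido"}
cat = {"tom"}
barks = {"rex", "fido"}

def forall(pred):
    """For all x, pred(x): true iff pred holds of every individual in the domain."""
    return all(pred(x) for x in domain)

def exists(pred):
    """For some x, pred(x): true iff pred holds of at least one individual."""
    return any(pred(x) for x in domain)

# "Every dog barks": for all x (dog(x) -> barks(x)); the material
# conditional p -> q is written as (not p) or q.
every_dog_barks = forall(lambda x: (x not in dog) or (x in barks))

# "Some cat barks": for some x (cat(x) and barks(x)).
some_cat_barks = exists(lambda x: x in cat and x in barks)

print(every_dog_barks, some_cat_barks)
```

Because predicates are modeled as sets here, "every dog barks" comes out true exactly when `dog` is a subset of `barks`, which is the set-theoretic reading of the same sentence.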

VSM, LSA, & SVD | Introduction to Text Analytics with R Part 7

Part 7 of this video series includes specific coverage of: – The trade-offs of expanding the text analytics feature space with n-grams. – How bag-of-words representations map to the vector space model (VSM). – Usage of the dot product between document vectors as a proxy for correlation. –

From playlist Introduction to Text Analytics with R
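The Part 7 description mentions bag-of-words vectors in the vector space model and the dot product between document vectors. The series itself uses R; as an illustration only, here is a minimal Python sketch of those two ideas, plus cosine similarity (a length-normalized dot product, which the visible part of the description does not mention). The helper names and example sentences are hypothetical.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Map a document to term counts: a sparse vector in the VSM."""
    return Counter(text.lower().split())

def dot(a, b):
    # Only terms present in both documents contribute;
    # a Counter returns 0 for a missing term.
    return sum(count * b[term] for term, count in a.items())

def cosine(a, b):
    # Dividing by the vector lengths keeps long documents from
    # scoring higher just because they contain more words.
    na, nb = math.sqrt(dot(a, a)), math.sqrt(dot(b, b))
    return dot(a, b) / (na * nb) if na and nb else 0.0

d1 = bag_of_words("the cat sat on the mat")
d2 = bag_of_words("the cat ate the fish")
d3 = bag_of_words("stock prices fell sharply")

print(dot(d1, d2), dot(d1, d3))
print(round(cosine(d1, d2), 3), cosine(d1, d3))
```

Here `d1` and `d2` overlap on "the" (2 × 2) and "cat" (1 × 1), giving a dot product of 5, while `d3` shares no terms with `d1` and scores 0. Note that a frequent function word like "the" dominates the raw score, which is one reason text pipelines often down-weight such terms (e.g. with tf-idf) before comparing vectors.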

Simplified Machine Learning Workflows with Anton Antonov, Session #6: Semantic Analysis (Part 1)

Anton Antonov, a senior mathematical programmer with a PhD in applied mathematics, live-demos key Wolfram Language features that are very useful in machine learning. In this session, he discusses the Latent Semantic Analysis Workflows. Notebook materials are available at: https://wolfr.am

From playlist Simplified Machine Learning Workflows with Anton Antonov

Tristan Bepler - Detection of objects in cryo-electron micrographs using geometric deep learning

Recorded 16 November 2022. Tristan Bepler of the New York Structural Biology Center presents "Detection and segmentation of objects in cryo-electron micrographs using geometric deep learning" at IPAM's Cryo-Electron Microscopy and Beyond Workshop. Abstract: In this talk I will discuss mach

From playlist 2022 Cryo-Electron Microscopy and Beyond

Lecture 15C : Deep autoencoders for document retrieval and visualization

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 15C : Deep autoencoders for document retrieval and visualization

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

Lecture 15.3 — Deep autoencoders for document retrieval [Neural Networks for Machine Learning]

Lecture from the course Neural Networks for Machine Learning, as taught by Geoffrey Hinton (University of Toronto) on Coursera in 2012. Link to the course (login required): https://class.coursera.org/neuralnets-2012-001

From playlist [Coursera] Neural Networks for Machine Learning — Geoffrey Hinton

SICSS 2019 -- Dictionary-based text analysis

From playlist All Videos

Simplified Machine Learning Workflows with Anton Antonov, Session #8: Semantic Analysis (Part 3)

Anton Antonov, a senior mathematical programmer with a PhD in applied mathematics, live-demos key Wolfram Language features that are very useful in machine learning. In this session, part 3, he discusses the Latent Semantic Analysis Workflows. Notebook materials are available

From playlist Simplified Machine Learning Workflows with Anton Antonov

An Overview of Set Theory for Linguists - Semantics in Linguistics

I introduce set theory for linguists. This focuses on language more so than on the technical details, but it is enough to get you started in understanding semantics. Join this channel to get access to perks: https://www.youtube.com/channel/UCGYSfZbPp3BiAFs531PBY7g/join Instagram: http://i

From playlist Semantics in Linguistics

Data around us, like images and documents, are very high-dimensional. Autoencoders can learn a simpler representation of them. This representation can be used in many ways: - fast data transfers across a network - Self-driving cars (Semantic Segmentation) - Neural Inpainting: Completing sect

From playlist Algorithms and Concepts

Lecture 15/16 : Modeling hierarchical structure with neural nets

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013]: 15A From Principal Components Analysis to Autoencoders; 15B Deep Autoencoders; 15C Deep autoencoders for document retrieval and visualization; 15D Semantic hashing; 15E Learning binary codes for image retrieval; 15F Shallo

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

From playlist Exploratory Data Analysis