Anthony Nouy: Adaptive low-rank approximations for stochastic and parametric equations [...]

Find this video and other talks given by worldwide mathematicians on CIRM's Audiovisual Mathematics Library: http://library.cirm-math.fr. And discover all its functionalities: - Chapter markers and keywords to watch the parts of your choice in the video - Videos enriched with abstracts, b

From playlist Numerical Analysis and Scientific Computing

Approximating Functions in a Metric Space

Approximations are common in many areas of mathematics, from Taylor series to machine learning. In this video, we will define what is meant by a best approximation and prove that a best approximation exists in a metric space. Chapters 0:00 - Examples of Approximation 0:46 - Best Approximati

From playlist Approximation Theory

Polynomial approximations -- Calculus II

This lecture is on Calculus II. It follows Part II of the book Calculus Illustrated by Peter Saveliev. The text of the book can be found at http://calculus123.com.

From playlist Calculus II

Low rank approximations: Barack Obama vs. Donald Trump

This is part of an online course on digital image processing in MATLAB. More info here: https://www.udemy.com/improcMATLAB/?couponCode=MXC-DISC4ALL Images and code: sincxpress.com/SVDpresidents.zip More courses on scientific programming, signal and image processing, and related topics:

From playlist Image processing in MATLAB

Math 060 Linear Algebra 34 120814: Singular Value Decomposition and Low-rank Approximation (1/2)

Use of the Singular Value Decomposition: best low-rank approximation of a matrix. Frobenius norm not affected by orthogonal matrices; distance of a matrix to the space of matrices of rank k or lower; construction of a matrix that achieves that distance, using the SVD.

From playlist Course 4: Linear Algebra
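
The facts listed in the Math 060 description above can be checked numerically. The following is a minimal sketch, not code from the lecture: it assumes NumPy is available, and the matrix size, the seed, and the helper name `best_rank_k` are illustrative choices. It builds the rank-k truncation from the SVD, compares its Frobenius distance to the original matrix with the square root of the sum of the discarded squared singular values, and confirms that multiplying by an orthogonal matrix leaves the Frobenius norm unchanged.

```python
import numpy as np

def best_rank_k(A, k):
    """Return the rank-k truncation of A built from its SVD (hypothetical helper)."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    # Keep the k largest singular values and the matching singular vectors.
    return (U[:, :k] * s[:k]) @ Vt[:k, :]

rng = np.random.default_rng(0)       # fixed seed, arbitrary choice
A = rng.standard_normal((6, 5))      # small random test matrix (assumption)
k = 2

A_k = best_rank_k(A, k)
s = np.linalg.svd(A, compute_uv=False)

# Frobenius distance from A to its rank-k truncation.
err = np.linalg.norm(A - A_k, "fro")
# Square root of the sum of the discarded squared singular values.
tail = np.sqrt(np.sum(s[k:] ** 2))

# Orthogonal invariance: Q is orthogonal, so ||QA||_F equals ||A||_F.
Q, _ = np.linalg.qr(rng.standard_normal((6, 6)))
norm_A = np.linalg.norm(A, "fro")
norm_QA = np.linalg.norm(Q @ A, "fro")

print(err, tail)
print(norm_A, norm_QA)
```

The two numbers on each printed line should agree up to floating-point round-off.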

Error bounds for Taylor approximations -- Calculus II

This lecture is on Calculus II. It follows Part II of the book Calculus Illustrated by Peter Saveliev. The text of the book can be found at http://calculus123.com.

From playlist Calculus II

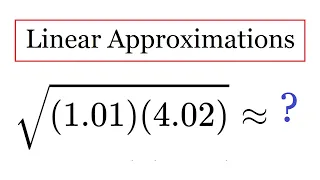

Linear Approximations and Differentials

Linear Approximation In this video, I explain the concept of a linear approximation, which is just a way of approximating a function of several variables by its tangent planes, and I illustrate this by approximating complicated numbers f without using a calculator. Enjoy! Subscribe to my

From playlist Partial Derivatives

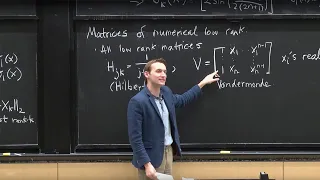

Lecture 17: Rapidly Decreasing Singular Values

MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018 Instructor: Alex Townsend View the complete course: https://ocw.mit.edu/18-065S18 YouTube Playlist: https://www.youtube.com/playlist?list=PLUl4u3cNGP63oMNUHXqIUcrkS2PivhN3k Professor Alex Town

From playlist MIT 18.065 Matrix Methods in Data Analysis, Signal Processing, and Machine Learning, Spring 2018

Relative Error Tensor Low Rank Approximation by David Woodruff

Statistical Physics Methods in Machine Learning. DATE: 26 December 2017 to 30 December 2017. VENUE: Ramanujan Lecture Hall, ICTS, Bengaluru. The theme of this Discussion Meeting is the analysis of distributed/networked algorithms in machine learning and theoretical computer science in the "th

From playlist Statistical Physics Methods in Machine Learning

Ye Ke (7/29/22): Geometry of convergence analysis for low rank partially orthogonal tensor approx.

Abstract: Low rank partially orthogonal tensor approximation (LRPOTA) is an important problem in tensor computations and their applications (PCA, ICA, signal processing, data mining, latent variable model, dictionary learning, etc.). It includes Low rank orthogonal tensor approximation (LR

From playlist Applied Geometry for Data Sciences 2022

Linformer: Self-Attention with Linear Complexity (Paper Explained)

Transformers are notoriously resource-intensive because their self-attention mechanism requires memory and computation that grow quadratically with the length of the input sequence. The Linformer model gets around that by using the fact that often, the actual information in the attention matrix

From playlist Papers Explained

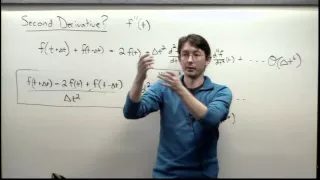

Lecture: Higher-order Accuracy Schemes for Differentiation and Integration

The accuracy of the differentiation approximations is considered and new schemes are developed to lower the error. Integration is also introduced as a numerical algorithm.

From playlist Beginning Scientific Computing
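
As an illustration of what "higher-order accuracy" means for differentiation, here is a minimal sketch. The lecture's own schemes are not given in the description, so the three standard finite-difference formulas below, the test function `sin`, the point `x = 1.0`, and the step `h = 1e-2` are all assumptions chosen for the example. It compares a first-order forward difference, a second-order central difference, and a fourth-order central difference against the exact derivative.

```python
import math

def forward_diff(f, x, h):
    """Forward difference, truncation error O(h)."""
    return (f(x + h) - f(x)) / h

def central_diff(f, x, h):
    """Central difference, truncation error O(h^2)."""
    return (f(x + h) - f(x - h)) / (2 * h)

def central_diff_4(f, x, h):
    """Five-point central difference, truncation error O(h^4)."""
    return (-f(x + 2 * h) + 8 * f(x + h) - 8 * f(x - h) + f(x - 2 * h)) / (12 * h)

x, h = 1.0, 1e-2          # evaluation point and step size (illustrative)
exact = math.cos(x)       # exact derivative of sin at x

schemes = {
    "forward": forward_diff,
    "central": central_diff,
    "fourth_order": central_diff_4,
}
# Absolute error of each scheme for the same step size h.
errors = {name: abs(fn(math.sin, x, h) - exact) for name, fn in schemes.items()}

for name, e in errors.items():
    print(f"{name:>12}: error = {e:.3e}")
```

For a fixed step size, the error should shrink as the order of the scheme increases, until round-off error starts to dominate at very small `h`.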

Ankur Moitra : Tensor Decompositions and their Applications

Recording during the thematic meeting «Nexus of Information and Computation Theories» on January 27, 2016, at the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Guillaume Hennenfent

From playlist Nexus Trimester - 2016 -Tutorial Week at CIRM

8ECM Invited Lecture: Daniel Kressner

From playlist 8ECM Invited Lectures

Time Integration of Tree Tensor Networks

Professor Christian Lubich University of Tübingen, Germany

From playlist Distinguished Visitors Lecture Series

NIPS 2011 Sparse Representation & Low-rank Approximation Workshop: Fast & Memory...

Sparse Representation and Low-rank Approximation Workshop at NIPS 2011 Invited Talk: Fast and Memory-efficient Low Rank Approximation of Massive Graphs by Inderjit Dhillon, University of Texas at Austin Abstract: Social network analysis requires us to perform a variety of analysis ta

From playlist NIPS 2011 Sparse Representation & Low-rank Approx Workshop

DDPS | CUR Matrix Decomposition for Scalable Reduced-Order Modeling

CUR Matrix Decomposition for Scalable Reduced-Order Modeling of Nonlinear Partial Differential Equations using Time-Dependent Bases Description: Dynamical low-rank approximation (DLRA) of nonlinear matrix/tensor differential equations has proven successful in solving high-dimensional part

From playlist Data-driven Physical Simulations (DDPS) Seminar Series

A-Level Maths E2-01 Small-Angle Approximation: Geometrical Derivation

Navigate all of my videos at https://sites.google.com/site/tlmaths314/ Like my Facebook Page: https://www.facebook.com/TLMaths-1943955188961592/ to keep updated Follow me on Instagram here: https://www.instagram.com/tlmaths/ My LIVE Google Doc has the new A-Level Maths specification and

From playlist A-Level Maths E2: Small-Angle Approximation