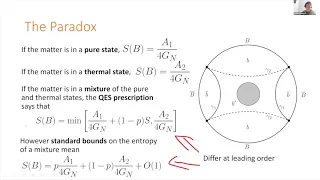


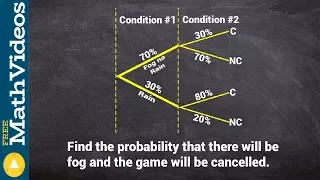

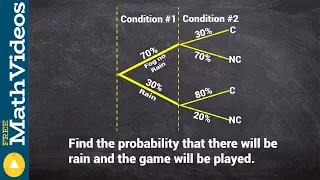

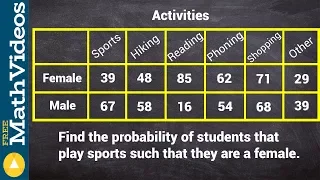

Conditional entropy

In information theory, the conditional entropy quantifies the amount of information needed to describe the outcome of a random variable $Y$ given that the value of another random variable $X$ is known. Here, information is measured in shannons, nats, or hartleys. The entropy of $Y$ conditioned on $X$ is written as $H(Y \mid X)$ (Wikipedia).
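
To make the definition concrete, the following is a minimal sketch that assumes discrete random variables and uses the standard formula $H(Y \mid X) = -\sum_{x,y} p(x,y) \log \frac{p(x,y)}{p(x)}$. The function name `conditional_entropy`, the dictionary layout of the joint distribution, and the example probabilities are illustrative assumptions, not taken from the source. The logarithm base selects the unit: 2 for shannons (bits), $e$ for nats, 10 for hartleys.

```python
import math

def conditional_entropy(joint, base=2.0):
    """Return H(Y|X) for a joint distribution given as {(x, y): p(x, y)}.

    Hypothetical helper for illustration; `base` selects the unit
    (2 -> shannons, math.e -> nats, 10 -> hartleys).
    """
    # Marginal p(x), obtained by summing the joint distribution over y.
    p_x = {}
    for (x, _y), p in joint.items():
        p_x[x] = p_x.get(x, 0.0) + p

    h = 0.0
    for (x, _y), p in joint.items():
        if p > 0.0:  # terms with p(x, y) = 0 contribute nothing
            h -= p * math.log(p / p_x[x], base)
    return h

# Assumed example: X is a fair bit. When X = 0, Y is always 0;
# when X = 1, Y is a fair coin flip.
joint = {(0, 0): 0.5, (1, 0): 0.25, (1, 1): 0.25}

h_shannons = conditional_entropy(joint)               # base 2
h_nats = conditional_entropy(joint, base=math.e)      # natural log
h_hartleys = conditional_entropy(joint, base=10.0)    # base 10
print(h_shannons, h_nats, h_hartleys)
```

In this example, knowing $X = 0$ leaves no uncertainty about $Y$, while knowing $X = 1$ leaves one full bit, so the average remaining uncertainty is half a shannon. The same quantity expressed in nats or hartleys differs only by a constant factor.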