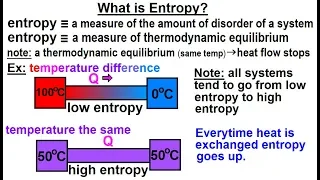

Physics - Thermodynamics 2: Ch 32.7 Thermo Potential (10 of 25) What is Entropy?

Visit http://ilectureonline.com for more math and science lectures! In this video I explain and give examples of what entropy is. 1) Entropy is a measure of the amount of disorder (randomness) of a system. 2) Entropy is a measure of thermodynamic equilibrium. Low entropy implies heat flow t

From playlist PHYSICS 32.7 THERMODYNAMIC POTENTIALS

Topics in Combinatorics lecture 10.0 --- The formula for entropy

In this video I present the formula for the entropy of a random variable that takes values in a finite set, prove that it satisfies the entropy axioms, and prove that it is the only formula that satisfies the entropy axioms. 0:00 The formula for entropy and proof that it satisfies the ax

From playlist Topics in Combinatorics (Cambridge Part III course)
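
The formula presented in this lecture is the Shannon entropy $H(X) = -\sum_x p(x)\log p(x)$ of a random variable taking values in a finite set. Below is a minimal Python sketch. It assumes the distribution is given as a list of probabilities and uses the convention $0 \log 0 = 0$. The function name `shannon_entropy` is our own choice, not taken from the lecture.

```python
import math
from typing import Sequence


def shannon_entropy(probs: Sequence[float], base: float = 2.0) -> float:
    """H(X) = sum_x p(x) log(1 / p(x)), skipping p(x) = 0 (the 0 log 0 = 0 convention)."""
    if any(p < 0 for p in probs):
        raise ValueError("probabilities must be non-negative")
    if not math.isclose(sum(probs), 1.0, abs_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    return sum(p * math.log(1.0 / p, base) for p in probs if p > 0)


print(shannon_entropy([0.25, 0.25, 0.25, 0.25]))  # uniform on 4 outcomes: log2(4) = 2 bits
print(shannon_entropy([1.0, 0.0]))                # deterministic variable: 0 bits
print(shannon_entropy([0.5, 0.5], base=math.e))   # fair coin measured in nats: ln 2
```

The uniform distribution attains the maximum value $\log_2 n$ for $n$ outcomes. A deterministic variable has zero entropy.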

Entropy production during free expansion of an ideal gas by Subhadip Chakraborti

Abstract: According to the second law, the entropy of an isolated system increases during its evolution from one equilibrium state to another. The free expansion of a gas, on removal of a partition in a box, is an example where we expect to see such an increase of entropy. The constructi

From playlist Seminar Series
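
For an ideal gas, the entropy increase in free expansion can be computed without following the irreversible process itself. Since $Q = 0$ and $W = 0$, the internal energy, and hence the temperature, is unchanged. Because entropy is a state function, $\Delta S$ can be evaluated along a reversible isothermal path between the same end states, giving $\Delta S = nR\ln(V_f/V_i)$.

The sketch below applies these textbook assumptions; it is not the construction used in the seminar. The value of `R` is the CODATA molar gas constant.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)


def free_expansion_entropy(n_mol: float, v_initial: float, v_final: float) -> float:
    """Entropy change (J/K) of an ideal gas in free expansion from v_initial to v_final.

    Q = 0 and W = 0, so T is unchanged for an ideal gas; Delta S is evaluated
    along a reversible isothermal path between the same end states.
    """
    if n_mol <= 0 or v_initial <= 0 or v_final <= 0:
        raise ValueError("amount of gas and volumes must be positive")
    return n_mol * R * math.log(v_final / v_initial)


# One mole of gas expanding into a vacuum that doubles its volume.
print(f"{free_expansion_entropy(1.0, 1.0, 2.0):.3f} J/K")
```

Doubling the volume of one mole gives $R\ln 2 \approx 5.76$ J/K. The value is positive, as the second law requires for an isolated system.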

Entropy is often taught as a measure of how disordered or how mixed up a system is, but this definition never really sat right with me. How is "disorder" defined and why is one way of arranging things any more disordered than another? It wasn't until much later in my physics career that I

From playlist Thermal Physics/Statistical Physics

Physics 32.5 Statistical Thermodynamics (15 of 39) Definition of Entropy of a Microstate

Visit http://ilectureonline.com for more math and science lectures! To donate: http://www.ilectureonline.com/donate https://www.patreon.com/user?u=3236071 In this video I will match entropy and thermodynamic probability in statistical thermodynamics. Next video in the polar coordinates
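
In statistical thermodynamics, Boltzmann's formula $S = k_B \ln W$ ties the entropy of a macrostate to its thermodynamic probability $W$, the number of microstates that realize it. The Python sketch below uses a hypothetical two-state (spin up/down) toy model in which $W = \binom{N}{n}$. The model and the function names are our own illustration, not taken from the lecture.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def boltzmann_entropy(num_microstates: int) -> float:
    """S = k_B ln W for a macrostate realized by W microstates."""
    if num_microstates < 1:
        raise ValueError("W must be at least 1")
    return K_B * math.log(num_microstates)


def two_state_microstates(n_total: int, n_up: int) -> int:
    """Hypothetical toy model: ways to choose n_up 'up' spins out of n_total."""
    return math.comb(n_total, n_up)


for n_up in (0, 10, 50):
    w = two_state_microstates(100, n_up)
    print(f"n_up={n_up:3d}  W={w}  S={boltzmann_entropy(w):.3e} J/K")
```

Because the logarithm turns products into sums, $S$ is additive over independent subsystems: $W = W_1 W_2$ gives $S = S_1 + S_2$. The evenly split macrostate (50 of 100 spins up) has the most microstates, and therefore the largest entropy.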

Maxwell-Boltzmann distribution

Entropy and the Maxwell-Boltzmann velocity distribution. Also discusses why this is different than the Bose-Einstein and Fermi-Dirac energy distributions for quantum particles. My Patreon page is at https://www.patreon.com/EugeneK 00:00 Maxwell-Boltzmann distribution 02:45 Higher Temper

From playlist Physics
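
For an ideal classical gas of particles of mass $m$ at temperature $T$, the Maxwell-Boltzmann speed distribution is

$$f(v) = 4\pi\left(\frac{m}{2\pi k_B T}\right)^{3/2} v^2 e^{-mv^2/(2k_B T)}.$$

Its most probable speed is $v_p = \sqrt{2k_BT/m}$ and its mean speed is $\bar v = \sqrt{8k_BT/(\pi m)}$. The Python sketch below evaluates $f(v)$ and checks the normalization and the mean numerically with a simple trapezoidal rule. The choice of N₂ at 300 K and the helper names are illustrative assumptions, not taken from the video.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K
AMU = 1.66053906660e-27  # atomic mass unit, kg


def mb_speed_pdf(v: float, mass: float, temperature: float) -> float:
    """Maxwell-Boltzmann speed density f(v), in s/m."""
    a = mass / (2.0 * K_B * temperature)
    return 4.0 * math.pi * (a / math.pi) ** 1.5 * v * v * math.exp(-a * v * v)


def trapezoid(f, lo: float, hi: float, n: int = 20000) -> float:
    """Composite trapezoidal rule for the integral of f over [lo, hi]."""
    h = (hi - lo) / n
    total = 0.5 * (f(lo) + f(hi))
    for i in range(1, n):
        total += f(lo + i * h)
    return total * h


mass = 28.0 * AMU  # illustrative: an N2 molecule
temp = 300.0       # K
v_p = math.sqrt(2.0 * K_B * temp / mass)  # most probable speed
upper = 10.0 * v_p                        # the tail beyond this is negligible

norm = trapezoid(lambda v: mb_speed_pdf(v, mass, temp), 0.0, upper)
mean = trapezoid(lambda v: v * mb_speed_pdf(v, mass, temp), 0.0, upper)
analytic_mean = math.sqrt(8.0 * K_B * temp / (math.pi * mass))
print(f"v_p = {v_p:.1f} m/s, normalization = {norm:.6f}")
print(f"mean speed: numeric {mean:.2f} m/s, analytic {analytic_mean:.2f} m/s")
```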

Teach Astronomy - Entropy of the Universe

http://www.teachastronomy.com/ The entropy of the universe is a measure of its disorder or chaos. If the laws of thermodynamics apply to the universe as a whole as they do to individual objects or systems within the universe, then the fate of the universe must be to increase in entropy.

From playlist 23. The Big Bang, Inflation, and General Cosmology 2

The ENTROPY EQUATION and its Applications | Thermodynamics and Microstates EXPLAINED

Entropy is a hotly discussed topic... but how can we actually CALCULATE the entropy of a system? (Note: The written document discussed here can be found in the pinned comment below!) Hey everyone, I'm back with a new video, and this time it's a bit different to my usual ones! In this vid

From playlist Thermodynamics by Parth G

David Sutter: "A chain rule for the quantum relative entropy"

Entropy Inequalities, Quantum Information and Quantum Physics 2021 "A chain rule for the quantum relative entropy" David Sutter - IBM Zürich Research Laboratory Abstract: The chain rule for the conditional entropy allows us to view the conditional entropy of a large composite system as a

From playlist Entropy Inequalities, Quantum Information and Quantum Physics 2021
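
The talk concerns the quantum relative entropy, but the classical chain rule it generalizes is easy to check numerically. For two discrete random variables it reads $H(X,Y) = H(X) + H(Y\mid X)$, where $H(Y\mid X) = \sum_x p(x)\, H(Y\mid X=x)$.

Below is a minimal Python sketch of this classical case only; the joint distribution and the helper names are illustrative assumptions. It says nothing about the quantum chain rule discussed in the talk.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Joint = Dict[Tuple[str, str], float]  # maps (x, y) -> p(x, y)


def entropy_bits(probs: Iterable[float]) -> float:
    """Shannon entropy in bits, skipping zero-probability outcomes."""
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)


def marginal_x(joint: Joint) -> Dict[str, float]:
    """p(x) = sum_y p(x, y)."""
    px: Dict[str, float] = defaultdict(float)
    for (x, _y), p in joint.items():
        px[x] += p
    return dict(px)


def conditional_entropy_y_given_x(joint: Joint) -> float:
    """H(Y|X) = sum_x p(x) H(Y | X = x)."""
    h = 0.0
    for x, p_x in marginal_x(joint).items():
        if p_x == 0:
            continue
        conditional = [p / p_x for (xx, _y), p in joint.items() if xx == x]
        h += p_x * entropy_bits(conditional)
    return h


# Illustrative joint distribution of X in {a, b} and Y in {0, 1}.
joint = {("a", "0"): 0.5, ("a", "1"): 0.25, ("b", "0"): 0.125, ("b", "1"): 0.125}

h_xy = entropy_bits(joint.values())
h_x = entropy_bits(marginal_x(joint).values())
h_y_given_x = conditional_entropy_y_given_x(joint)
print(f"H(X,Y) = {h_xy:.4f} bits, H(X) + H(Y|X) = {h_x + h_y_given_x:.4f} bits")
```

For this distribution both sides equal 1.75 bits.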

Nexus Trimester - Mokshay Madiman (University of Delaware)

The Stam region, or the differential entropy region for sums of independent random vectors Mokshay Madiman (University of Delaware) February 25, 2016 Abstract: Define the Stam region as the subset of the positive orthant of a Euclidean space that arises from considering entropy powe

From playlist Nexus Trimester - 2016 - Fundamental Inequalities and Lower Bounds Theme

Nexus Trimester - Thomas Courtade (UC-Berkeley)

Strong Data Processing and the Entropy Power Inequality Thomas Courtade (UC-Berkeley) February 10, 2016 Abstract: Proving an impossibility result in information theory typically boils down to quantifying a tension between information measures that naturally emerge in an operational setti

From playlist Nexus Trimester - 2016 - Distributed Computation and Communication Theme
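
The entropy power of a random vector $X$ in $\mathbb{R}^n$ with differential entropy $h(X)$ (in nats) is $N(X) = \frac{1}{2\pi e}e^{2h(X)/n}$. The entropy power inequality states that $N(X+Y) \ge N(X) + N(Y)$ for independent $X$ and $Y$. For a one-dimensional Gaussian, $h = \tfrac12\ln(2\pi e\sigma^2)$, so $N = \sigma^2$, and the inequality holds with equality because variances add.

The Python sketch below checks this equality case. The variances are arbitrary illustrative values, and this is not the strong data processing argument of the talk.

```python
import math


def gaussian_differential_entropy(variance: float) -> float:
    """h(X) in nats for a 1-D Gaussian with the given variance: 0.5 ln(2 pi e sigma^2)."""
    return 0.5 * math.log(2.0 * math.pi * math.e * variance)


def entropy_power(h_nats: float, dim: int = 1) -> float:
    """N(X) = exp(2 h(X) / n) / (2 pi e) for a random vector in R^n."""
    return math.exp(2.0 * h_nats / dim) / (2.0 * math.pi * math.e)


var_x, var_y = 1.5, 0.5  # illustrative variances of independent X and Y
n_x = entropy_power(gaussian_differential_entropy(var_x))
n_y = entropy_power(gaussian_differential_entropy(var_y))
# The sum of independent Gaussians is Gaussian with variance var_x + var_y.
n_sum = entropy_power(gaussian_differential_entropy(var_x + var_y))
print(f"N(X) = {n_x:.6f}, N(Y) = {n_y:.6f}, N(X+Y) = {n_sum:.6f}")
```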

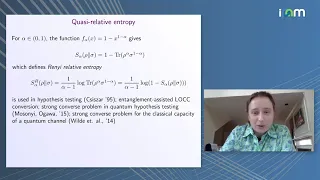

Anna Vershynina: "Quasi-relative entropy: the closest separable state & reversed Pinsker inequality"

Entropy Inequalities, Quantum Information and Quantum Physics 2021 "Quasi-relative entropy: the closest separable state and the reversed Pinsker inequality" Anna Vershynina - University of Houston Abstract: It is well known that for pure states the relative entropy of entanglement is equ

From playlist Entropy Inequalities, Quantum Information and Quantum Physics 2021
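
For orientation, the classical (forward) Pinsker inequality bounds the relative entropy from below by the $\ell_1$ distance: $D(P\|Q) \ge \tfrac12\|P-Q\|_1^2$, with $D$ measured in nats. The talk studies a reversed-type bound for a quantum quasi-relative entropy, which this sketch does not cover.

Below is only a Python check of the classical direction, on illustrative distributions of our own choosing.

```python
import math
from typing import Sequence


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """D(P||Q) = sum_i p_i ln(p_i / q_i) in nats; infinite if some p_i > 0 has q_i = 0."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same support size")
    total = 0.0
    for p_i, q_i in zip(p, q):
        if p_i == 0:
            continue
        if q_i == 0:
            return math.inf
        total += p_i * math.log(p_i / q_i)
    return total


def l1_distance(p: Sequence[float], q: Sequence[float]) -> float:
    """||P - Q||_1 = sum_i |p_i - q_i| (twice the total variation distance)."""
    return sum(abs(a - b) for a, b in zip(p, q))


P = [0.6, 0.3, 0.1]
Q = [0.2, 0.5, 0.3]
d = kl_divergence(P, Q)
bound = 0.5 * l1_distance(P, Q) ** 2
print(f"D(P||Q) = {d:.4f} nats >= {bound:.4f}: {d >= bound}")
```

Here $D(P\|Q) \approx 0.396$ nats, against a Pinsker lower bound of $0.32$.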

Matrix trace inequalities for quantum entropy - M. Berta - Main Conference - CEB T3 2017

Mario Berta (Imperial) / 11.12.2017 Title: Matrix trace inequalities for quantum entropy Abstract: I will present multivariate trace inequalities that extend the Golden-Thompson and Araki-Lieb-Thirring inequalities as well as some logarithmic trace inequalities to arbitrarily many matric

From playlist 2017 - T3 - Analysis in Quantum Information Theory - CEB Trimester
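
For reference, here are the standard two-matrix inequalities that the talk extends. The Golden-Thompson inequality states that for Hermitian matrices $A$ and $B$

$$\operatorname{Tr} e^{A+B} \le \operatorname{Tr}\left(e^{A}e^{B}\right),$$

with equality when $A$ and $B$ commute. The Araki-Lieb-Thirring inequality states that for positive semidefinite $A$ and $B$, $q \ge 0$ and $r \ge 1$

$$\operatorname{Tr}\left[\left(B^{1/2} A B^{1/2}\right)^{rq}\right] \le \operatorname{Tr}\left[\left(B^{r/2} A^{r} B^{r/2}\right)^{q}\right].$$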

Equidistribution of Measures with High Entropy for General Surface Diffeomorphisms by Omri Sarig

PROGRAM : ERGODIC THEORY AND DYNAMICAL SYSTEMS (HYBRID) ORGANIZERS : C. S. Aravinda (TIFR-CAM, Bengaluru), Anish Ghosh (TIFR, Mumbai) and Riddhi Shah (JNU, New Delhi) DATE : 05 December 2022 to 16 December 2022 VENUE : Ramanujan Lecture Hall and Online The programme will have an emphasis

From playlist Ergodic Theory and Dynamical Systems 2022

Information Theory Made Easy (La théorie l’information sans peine) - Bourbaphy - 17/11/18

Olivier Rioul (Telecom Paris Tech) / 17.11.2018 Information Theory Made Easy. You can join us on social media to follow our news. Facebook: https://www.facebook.com/InstitutHenriPoincare/ Twitter: https://twitter.com

From playlist Bourbaphy - 17/11/18 - L'information

Optimized quantum f-divergences and data processing - M. Wilde - Main Conference - CEB T3 2017

Mark Wilde (Baton Rouge) / 11.12.2017 Title: Optimized quantum f-divergences and data processing Abstract: The quantum relative entropy is a measure of the distinguishability of two quantum states, and it is a unifying concept in quantum information theory: many information measures such

From playlist 2017 - T3 - Analysis in Quantum Information Theory - CEB Trimester
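
For reference, the standard (Umegaki) quantum relative entropy of density matrices $\rho$ and $\sigma$ is

$$D(\rho\|\sigma) = \operatorname{Tr}\left[\rho\left(\log\rho - \log\sigma\right)\right]$$

when the support of $\rho$ is contained in that of $\sigma$, and $+\infty$ otherwise. The data-processing inequality states that for every completely positive trace-preserving map $\mathcal{N}$

$$D\left(\mathcal{N}(\rho)\,\|\,\mathcal{N}(\sigma)\right) \le D(\rho\|\sigma).$$

When $\rho$ and $\sigma$ commute, they are diagonal in a common basis with eigenvalues $p_i$ and $q_i$, and $D$ reduces to the classical relative entropy $\sum_i p_i \log(p_i/q_i)$. The optimized f-divergences of the talk are not reproduced here.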

The adjoint Brascamp-Lieb inequality - Terence Tao

Analysis and Mathematical Physics Topic: The adjoint Brascamp-Lieb inequality Speaker: Terence Tao Affiliation: University of California, Los Angeles Date: March 08, 2023 The Brascamp-Lieb inequality is a fundamental inequality in analysis, generalizing more classical inequalities such a

From playlist Mathematics
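
For reference, in one standard formulation the Brascamp-Lieb inequality concerns linear surjections $B_j:\mathbb{R}^n\to\mathbb{R}^{n_j}$ and exponents $p_j \ge 0$, for $j = 1,\dots,m$. It asserts that for all non-negative integrable $f_j$

$$\int_{\mathbb{R}^n} \prod_{j=1}^{m} f_j(B_j x)^{p_j}\,dx \le \mathrm{BL}(\mathbf{B},\mathbf{p})\prod_{j=1}^{m}\left(\int_{\mathbb{R}^{n_j}} f_j\right)^{p_j},$$

where $\mathrm{BL}(\mathbf{B},\mathbf{p}) \in (0,\infty]$ is the best constant. Taking every $B_j$ to be the identity on $\mathbb{R}^n$ with $\sum_j p_j = 1$ recovers Hölder's inequality with constant 1. The adjoint form discussed in the talk is not shown here.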

Workshop 1 "Operator Algebras and Quantum Information Theory" - CEB T3 2017 - H.Osaka

Hiroyuki Osaka (Ritsumeikan) / 14.09.17 Title: Operator means and application to generalized entropies Abstract: In this talk we present a relation between generalized entropies and operator means. For example, as pointed out by Furuichi [SF11], two upper bounds on the Tsallis entropie

From playlist 2017 - T3 - Analysis in Quantum Information Theory - CEB Trimester
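
The Tsallis entropy mentioned in this abstract is defined, for a probability vector $p$ and index $q \ne 1$ (with the constant $k$ set to 1), as $S_q(p) = \frac{1 - \sum_i p_i^q}{q-1}$. It recovers the Shannon entropy in nats as $q \to 1$.

Below is a minimal Python sketch; the names and the example distribution are our own. It does not reproduce the operator-mean bounds from the talk.

```python
import math
from typing import Sequence


def tsallis_entropy(probs: Sequence[float], q: float) -> float:
    """S_q = (1 - sum_i p_i^q) / (q - 1), with k = 1; undefined at q = 1."""
    if q == 1.0:
        raise ValueError("q = 1 is the Shannon limit; use shannon_nats instead")
    return (1.0 - sum(p ** q for p in probs if p > 0)) / (q - 1.0)


def shannon_nats(probs: Sequence[float]) -> float:
    """Shannon entropy in nats, the q -> 1 limit of the Tsallis entropy."""
    return sum(p * math.log(1.0 / p) for p in probs if p > 0)


P = [0.5, 0.3, 0.2]
for q in (2.0, 1.1, 1.001):
    print(f"q = {q}: S_q = {tsallis_entropy(P, q):.6f}")
print(f"Shannon (nats): {shannon_nats(P):.6f}")
```

For this distribution, $S_2 = 1 - (0.25 + 0.09 + 0.04) = 0.62$. As $q$ approaches 1, the values approach the Shannon entropy of about 1.03 nats.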

Physics 32.5 Statistical Thermodynamics (16 of 39) Definition of Entropy of a Microstate: Example

Visit http://ilectureonline.com for more math and science lectures! To donate: http://www.ilectureonline.com/donate https://www.patreon.com/user?u=3236071 In this video I will explain entropy in statistical thermodynamics using the Boltzmann definition. Next video in this series can be s

Eduard Feireisl: Stability issues in the theory of complete fluid systems

Find this video and other talks given by worldwide mathematicians on CIRM's Audiovisual Mathematics Library: http://library.cirm-math.fr. And discover all its functionalities: - Chapter markers and keywords to watch the parts of your choice in the video - Videos enriched with abstracts, b

From playlist Mathematical Physics