(ML 18.4) Examples of Markov chains with various properties (part 1)

A very simple example of a Markov chain with two states, to illustrate the concepts of irreducibility, aperiodicity, and stationary distributions.

From playlist Machine Learning
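
For readers who want to try the two-state example described above, here is a minimal sketch. It assumes a chain on states 0 and 1 with `a = P(0 -> 1)` and `b = P(1 -> 0)`; the function names and the values 0.3 and 0.1 are hypothetical and not taken from the video. Such a chain is irreducible when both `a` and `b` are positive, and it is aperiodic unless `a = b = 1`.

```python
def two_state_stationary(a, b):
    """Stationary distribution of a two-state chain.

    a = P(0 -> 1), b = P(1 -> 0). Solving pi = pi P with pi0 + pi1 = 1
    gives pi = (b / (a + b), a / (a + b)) whenever a + b > 0.
    """
    if not (0.0 <= a <= 1.0 and 0.0 <= b <= 1.0):
        raise ValueError("a and b must be probabilities")
    if a + b == 0.0:
        raise ValueError("a = b = 0: every distribution is stationary")
    return (b / (a + b), a / (a + b))


def step(dist, a, b):
    """Advance a distribution over the two states by one time step."""
    p0, p1 = dist
    return (p0 * (1 - a) + p1 * b, p0 * a + p1 * (1 - b))


# Hypothetical transition probabilities, chosen only for illustration.
a, b = 0.3, 0.1
pi = two_state_stationary(a, b)

# Start in state 0 and iterate; for an irreducible, aperiodic chain
# the distribution approaches pi.
dist = (1.0, 0.0)
for _ in range(200):
    dist = step(dist, a, b)
```

With `a = b = 1` the stationary distribution (0.5, 0.5) still exists, but the iteration above oscillates between the two states instead of converging, which is what periodicity looks like in practice.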

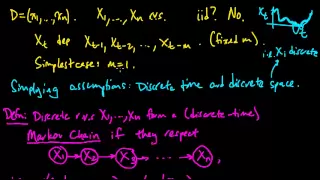

(ML 14.2) Markov chains (discrete-time) (part 1)

Definition of a (discrete-time) Markov chain, and two simple examples (random walk on the integers, and an oversimplified weather model). Examples of generalizations to continuous-time and/or continuous-space. Motivation for the hidden Markov model.

From playlist Machine Learning

Markov Chains Clearly Explained! Part - 1

Let's understand Markov chains and their properties with an easy example. I've also discussed the equilibrium state in great detail. #markovchain #datascience #statistics For more videos please subscribe - http://bit.ly/normalizedNERD Markov Chain series - https://www.youtube.com/playl

From playlist Markov Chains Clearly Explained!

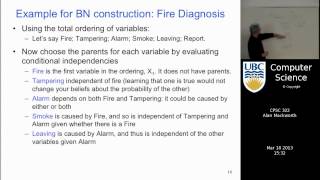

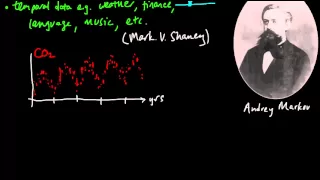

(ML 14.1) Markov models - motivating examples

Introduction to Markov models, using intuitive examples of applications, and motivating the concept of the Markov chain.

From playlist Machine Learning

Intro to Markov Chains & Transition Diagrams

Markov Chains or Markov Processes are an extremely powerful tool from probability and statistics. They represent a statistical process that happens over and over again, where we try to predict the future state of a system. A Markov process is one where the probability of the future ONLY de

From playlist Discrete Math (Full Course: Sets, Logic, Proofs, Probability, Graph Theory, etc)

Markov Chains: n-step Transition Matrix | Part - 3

Let's understand Markov chains and their properties. In this video, I've discussed higher-order transition matrices and how they are related to the equilibrium state. #markovchain #datascience #statistics For more videos please subscribe - http://bit.ly/normalizedNERD Markov Chain ser

From playlist Markov Chains Clearly Explained!
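
As a rough illustration of the n-step transition matrix mentioned above: under the assumption of a row-stochastic matrix `P` (each row sums to 1), the n-step transition probabilities are the entries of the matrix power `P^n`. The three-state matrix and the helper names below are hypothetical and not taken from the video.

```python
def mat_mul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [
        [sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
        for i in range(len(A))
    ]


def mat_pow(P, n):
    """Compute P^n by repeated squaring (n is a non-negative integer)."""
    size = len(P)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    base = [row[:] for row in P]
    while n > 0:
        if n & 1:
            result = mat_mul(result, base)
        base = mat_mul(base, base)
        n >>= 1
    return result


# Hypothetical row-stochastic transition matrix (rows sum to 1).
P = [
    [0.50, 0.50, 0.00],
    [0.25, 0.50, 0.25],
    [0.00, 0.50, 0.50],
]

P2 = mat_pow(P, 2)    # two-step transition probabilities
P50 = mat_pow(P, 50)  # for this chain, every row is close to the equilibrium
```

For this particular matrix, every row of `P50` is approximately (0.25, 0.5, 0.25), the equilibrium distribution, so the starting state no longer matters after many steps.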

Absorption probabilities in finite Markov chains

Code discussed in this video: https://gist.github.com/Nikolaj-K/f660de8cec4551cfb879479470625e20 Wikipedia: https://en.wikipedia.org/wiki/Absorbing_Markov_chain How's life?

From playlist Programming

On Experiments for Causal Inference and System Identification, Nihat Ay

In the first part of his presentation, Professor Nihat Ay of the Max Planck Institute for Mathematics in the Sciences will provide an introduction to the field of causal networks. He will focus on instructive simple examples in order to highlight the core conceptual and philosophical ideas

From playlist Franke Program in Science and the Humanities

Nexus Trimester - Ioannis Kontoyiannis (Athens U of Econ & Business)

Testing temporal causality and estimating directed information Ioannis Kontoyiannis (Athens U of Econ & Business) March 18, 2016 Abstract: The problem of estimating the directed information rate between two Markov chains of arbitrary (but finite) order is considered. Specifically for the

From playlist 2016-T1 - Nexus of Information and Computation Theory - CEB Trimester

Matrix Limits and Markov Chains

In this video I present a cool application of linear algebra in which I use diagonalization to calculate the eventual outcome of a mixing problem. This process is a simple example of what's called a Markov chain. Note: I just got a new tripod and am still experimenting with it; sorry if t

From playlist Eigenvalues
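
The diagonalization idea described above can be sketched with NumPy. This assumes a column-stochastic matrix `A` (each column sums to 1) with a simple eigenvalue 1 and all other eigenvalues strictly inside the unit circle. The 2x2 matrix below is a hypothetical mixing problem, not the one from the video.

```python
import numpy as np

# Hypothetical column-stochastic mixing matrix (columns sum to 1).
A = np.array([[0.9, 0.2],
              [0.1, 0.8]])

# Diagonalize: A = V diag(eigvals) V^{-1}, so A^n = V diag(eigvals**n) V^{-1}.
eigvals, V = np.linalg.eig(A)

# As n grows, the eigenvalue 1 stays at 1 and every eigenvalue with
# modulus below 1 decays to 0 (this is the assumption stated above).
limit_eigs = np.where(np.isclose(eigvals, 1.0), 1.0, 0.0)

# Limit of A^n as n goes to infinity.
A_limit = (V @ np.diag(limit_eigs) @ np.linalg.inv(V)).real
```

Each column of `A_limit` is the same vector, here approximately (2/3, 1/3), so the eventual outcome does not depend on the initial state. Comparing against `np.linalg.matrix_power(A, 200)` is a quick sanity check.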

Emmanuel Candès: "Sailing Through Data: Discoveries and Mirages"

Green Family Lecture Series 2018 "Sailing Through Data: Discoveries and Mirages" Emmanuel Candès, Stanford University Abstract: For a long time, science has operated as follows: a scientific theory can only be empirically tested, and only after it has been advanced. Predictions are deduc

From playlist Public Lectures

Elina Robeva: "Hidden Variables in Linear Non-Gaussian Causal Models"

Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021 Workshop III: Mathematical Foundations and Algorithms for Tensor Computations "Hidden Variables in Linear Non-Gaussian Causal Models" Elina Robeva - University of British Columbia Abstract: Identifying causal

From playlist Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021

Markov Chain Stationary Distribution : Data Science Concepts

What does it mean for a Markov Chain to have a steady state? Markov Chain Intro Video : https://www.youtube.com/watch?v=prZMpThbU3E

From playlist Data Science Concepts

Statistical Rethinking 2022 Lecture 17 - Measurement Error

Slides and other course materials: https://github.com/rmcelreath/stat_rethinking_2022 Intro: Music: https://www.youtube.com/watch?v=xXHH6bBAjDQ Palms: https://www.youtube.com/watch?v=We2KHqtqDos Pancake: https://www.youtube.com/watch?v=44ORuxym4fo Pause: https://www.youtube.com/watch?v=p

From playlist Statistical Rethinking 2022

Peter BÜHLMANN - Robust, generalizable and causal-oriented machine learning

https://ams-ems-smf2022.inviteo.fr/

From playlist International Meeting 2022 AMS-EMS-SMF

Chiara Sabatti: Knockoff genotypes: value in counterfeit

CIRM VIRTUAL EVENT Recorded during the meeting "Mathematical Methods of Modern Statistics 2" on June 5, 2020 by the Centre International de Rencontres Mathématiques (Marseille, France) Filmmaker: Guillaume Hennenfent Find this video and other talks given by worldwide mathematicians

From playlist Virtual Conference

Prob & Stats - Markov Chains: Method 2 (35 of 38) Finding the Stable State & Transition Matrices

Visit http://ilectureonline.com for more math and science lectures! In this video I will explain the standard form of the absorbing Markov chain. Next video in the Markov Chains series: http://youtu.be/MrmMyK5CuWs

From playlist iLecturesOnline: Probability & Stats 3: Markov Chains & Stochastic Processes