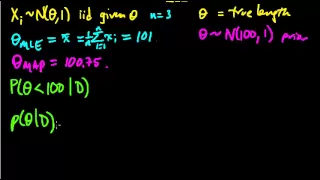

(ML 7.1) Bayesian inference - A simple example

Illustration of the main idea of Bayesian inference, in the simple case of a univariate Gaussian with a Gaussian prior on the mean (and known variances).

From playlist Machine Learning

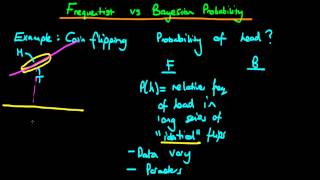

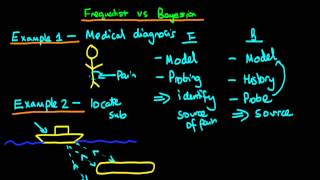

Bayesian vs frequentist statistics probability - part 1

This video provides an intuitive explanation of the difference between Bayesian and classical frequentist statistics. If you are interested in seeing more of the material, arranged into a playlist, please visit: https://www.youtube.com/playlist?list=PLFDbGp5YzjqXQ4oE4w9GVWdiokWB9gEpm Unfo

From playlist Bayesian statistics: a comprehensive course

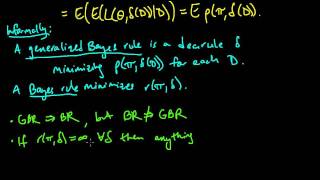

(ML 11.8) Bayesian decision theory

Choosing an optimal decision rule under a Bayesian model. An informal discussion of Bayes rules, generalized Bayes rules, and the complete class theorems.

From playlist Machine Learning

Kernel of a group homomorphism

In this video I introduce the definition of the kernel of a group homomorphism. It is simply the set of all elements in a group that map to the identity element of a second group under the homomorphism. The video also contains the proof that the kernel is a normal subgroup.

From playlist Abstract algebra

(ML 11.4) Choosing a decision rule - Bayesian and frequentist

Choosing a decision rule, from Bayesian and frequentist perspectives. To make the problem well-defined from the frequentist perspective, an additional guiding principle is introduced, such as unbiasedness, minimax, or invariance.

From playlist Machine Learning

Stanford CS229: Machine Learning | Summer 2019 | Lecture 9 - Bayesian Methods - Parametric & Non

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3ptRUmB Anand Avati Computer Science, PhD To follow along with the course schedule and syllabus, visit: http://cs229.stanford.edu/syllabus-summer2019.html

From playlist Stanford CS229: Machine Learning Course | Summer 2019 (Anand Avati)

ML Tutorial: Bayesian Machine Learning (Zoubin Ghahramani)

Machine Learning Tutorial at Imperial College London: Bayesian Machine Learning. Zoubin Ghahramani (University of Cambridge), January 29, 2014

From playlist Machine Learning Tutorials

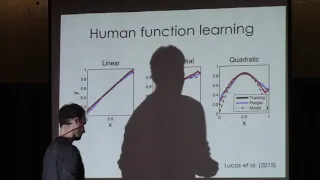

Compositional inductive biases in human function learning - Samuel J. Gershman

IAS-PNI Seminar on ML and Neuroscience. Topic: Compositional inductive biases in human function learning. Speaker: Samuel J. Gershman. Affiliation: Harvard University. Date: January 14, 2020. For more videos, please visit http://video.ias.edu

From playlist Mathematics

Calculating dimension and basis of range and kernel

German version here: https://youtu.be/lBdwtUa_BGM Here, I explain the typical calculation scheme for getting dimension and basis for the image/ran

From playlist Linear algebra (English)

Regularization (Machine Learning): Georg Gottwald

Machine Learning for the Working Mathematician: Week Four, 17 March 2022. Georg Gottwald, Regularization. Seminar series homepage (includes Zoom link): https://sites.google.com/view/mlwm-seminar-2022

From playlist Machine Learning for the Working Mathematician

Determine the Kernel of a Linear Transformation Given a Matrix (R3, x to 0)

This video explains how to determine the kernel of a linear transformation.

From playlist Kernel and Image of Linear Transformation

Marcelo Pereyra: Bayesian inference and mathematical imaging - Lecture 2: Markov chain Monte Carlo

Bayesian inference and mathematical imaging - Part 2: Markov chain Monte Carlo Abstract: This course presents an overview of modern Bayesian strategies for solving imaging inverse problems. We will start by introducing the Bayesian statistical decision theory framework underpinning Bayesi

From playlist Probability and Statistics

Introduction to the Kernel and Image of a Linear Transformation

This video introduces the kernel and image of a linear transformation.

From playlist Kernel and Image of Linear Transformation

From playlist COMP0168 (2020/21)

Stanford CS330 Deep Multi-Task & Meta Learning - Bayesian Meta-Learning l 2022 I Lecture 12

For more information about Stanford's Artificial Intelligence programs visit: https://stanford.io/ai To follow along with the course, visit: https://cs330.stanford.edu/ To view all online courses and programs offered by Stanford, visit: http://online.stanford.edu Chelsea Finn Computer

From playlist Stanford CS330: Deep Multi-Task and Meta Learning I Autumn 2022

11d Machine Learning: Bayesian Linear Regression

Lecture on Bayesian linear regression. By adopting the Bayesian approach (instead of the frequentist approach of ordinary least squares linear regression) we can account for prior information and directly model the distributions of the model parameters by updating with training data. Foll

From playlist Machine Learning

Andreas Mueller - Automated Machine Learning - AI With The Best Oct 2017

AI With The Best hosted 50+ speakers and hundreds of attendees from all over the world on a single platform on October 14-15, 2017. The platform held live talks, Insights/Questions pages, and bookings for 1-on-1s with speakers. Recent years have seen a widespread adoption of machine learn

From playlist talks

Andreas Krause: "Safe and Efficient Exploration in Reinforcement Learning"

Intersections between Control, Learning and Optimization 2020 "Safe and Efficient Exploration in Reinforcement Learning" Andreas Krause - ETH Zurich Abstract: At the heart of Reinforcement Learning lies the challenge of trading exploration -- collecting data for learning better models --

From playlist Intersections between Control, Learning and Optimization 2020

Bayesian vs frequentist statistics

This video provides an intuitive explanation of the difference between Bayesian and classical frequentist statistics. If you are interested in seeing more of the material, arranged into a playlist, please visit: https://www.youtube.com/playlist?list=PLFDbGp5YzjqXQ4oE4w9GVWdiokWB9gEpm Un

From playlist Bayesian statistics: a comprehensive course

ML Tutorial: Probabilistic Numerical Methods (Jon Cockayne)

Machine Learning Tutorial at Imperial College London: Probabilistic Numerical Methods. Jon Cockayne (University of Warwick), February 22, 2017

From playlist Machine Learning Tutorials