(IC 1.6) A different notion of "information"

An informal discussion of the distinctions between our everyday usage of the word "information" and the information-theoretic notion of "information". A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F Attribution for image of TV static:

From playlist Information theory and Coding

(IC 1.1) Information theory and Coding - Outline of topics

A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F Overview of central topics in Information theory and Coding. Compression (source coding) theory: Source coding theorem, Kraft-McMillan inequality, Rate-distortion theorem Error-correctio

From playlist Information theory and Coding

From information theory to learning via Statistical Physics: Introduction: by Florent Krzakala

26 December 2016 to 07 January 2017 VENUE: Madhava Lecture Hall, ICTS Bangalore Information theory and computational complexity have emerged as central concepts in the study of biological and physical systems, in both the classical and quantum realm. The low-energy landscape of classical

From playlist US-India Advanced Studies Institute: Classical and Quantum Information

From information theory to learning via Statistical Physics by Florent Krzakala

From playlist US-India Advanced Studies Institute: Classical and Quantum Information

Thermodynamics of Information by Juan MR Parrondo (Lecture 4)

From playlist US-India Advanced Studies Institute: Classical and Quantum Information

Thermodynamics of Information by Juan MR Parrondo (Lecture 2)

From playlist US-India Advanced Studies Institute: Classical and Quantum Information

Thermodynamics of Information by Juan MR Parrondo (Lecture 1)

From playlist US-India Advanced Studies Institute: Classical and Quantum Information

Computational Differential Geometry, Optimization Algorithms by Mark Transtrum

From playlist US-India Advanced Studies Institute: Classical and Quantum Information

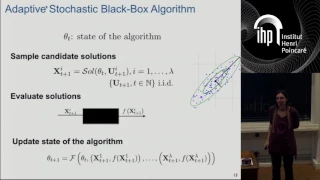

Shannon 100 - 27/10/2016 - Anne AUGER

How information theory sheds new light on black-box optimization Anne Auger (INRIA) Black-box optimization problems occur frequently in many domains ranging from engineering to biology or medicine. In black-box optimization, no information on the function to be optimized besides current

From playlist Shannon 100

Huffman Codes: An Information Theory Perspective

Huffman Codes are one of the most important discoveries in the field of data compression. When you first see them, they almost feel obvious in hindsight, mainly due to how simple and elegant the algorithm ends up being. But there's an underlying story of how they were discovered by Huffman

From playlist Data Compression

David McAllester - Dependent Type Theory from the Perspective of Mathematics, Physics, and (...)

Dependent type theory imposes a type system on Zermelo-Fraenkel set theory (ZFC). From a mathematics and physics perspective, dependent type theory naturally generalizes the Bourbaki notion of structure and provides a universal notion of isomorphism and symmetry. This comes with a universal

From playlist Mikefest: A conference in honor of Michael Douglas' 60th birthday

Tightening information-theoretic generalization bounds with data-dependent estimate... - Daniel Roy

Workshop on Theory of Deep Learning: Where next? Topic: Tightening information-theoretic generalization bounds with data-dependent estimates with an application to SGLD Speaker: Daniel Roy Affiliation: University of Toronto Date: October 15, 2019 For more video please visit http://video

From playlist Mathematics

IMS Public Lecture: Are Quantum Computers The Next Generation Of Supercomputers?

Reinhard Werner, Technische Universität Braunschweig, Germany

From playlist Public Lectures

Information Theory Meets Quantum Physics: The magic of wave dynamics by Apoorva Patel

From playlist US-India Advanced Studies Institute: Classical and Quantum Information

The Abel Prize announcement 2021 - Avi Wigderson and László Lovász

0:49 The Abel Prize announced by Hans Petter Graver, President of The Norwegian Academy of Science and Letters 1:38 Citation by Hans Munthe-Kaas, Chair of the Abel committee 10:22 Popular presentation of the prize winners' work by Alex Bellos, British writer and science communicator 17:43

From playlist The Abel Prize announcements

Unit 3 Debate: Tomer Ullman and Laura Schulz

MIT RES.9-003 Brains, Minds and Machines Summer Course, Summer 2015 View the complete course: https://ocw.mit.edu/RES-9-003SU15 Instructor: Tomer Ullman, Laura Schulz Speakers debate what makes a good theory of the world, the potential role of stochastic search in theory formation, goal-o

From playlist MIT RES.9-003 Brains, Minds and Machines Summer Course, Summer 2015

Ximena Fernández 7/20/22: Morse theory for group presentations and the persistent fundamental group

Discrete Morse theory is a combinatorial tool to simplify the structure of a given (regular) CW-complex up to homotopy equivalence, in terms of the critical cells of discrete Morse functions. In this talk, I will present a refinement of this theory that guarantees not only a homotopy equiv

From playlist AATRN 2022

Universality in numerical computations with random data. Analytical results. - Percy Deift

Analysis Math-Physics Seminar Topic: Universality in numerical computations with random data. Analytical results. Speaker: Percy Deift Affiliation: New York University Date: October 19, 2016 For more video, please visit http://video.ias.edu

From playlist Mathematics

From information theory to learning via Statistical Physics: From statistical by Florent Krzakala

From playlist US-India Advanced Studies Institute: Classical and Quantum Information