How to find correlation in Excel with the Data Analysis Toolpak

Click this link for more information on correlation coefficients plus more FREE Excel videos and tips: http://www.statisticshowto.com/what-is-the-pearson-correlation-coefficient/

From playlist Regression Analysis

RELATIONSHIPS Between Variables: Standardized Covariance (7-1)

Correlation is a way of measuring the extent to which two variables are related. The term correlation is synonymous with “relationship.” Variables are related when changes in one variable are consistently associated with changes in another variable. Dr. Daniel reviews Variance, Covariance,

From playlist Correlation And Regression in Statistics (WK 07 - QBA 237)
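The standardized-covariance idea in the entry above can be written out as a short formula sketch. It assumes the usual sample (n - 1) convention, and the video may use a different one. Dividing the covariance by both standard deviations cancels the units of x and y, which is why r is unitless and always lies between -1 and 1.

```latex
s_{xy} = \frac{1}{n-1}\sum_{i=1}^{n}(x_i-\bar{x})(y_i-\bar{y}), \qquad
s_x = \sqrt{\frac{1}{n-1}\sum_{i=1}^{n}(x_i-\bar{x})^2}, \qquad
r = \frac{s_{xy}}{s_x\, s_y}
```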

Estimate the Correlation Coefficient Given a Scatter Plot

This video explains how to estimate the correlation coefficient given a scatter plot.

From playlist Performing Linear Regression and Correlation

Covariance (14 of 17) Covariance Matrix "Normalized" - Correlation Coefficient

Visit http://ilectureonline.com for more math and science lectures! To donate: http://www.ilectureonline.com/donate https://www.patreon.com/user?u=3236071 We will find the “normalized” matrix (or the correlation coefficients) from the covariance matrix from the previous video using 3 sa

From playlist COVARIANCE AND VARIANCE
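The normalization step described in the entry above, turning a covariance matrix into a matrix of correlation coefficients, can be sketched in plain Python. The covariance matrix here is a hypothetical example, not the three data sets from the video. Each entry is divided by the product of the matching standard deviations, which are the square roots of the diagonal entries.

```python
import math

def covariance_to_correlation(cov):
    """Normalize a covariance matrix: R[i][j] = C[i][j] / sqrt(C[i][i] * C[j][j])."""
    n = len(cov)
    std = [math.sqrt(cov[i][i]) for i in range(n)]  # standard deviations from the diagonal
    return [[cov[i][j] / (std[i] * std[j]) for j in range(n)] for i in range(n)]

# Hypothetical 3x3 covariance matrix (variances 4, 9 and 1 on the diagonal).
C = [[4.0, 2.0, -1.0],
     [2.0, 9.0, 1.5],
     [-1.0, 1.5, 1.0]]
R = covariance_to_correlation(C)
for row in R:
    print([round(v, 3) for v in row])
```

The diagonal of the result is always 1, since every variable is perfectly correlated with itself.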

Covariance Definition and Example

What is covariance? How do I find it? Step by step example of a solved covariance problem for a sample, along with an explanation of what the results mean and how it compares to correlation. 00:00 Overview 03:01 Positive, Negative, Zero Correlation 03:19 Covariance for a Sample Example

From playlist Correlation
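A minimal sketch of the sample covariance described in the entry above, assuming the usual n - 1 denominator for a sample. The data are hypothetical. A positive result means the two variables tend to move together and a negative one means they tend to move in opposite directions; unlike correlation, the size of the value depends on the units of x and y.

```python
def sample_covariance(x, y):
    """Sample covariance with the n - 1 (Bessel) denominator."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length samples with at least 2 points")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Sum of products of deviations from the means, divided by n - 1.
    return sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / (n - 1)

# Hypothetical data: y tends to rise with x, so the covariance is positive.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]
print(sample_covariance(x, y))
```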

Limits of correlation (applied)

Correlation is a standardized covariance (i.e., translated into unit-less form with volatilities). It cannot be used alone: (i) it can be "distorted" by low volatilities, and (ii) it does not give information revealed by the scatter (in this example, both hedge fund series are similarly co

From playlist Statistics: Introduction
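Point (ii) in the entry above, that a correlation coefficient does not reveal what the scatter shows, can be illustrated with a small sketch. The data are hypothetical: y is exactly x squared, a perfect but nonlinear dependence, yet Pearson's r comes out as 0 because the deviations on the left and right halves cancel.

```python
import math

def pearson_r(x, y):
    """Pearson correlation: covariance scaled by both standard deviations."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

x = [-3, -2, -1, 0, 1, 2, 3]
y_curved = [v * v for v in x]      # exact U-shaped dependence on x
y_line = [2 * v + 1 for v in x]    # exact linear dependence on x
print(pearson_r(x, y_curved), pearson_r(x, y_line))
```

Only plotting the scatter shows that the first pair is perfectly related.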

This video explains how to find the correlation coefficient which describes the strength of the linear relationship between two variables x and y. My Website: https://www.video-tutor.net Patreon: https://www.patreon.com/MathScienceTutor Amazon Store: https://www.amazon.com/shop/theorga

From playlist Statistics

Function Entropy in Deep-Learning Networks – Mean Field Behaviour and Large... by David Saad

DISCUSSION MEETING : STATISTICAL PHYSICS OF MACHINE LEARNING ORGANIZERS : Chandan Dasgupta, Abhishek Dhar and Satya Majumdar DATE : 06 January 2020 to 10 January 2020 VENUE : Madhava Lecture Hall, ICTS Bangalore Machine learning techniques, especially “deep learning” using multilayer n

From playlist Statistical Physics of Machine Learning 2020

Scatterplots, Part 3: The Formula Behind the Correlation Coefficient

We use the Scatterplots & Correlation app to explain the formula behind the correlation coefficient. The app allows you to find and plot the z-scores, showing the 4 quadrants in which points on the scatterplot can fall.

From playlist Chapter 3: Relationships between two variables
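The z-score view described in the entry above can be sketched as follows, assuming sample standard deviations and the formula r = Σ z_x z_y / (n - 1). The data are hypothetical. Points in quadrants I and III (both z-scores with the same sign) push r up, points in II and IV push it down, and points with a z-score of exactly 0 contribute nothing.

```python
import statistics

def correlation_via_z_scores(x, y):
    """Return Pearson's r computed from z-scores, plus a count of points per quadrant."""
    n = len(x)
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    sd_x, sd_y = statistics.stdev(x), statistics.stdev(y)  # sample SDs (n - 1)
    zx = [(v - mean_x) / sd_x for v in x]
    zy = [(v - mean_y) / sd_y for v in y]

    counts = {"I": 0, "II": 0, "III": 0, "IV": 0, "axis": 0}
    for a, b in zip(zx, zy):
        if a > 0 and b > 0:
            counts["I"] += 1
        elif a < 0 and b > 0:
            counts["II"] += 1
        elif a < 0 and b < 0:
            counts["III"] += 1
        elif a > 0 and b < 0:
            counts["IV"] += 1
        else:
            counts["axis"] += 1  # a z-score of exactly 0 adds nothing to the sum

    r = sum(a * b for a, b in zip(zx, zy)) / (n - 1)
    return r, counts

r, counts = correlation_via_z_scores([1, 2, 3, 4, 5], [1, 3, 2, 5, 4])
print(round(r, 3), counts)
```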

Areejit Samal (7/25/22): Forman-Ricci curvature: A geometry-inspired measure with wide applications

Abstract: In the last few years, we have been active in the development of geometry-inspired measures for the edge-based characterization of real-world complex networks. In particular, we were the first to introduce a discretization of the classical Ricci curvature proposed by R. Forman to the

From playlist Applied Geometry for Data Sciences 2022
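The abstract above is cut off before it gives a formula. As a hedged illustration only: for an unweighted, undirected network, Forman's edge curvature reduces in its simplest form to F(u, v) = 4 - deg(u) - deg(v), ignoring weights and higher-order faces such as triangles. The sketch below computes that simplest form on a hypothetical graph, assuming no repeated edges or self-loops; weighted and augmented variants are not covered.

```python
from collections import defaultdict

def forman_curvature(edges):
    """Simplest Forman-Ricci curvature of each edge in an unweighted, undirected simple graph."""
    degree = defaultdict(int)
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    # Every extra edge touching either endpoint lowers the curvature by 1.
    return {(u, v): 4 - degree[u] - degree[v] for u, v in edges}

# Hypothetical graph: a hub with three neighbours, one of which has a further neighbour.
edges = [("hub", "a"), ("hub", "b"), ("hub", "c"), ("c", "d")]
for edge, value in forman_curvature(edges).items():
    print(edge, value)
```

Edges around high-degree nodes get strongly negative values, which is one reason this measure is used to flag hub-connecting edges.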

Eun-Ah Kim - Machine Learning for Quantum Simulation - IPAM at UCLA

Recorded 15 April 2022. Eun-Ah Kim of Cornell University presents "Machine Learning for Quantum Simulation" at IPAM's Model Reduction in Quantum Mechanics Workshop. Learn more online at: http://www.ipam.ucla.edu/programs/workshops/workshop-ii-model-reduction-in-quantum-mechanics/?tab=sched

From playlist 2022 Model Reduction in Quantum Mechanics Workshop

Restricted Boltzmann Machines: Statistical Physics and applications... by Simona Cocco

DISCUSSION MEETING : STATISTICAL PHYSICS OF MACHINE LEARNING ORGANIZERS : Chandan Dasgupta, Abhishek Dhar and Satya Majumdar DATE : 06 January 2020 to 10 January 2020 VENUE : Madhava Lecture Hall, ICTS Bangalore Machine learning techniques, especially “deep learning” using multilayer n

From playlist Statistical Physics of Machine Learning 2020

Daniel Roberts: "Deep learning as a toy model of the 1/N-expansion and renormalization"

Machine Learning for Physics and the Physics of Learning 2019. Workshop IV: Using Physical Insights for Machine Learning. "Deep learning as a toy model of the 1/N-expansion and renormalization". Daniel Roberts - Diffeo. Institute for Pure and Applied Mathematics, UCLA. November 20, 2019

From playlist Machine Learning for Physics and the Physics of Learning 2019

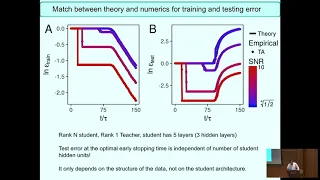

Statistical mechanics of deep learning by Surya Ganguli

Statistical Physics Methods in Machine Learning DATE: 26 December 2017 to 30 December 2017 VENUE: Ramanujan Lecture Hall, ICTS, Bengaluru The theme of this Discussion Meeting is the analysis of distributed/networked algorithms in machine learning and theoretical computer science in the

From playlist Statistical Physics Methods in Machine Learning

Aurora Clark (3/10/20): Topology in chemistry applications

Title: Topology in chemistry applications: Order parameters, collective variables and so much more Speaker: Aurora Clark, Department of Chemistry, Washington State University Abstract: In recent years the methods associated with topological data analysis have begun to be used to understa

From playlist DELTA (Descriptors of Energy Landscape by Topological Analysis), Webinar 2020

Statistical mechanics of deep learning - Surya Ganguli

Workshop on Theory of Deep Learning: Where next? Topic: Statistical mechanics of deep learning Speaker: Surya Ganguli Affiliation: Stanford University Date: October 18, 2019 For more video please visit http://video.ias.edu

From playlist Mathematics

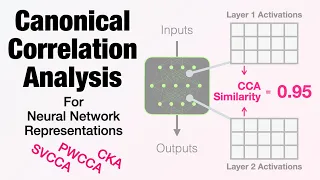

Inspecting Neural Networks with CCA - A Gentle Intro (Explainable AI for Deep Learning)

Canonical Correlation Analysis (CCA) is one of the methods used to explore deep neural networks. Methods like CKA and SVCCA reveal how a neural network processes its inputs, typically by serving as similarity measures between different activation matrices. I

From playlist Explainable AI Guide
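Of the methods the entry above names, linear CKA (centered kernel alignment) is the easiest to sketch. This is an illustration under stated assumptions, not the video's code: each activation matrix has one row per input and one column per neuron, both are recorded on the same inputs, and the score is ||Y^T X||_F^2 / (||X^T X||_F ||Y^T Y||_F) on column-centered matrices. SVCCA is a different procedure and is not shown.

```python
import math

def center_columns(X):
    """Subtract each column's mean (rows = inputs, columns = neurons)."""
    n, d = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(d)]
    return [[row[j] - means[j] for j in range(d)] for row in X]

def cross_product(A, B):
    """Return A^T B for A (n x p) and B (n x q) as a p x q nested list."""
    n, p, q = len(A), len(A[0]), len(B[0])
    return [[sum(A[i][a] * B[i][b] for i in range(n)) for b in range(q)]
            for a in range(p)]

def frobenius_sq(M):
    """Squared Frobenius norm: sum of squared entries."""
    return sum(v * v for row in M for v in row)

def linear_cka(X, Y):
    """Linear CKA between two activation matrices recorded on the same inputs."""
    Xc, Yc = center_columns(X), center_columns(Y)
    numerator = frobenius_sq(cross_product(Yc, Xc))
    denominator = math.sqrt(frobenius_sq(cross_product(Xc, Xc))
                            * frobenius_sq(cross_product(Yc, Yc)))
    return numerator / denominator

# Hypothetical activations: 4 inputs x 2 neurons.
X = [[1.0, 2.0], [3.0, 1.0], [0.0, 4.0], [2.0, 2.0]]
Y_scaled = [[3 * v for v in row] for row in X]   # same layer, rescaled
Y_permuted = [[row[1], row[0]] for row in X]     # same layer, neurons reordered
print(linear_cka(X, Y_scaled), linear_cka(X, Y_permuted))
```

Both scores come out at (numerically) 1, reflecting linear CKA's invariance to isotropic scaling and orthogonal transforms such as reordering neurons, which is why it can compare layers whose individual units do not line up.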

Kim-Anh Lê Cao: Matrix factorisation techniques for data integration

Gene module detection methods aim to group genes with similar expression profiles to shed light on functional relationships and co-regulation, and to infer gene regulatory networks. Methods proposed so far use clustering to group genes based on global similarity in their expression profiles

From playlist Probability and Statistics

Covariance (12 of 17) Covariance Matrix with 3 Data Sets and Correlation Coefficients

Visit http://ilectureonline.com for more math and science lectures! To donate: http://www.ilectureonline.com/donate https://www.patreon.com/user?u=3236071 We will find the correlation coefficients of the 3 data sets from the previous 2 videos. Next video in this series can be seen at:

From playlist COVARIANCE AND VARIANCE