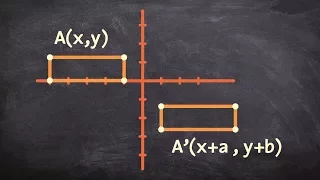

What is a transformation vector

👉 Learn how to apply transformations to a figure on a plane. We will do this by sliding the figure according to the transformation vector, or direction of translation. When performing a translation, we slide a given figure up, down, left, or right. The orientation and size of the fi

From playlist Transformations
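
A translation adds the same vector to every point of the figure, which is why size and orientation are preserved. The sketch below assumes the figure is given as a list of (x, y) vertices and uses a hypothetical helper name, `translate`. It applies a translation vector (a, b): a units horizontally (right if positive) and b units vertically (up if positive).

```python
from typing import List, Tuple

Point = Tuple[float, float]


def translate(figure: List[Point], vector: Tuple[float, float]) -> List[Point]:
    """Slide every vertex of `figure` by `vector` (hypothetical helper).

    The same (a, b) is added to each vertex, so side lengths, angles and
    orientation are all preserved: only the position changes.
    """
    a, b = vector
    return [(x + a, y + b) for x, y in figure]


if __name__ == "__main__":
    triangle = [(1, 2), (4, 2), (2, 5)]
    # Translate 3 units left and 2 units up, i.e. by the vector (-3, 2).
    print(translate(triangle, (-3, 2)))  # [(-2, 4), (1, 4), (-1, 7)]
```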

Transformations of a function - How to do Pre-Calc

👉 Learn how to determine the transformation of a function. Transformations can be horizontal or vertical, cause stretching or shrinking or be a reflection about an axis. You will see how to look at an equation or graph and determine the transformation. You will also learn how to graph a t

From playlist Characteristics of Functions
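
The transformations listed above are often summarized in the form g(x) = a·f(b(x − h)) + k. Here |a| > 1 stretches the graph vertically and 0 < |a| < 1 shrinks it, and a < 0 reflects it about the x-axis. The parameter b rescales the graph horizontally by a factor of 1/|b|, and b < 0 reflects it about the y-axis. A positive h shifts the graph right, and a positive k shifts it up. The sketch below assumes this parameterization, and the helper name `transform` is hypothetical.

```python
from typing import Callable

Func = Callable[[float], float]


def transform(f: Func, a: float = 1, b: float = 1, h: float = 0, k: float = 0) -> Func:
    """Return g(x) = a * f(b * (x - h)) + k (hypothetical helper).

    a: vertical stretch (|a| > 1) or shrink (0 < |a| < 1); a < 0 reflects about the x-axis
    b: horizontal scale by 1/|b|; b < 0 reflects about the y-axis
    h: horizontal shift (right if h > 0)
    k: vertical shift (up if k > 0)
    """
    return lambda x: a * f(b * (x - h)) + k


if __name__ == "__main__":
    parent = lambda x: x ** 2
    # Reflect about the x-axis, stretch vertically by 2, shift right 3 and up 1.
    g = transform(parent, a=-2, h=3, k=1)
    print(g(3), g(4))  # 1 -1
```

In this example, the vertex of the parabola moves from (0, 0) to (3, 1), and the graph now opens downward.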

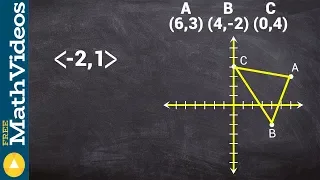

Apply a translation vector to translate a figure ex 1

👉 Learn how to apply transformations to a figure on a plane. We will do this by sliding the figure according to the transformation vector, or direction of translation. When performing a translation, we slide a given figure up, down, left, or right. The orientation and size of the fi

From playlist Transformations

How to apply a transformation vector to translate a figure

👉 Learn how to apply transformations to a figure on a plane. We will do this by sliding the figure according to the transformation vector, or direction of translation. When performing a translation, we slide a given figure up, down, left, or right. The orientation and size of the fi

From playlist Transformations

Transformations: Describe Translation (Grade 4) - OnMaths GCSE Maths Revision

Topic: Transformations: Describe Translation. Do this paper online for free: https://www.onmaths.com/transformations/ Grade: 4. This question appears on calculator and non-calculator, higher and foundation GCSE papers. Practise and revise with OnMaths. Go to onmaths.com for more resources, l

From playlist Transformations
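
Describing a translation is the reverse problem: subtract each object vertex from the matching image vertex. The sketch below assumes the vertices of both shapes are listed in corresponding order, and the helper `describe_translation` is hypothetical. In a GCSE answer, the resulting (a, b) would be written as a column vector, with the horizontal movement a on top and the vertical movement b below.

```python
from typing import List, Optional, Tuple

Point = Tuple[int, int]


def describe_translation(obj: List[Point], image: List[Point]) -> Optional[Tuple[int, int]]:
    """Return the translation vector (a, b) mapping `obj` onto `image` (hypothetical helper).

    Assumes vertices are listed in corresponding order. Returns None if the
    shapes do not match or the vertices did not all move by the same vector
    (in which case the transformation is not a translation).
    """
    if not obj or len(obj) != len(image):
        return None
    vectors = {(x2 - x1, y2 - y1) for (x1, y1), (x2, y2) in zip(obj, image)}
    return vectors.pop() if len(vectors) == 1 else None


if __name__ == "__main__":
    shape_a = [(1, 1), (3, 1), (1, 4)]
    shape_b = [(6, -1), (8, -1), (6, 2)]
    print(describe_translation(shape_a, shape_b))  # (5, -2)
```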

Pre-Calculus - Introduction to function transformations

This video will introduce you to the basic idea of applying transformations to a function. An example is presented at the end so that you can see how they are applied. For further examples watch the rest of the videos associated with the playlist. For more videos please visit http://www

From playlist Pre-Calculus

Pretrained Transformers as Universal Computation Engines (Machine Learning Research Paper Explained)

#universalcomputation #pretrainedtransformers #finetuning Large-scale pre-training and subsequent fine-tuning is a common recipe for success with transformer models in machine learning. However, most such transfer learning is done when a model is pre-trained on the same or a very similar

From playlist Papers Explained

All You Need to Know on Multilingual Sentence Vectors (1 Model, 50+ Languages)

We've learned about how sentence transformers can be used to create high-quality vector representations of text. We can then use these vectors to find similar vectors, which can be used for many applications such as semantic search or topic modeling. These models are very good at producin

From playlist NLP for Semantic Search Course
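
Finding similar vectors is commonly done by ranking them by cosine similarity. In the sketch below, the three-dimensional vectors are made-up stand-ins for real sentence embeddings, which typically have hundreds of dimensions. The helpers `cosine` and `search` are hypothetical names. The example illustrates the multilingual idea: if an English sentence and its French translation are embedded close together, the same query retrieves both.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity: dot product divided by the product of the norms."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def search(query: Vector, corpus: Dict[str, Vector], top_k: int = 2) -> List[Tuple[str, float]]:
    """Rank corpus sentences by cosine similarity to the query vector."""
    scored = [(text, cosine(query, vec)) for text, vec in corpus.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    # Made-up 3-d vectors standing in for real sentence embeddings.
    corpus = {
        "the cat sat on the mat": [0.9, 0.1, 0.0],
        "le chat est sur le tapis": [0.85, 0.15, 0.05],
        "stock prices fell today": [0.0, 0.2, 0.95],
    }
    query = [0.88, 0.12, 0.02]  # pretend embedding of "a cat on a rug"
    for text, score in search(query, corpus):
        print(f"{score:.3f}  {text}")
```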

Chat with your Image! BLIP-2 connects Q-Former w/ VISION-LANGUAGE models (ViT & T5 LLM)

Combined vision-language Transformers, interlinked with a Q-Former (Querying Transformer): BLIP-2! Are the financial resources for pre-training both systems (vision and language) astronomical? Let me introduce you to a clever new training method: BLIP-2. Multimodal Large Language M

From playlist VISION Transformers- new transformer based technology in 2023
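
One core idea of the Q-Former is that a small, fixed set of learned query vectors cross-attends over the frozen image encoder's features. As a result, the language model receives the same number of vectors no matter how many image patches there are. The sketch below shows only that bottleneck, and it assumes keys and values are the raw features. The real Q-Former adds learned projections, self-attention among the queries, and several layers. The query and patch values are made up, and `query_cross_attention` is a hypothetical name.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def query_cross_attention(queries: Matrix, features: Matrix) -> Matrix:
    """Each query returns a softmax-weighted average of the feature vectors.

    Keys and values are the raw features (no learned projections), so the
    output has one row per query regardless of how many features there are.
    """
    d = len(features[0])
    out = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, f)) / math.sqrt(d) for f in features]
        weights = softmax(scores)
        out.append([sum(w * f[j] for w, f in zip(weights, features)) for j in range(d)])
    return out


if __name__ == "__main__":
    queries = [[1.0, 0.0], [0.0, 1.0]]  # 2 hypothetical "learned" queries
    patches = [[0.5, 0.1], [0.2, 0.9], [0.7, 0.3], [0.1, 0.4], [0.6, 0.6]]  # 5 made-up image features
    summary = query_cross_attention(queries, patches)
    print(len(summary), len(summary[0]))  # 2 2
```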

DeepMind x UCL | Deep Learning Lectures | 7/12 | Deep Learning for Natural Language Processing

This lecture, by DeepMind Research Scientist Felix Hill, first discusses the motivation for modelling language with ANNs: language is highly contextual, typically non-compositional and relies on reconciling many competing sources of information. This section also covers Elman's Finding Str

From playlist Learning resources

Transfer learning and Transformer models (ML Tech Talks)

In this session of Machine Learning Tech Talks, Software Engineer from Google Research, Iulia Turc, will walk us through the recent history of natural language processing, including the current state of the art architecture, the Transformer. 0:00 - Intro 1:07 - Encoding text 8:21 - Langu

From playlist ML & Deep Learning

The Narrated Transformer Language Model

AI/ML has been witnessing a rapid acceleration in model improvement in the last few years. The majority of the state-of-the-art models in the field are based on the Transformer architecture. Examples include models like BERT (which when applied to Google Search, resulted in what Google cal

From playlist Language AI & NLP
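
Transformer language models that generate text left to right, such as the GPT family, are built on scaled dot-product attention, softmax(QKᵀ/√d)·V, with a causal mask so that each position sees only itself and earlier positions. The following is a minimal single-head sketch. For brevity, it assumes Q = K = V = x (no learned projection matrices), and `causal_self_attention` is a hypothetical name.

```python
import math
from typing import List

Matrix = List[List[float]]


def causal_self_attention(x: Matrix) -> Matrix:
    """Single-head scaled dot-product self-attention with a causal mask.

    Simplification: queries, keys and values are all the input rows x
    (a real Transformer first multiplies x by learned W_Q, W_K, W_V).
    """
    n, d = len(x), len(x[0])
    out = []
    for i in range(n):
        # Causal mask: position i only scores positions 0..i.
        scores = [sum(a * b for a, b in zip(x[i], x[j])) / math.sqrt(d) for j in range(i + 1)]
        m = max(scores)
        weights = [math.exp(s - m) for s in scores]
        total = sum(weights)
        weights = [w / total for w in weights]
        # Output row i is the weighted average of the visible value rows.
        out.append([sum(weights[j] * x[j][k] for j in range(i + 1)) for k in range(d)])
    return out


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for row in causal_self_attention(tokens):
        print([round(v, 3) for v in row])
```

Because of the mask, changing a later token never changes the outputs at earlier positions. This property lets such models be trained to predict the next token at every position in parallel.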

GPT-1 (basic for understanding GPT-2 and GPT-3)

GPT-3 is a highly capable NLP deep learning model. To understand GPT-3 or later versions, we should first understand its fundamentals. This video covers the basics of GPT: what came before the Transformer, a summary of the Transformer, and the fundamentals of GPT by l

From playlist GPT

Kaggle Reading Group: Bidirectional Encoder Representations from Transformers (aka BERT) | Kaggle

Join Kaggle Data Scientist Rachael as she reads through an NLP paper! Today's paper is "BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding" (Devlin et al., unpublished). You can find a copy here: https://arxiv.org/pdf/1810.04805.pdf SUBSCRIBE: http://www.youtu

From playlist Kaggle Reading Group | Kaggle
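
The BERT paper describes its masked language model objective as follows. It selects 15% of token positions for prediction. Of those, 80% are replaced with [MASK], 10% with a random token, and 10% are left unchanged. The sketch below simplifies the selection step to an independent coin flip per token, and it uses a toy word-level vocabulary instead of WordPiece. The name `mask_tokens` is hypothetical.

```python
import random
from typing import List, Optional, Tuple


def mask_tokens(
    tokens: List[str], vocab: List[str], rng: random.Random, mask_prob: float = 0.15
) -> Tuple[List[str], List[Optional[str]]]:
    """Corrupt tokens BERT-style (hypothetical helper, simplified).

    Returns (inputs, labels): labels[i] holds the original token where the
    model must predict it, and None elsewhere.
    """
    inputs: List[str] = list(tokens)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:  # select roughly 15% of positions
            labels[i] = tok
            r = rng.random()
            if r < 0.8:  # 80%: replace with the mask token
                inputs[i] = "[MASK]"
            elif r < 0.9:  # 10%: replace with a random vocabulary token
                inputs[i] = rng.choice(vocab)
            # remaining 10%: keep the original token
    return inputs, labels


if __name__ == "__main__":
    vocab = ["the", "cat", "sat", "on", "mat", "dog", "ran"]
    sentence = "the cat sat on the mat".split()
    print(mask_tokens(sentence, vocab, random.Random(0)))
```

Keeping some selected tokens unchanged or randomized means the model cannot assume that only [MASK] positions matter. This reduces the mismatch with fine-tuning, where [MASK] never appears.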

Transformer Neural Networks - an Overview!

Let's talk about Recurrent Networks, Transformer Neural Networks, BERT Networks and Sentence Transformers all in one video! Follow me on Medium: https://towardsdatascience.com/likelihood-probability-and-the-math-you-should-know-9bf66db5241b Join us on Discord: https://discord.

From playlist Transformer Neural Networks

GShard: Scaling Giant Models with Conditional Computation and Automatic Sharding (Paper Explained)

Google builds a 600-billion-parameter Transformer for massively multilingual, massive machine translation. Interestingly, the larger model scale does not come from increasing the depth of the Transformer, but from increasing the width of the feedforward layers, combined with a hard routing to pa

From playlist Papers Explained
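
GShard's mixture-of-experts layers route each token with top-2 gating. A softmax over the experts picks the two most probable ones, their two gate values are renormalized to sum to 1, and the token's output is the weighted combination of those two experts. The sketch below makes several simplifying assumptions: scalar inputs, toy lambda "experts", and hand-picked gate logits, which in the model would come from a learned gating layer. It also leaves out GShard's expert capacity limits, its random dispatch of the second expert, and its auxiliary load-balancing loss. All names are hypothetical.

```python
import math
from typing import Callable, List, Tuple


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def top2_route(gate_logits: List[float]) -> List[Tuple[int, float]]:
    """Pick the two most probable experts and renormalize their gate weights to sum to 1."""
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:2]
    total = probs[top[0]] + probs[top[1]]
    return [(i, probs[i] / total) for i in top]


def moe_layer(x: float, gate_logits: List[float], experts: List[Callable[[float], float]]) -> float:
    """Only the two selected experts run; their outputs are mixed by the gate weights."""
    return sum(w * experts[i](x) for i, w in top2_route(gate_logits))


if __name__ == "__main__":
    # Toy stand-ins: real experts are feedforward networks, and the logits
    # would be computed from x by a learned gating layer.
    experts = [lambda x: 2 * x, lambda x: x + 10, lambda x: -x, lambda x: x * x]
    logits = [0.1, 2.0, -1.0, 1.5]
    print(top2_route(logits))  # experts 1 and 3 are selected
    print(moe_layer(3.0, logits, experts))
```

Because each token activates only two experts, adding more experts increases the parameter count without a proportional increase in per-token computation.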

Unit 7 AP5 End of Unit review Q8

From playlist Mr Ronald's Year 10 Groups