Computer algebra | Genetic programming | Regression analysis

Symbolic regression

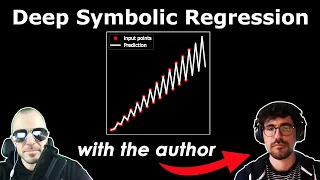

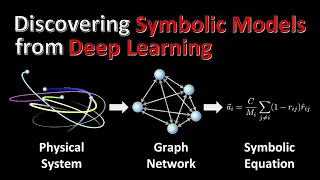

Symbolic regression (SR) is a type of regression analysis that searches the space of mathematical expressions to find the model that best fits a given dataset, both in terms of accuracy and simplicity. No particular model is provided as a starting point for symbolic regression. Instead, initial expressions are formed by randomly combining mathematical building blocks such as mathematical operators, analytic functions, constants, and state variables. Usually, a subset of these primitives will be specified by the person operating it, but that's not a requirement of the technique. The symbolic regression problem for mathematical functions has been tackled with a variety of methods, including recombining equations most commonly using genetic programming, as well as more recent methods utilizing Bayesian methods and neural networks. Another non-classical alternative method to SR is called Universal Functions Originator (UFO), which has a different mechanism, search-space, and building strategy. Further methods such as Exact Learning attempt to transform the fitting problem into a moments problem in a natural function space, usually built around generalizations of the Meijer-G function. By not requiring a priori specification of a model, symbolic regression isn't affected by human bias, or unknown gaps in domain knowledge. It attempts to uncover the intrinsic relationships of the dataset, by letting the patterns in the data itself reveal the appropriate models, rather than imposing a model structure that is deemed mathematically tractable from a human perspective. The fitness function that drives the evolution of the models takes into account not only error metrics (to ensure the models accurately predict the data), but also special complexity measures, thus ensuring that the resulting models reveal the data's underlying structure in a way that's understandable from a human perspective. This facilitates reasoning and favors the odds of getting insights about the data-generating system. It has been proven that symbolic regression is an NP-hard problem, in the sense that one cannot always find the best possible mathematical expression to fit to a given dataset in polynomial time. (Wikipedia).