Graph and Subgraph Sparsification and its Implications to Linear System Solving... - Alex Kolla

Alexandra Kolla, Institute for Advanced Study, November 10, 2009. I will first give an overview of several constructions of graph sparsifiers and their properties. I will then present a method of sparsifying a subgraph W of a graph G with an optimal number of edges and talk about the implicatio

From playlist Mathematics

Recursively Defined Sets - An Intro

Recursively defined sets are an important concept in mathematics, computer science, and other fields because they provide a framework for defining complex objects or structures in a simple, iterative way. By starting with a few basic objects and applying a set of rules repeatedly, we can g

From playlist All Things Recursive - with Math and CS Perspective

Structured Regularization Summer School - C. Fernandez-Granda - 20/06/2017

Carlos Fernandez-Granda (NYU): A sampling theorem for robust deconvolution. Abstract: In the 70s and 80s geophysicists proposed using l1-norm regularization for deconvolution problems in the context of reflection seismology. Since then such methods have had a great impact in high-dimensiona

From playlist Structured Regularization Summer School - 19-22/06/2017

NIPS 2011 Sparse Representation & Low-rank Approximation Workshop: Dictionary-Dependent Penalties...

Sparse Representation and Low-rank Approximation Workshop at NIPS 2011 Invited Talk: Dictionary-Dependent Penalties for Sparse Estimation and Rank Minimization by David Wipf, University of California at San Diego Abstract: In the majority of recent work on sparse estimation algorit

From playlist NIPS 2011 Sparse Representation & Low-rank Approx Workshop

A Compressed Overview of Sparsity

This talk presents a high-level overview of compressed sensing, especially as it relates to engineering applied mathematics. We provide context for sparsity and compression, followed by good rules of thumb and key ingredients for applying compressed sensing.

From playlist Research Abstracts from Brunton Lab

Sebastian Pokutta: "Structured ML Training via Conditional Gradients"

Deep Learning and Combinatorial Optimization 2021 "Structured ML Training via Conditional Gradients" Sebastian Pokutta - Konrad-Zuse-Zentrum für Informationstechnik (ZIB), Department of Mathematics Abstract: Conditional Gradient methods are an important class of methods to minimize (non-

From playlist Deep Learning and Combinatorial Optimization 2021

Structured Regularization Summer School - A.Hansen - 1/4 - 19/06/2017

Anders Hansen (Cambridge) Lectures 1 and 2: Compressed Sensing: Structure and Imaging Abstract: The above heading is the title of a new book to be published by Cambridge University Press. In these lectures I will cover some of the main issues discussed in this monograph/textbook. In par

From playlist Structured Regularization Summer School - 19-22/06/2017

30th Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series Talk

Date: Wednesday, June 30, 2021, 10:00am Eastern Time Zone (US & Canada) Speaker: Leon Bungert Title: A Bregman Learning Framework for Sparse Neural Networks Abstract: I will present a novel learning framework based on stochastic Bregman iterations. It allows training sparse neural netwo

From playlist Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series

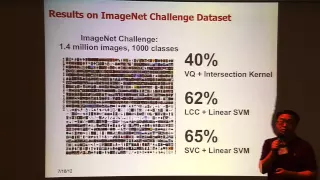

Kai Yu: "Image Classification Using Sparse Coding, Pt. 2"

Graduate Summer School 2012: Deep Learning, Feature Learning "Image Classification Using Sparse Coding, Pt. 2" Kai Yu, Baidu Inc. Institute for Pure and Applied Mathematics, UCLA July 18, 2012 For more information: https://www.ipam.ucla.edu/programs/summer-schools/graduate-summer-school

From playlist GSS2012: Deep Learning, Feature Learning

Structured Regularization Summer School - A.Hansen - 2/4 - 19/06/2017

Anders Hansen (Cambridge) Lectures 1 and 2: Compressed Sensing: Structure and Imaging Abstract: The above heading is the title of a new book to be published by Cambridge University Press. In these lectures I will cover some of the main issues discussed in this monograph/textbook. In par

From playlist Structured Regularization Summer School - 19-22/06/2017

Yonina Eldar - Model Based Deep Learning with Application to Super Resolution - IPAM at UCLA

Recorded 27 October 2022. Yonina Eldar of the Weizmann Institute of Science presents "Model Based Deep Learning with Application to Super Resolution" at IPAM's Mathematical Advances for Multi-Dimensional Microscopy Workshop. Abstract: Deep neural networks provide unprecedented performance

From playlist 2022 Mathematical Advances for Multi-Dimensional Microscopy

Deep Learning Lecture 2.5 - Regularization

Deep Learning Lecture - Estimator Theory 4 - L2 / Ridge regularization - Sparsity-Inducing regularization - Practical workflow for ML training and validation

From playlist Deep Learning Lecture

TeraLasso for sparse time-varying image modeling - Hero - Workshop 2 - CEB T1 2019

Alfred Hero (Univ. of Michigan) / 15.03.2019 TeraLasso for sparse time-varying image modeling. We propose a new ultrasparse graphical model for representing time varying images, and other multiway data, based on a Kronecker sum representation of the spatio-temporal inverse covariance ma

From playlist 2019 - T1 - The Mathematics of Imaging

Ulugbek Kamilov: "Computational Imaging: Reconciling Models and Learning"

Deep Learning and Medical Applications 2020 "Computational Imaging: Reconciling Models and Learning" Ulugbek Kamilov, Washington University in St. Louis Abstract: There is a growing need in biological, medical, and materials imaging research to recover information lost during data acquis

From playlist Deep Learning and Medical Applications 2020

Structured Regularization Summer School - A.Hansen - 4/4 - 20/06/2017

Anders Hansen (Cambridge) Lectures 1 and 2: Compressed Sensing: Structure and Imaging Abstract: The above heading is the title of a new book to be published by Cambridge University Press. In these lectures I will cover some of the main issues discussed in this monograph/textbook. In par

From playlist Structured Regularization Summer School - 19-22/06/2017

Inaugural Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series Talk

Date: Wednesday, October 14, 10:00am EDT Speaker: Michael Friedlander, University of British Columbia Title: Polar deconvolution of mixed signals Abstract: The signal demixing problem seeks to separate the superposition of multiple signals into its constituent components. We model the s

From playlist Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series

Stéphane Mallat - Multiscale Models for Image Classification and Physics with Deep Networks

Abstract: Approximating high-dimensional functionals with low-dimensional models is a central issue of machine learning, image processing, physics and mathematics. Deep convolutional networks are able to approximate such functionals over a wide range of applications. This talk shows that t

From playlist 2nd workshop Nokia-IHES / AI: what's next?

Factorization-based Sparse Solvers and Preconditioners, Lecture 3

Lecture three by Xiaoye Sherry Li (Lawrence Berkeley National Laboratory) on factorization-based sparse solvers and preconditioners.

From playlist Gene Golub SIAM Summer School Videos

Structured Regularization Summer School - A.Hansen - 3/4 - 20/06/2017

Anders Hansen (Cambridge) Lectures 1 and 2: Compressed Sensing: Structure and Imaging Abstract: The above heading is the title of a new book to be published by Cambridge University Press. In these lectures I will cover some of the main issues discussed in this monograph/textbook. In par

From playlist Structured Regularization Summer School - 19-22/06/2017