Hybrid sparse stochastic processes and the resolution of (...) - Unser - Workshop 2 - CEB T1 2019

Michael Unser (EPFL) / 12.03.2019. Hybrid sparse stochastic processes and the resolution of linear inverse problems. Sparse stochastic processes are continuous-domain processes that are specified as solutions of linear stochastic differential equations driven by white Lévy noise. These p

From playlist 2019 - T1 - The Mathematics of Imaging

We propose a sparse regression method capable of discovering the governing partial differential equation(s) of a given system from time-series measurements in the spatial domain. The regression framework relies on sparsity-promoting techniques to select the nonlinear and partial derivative

From playlist Research Abstracts from Brunton Lab

"Data-Driven Optimization in Pricing and Revenue Management" by Arnoud den Boer - Lecture 1

In this course we will study data-driven decision problems: optimization problems for which the relation between decision and outcome is unknown upfront, and thus has to be learned on-the-fly from accumulating data. This type of problem has an intrinsic tension between statistical goals a

From playlist Thematic Program on Stochastic Modeling: A Focus on Pricing & Revenue Management

Introduction to the paper https://arxiv.org/abs/2002.06707

From playlist Research

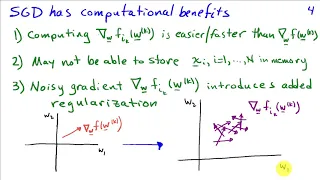

Silvia Villa - Generalization properties of multiple passes stochastic gradient method

The stochastic gradient method has become an algorithm of choice in machine learning because of its simplicity and low computational cost, especially when dealing with big data sets. Despite its widespread use, the generalization properties of the variants of stochastic

From playlist Schlumberger workshop - Computational and statistical trade-offs in learning

"Data-Driven Optimization in Pricing and Revenue Management" by Arnoud den Boer - Lecture 3

In this course we will study data-driven decision problems: optimization problems for which the relation between decision and outcome is unknown upfront, and thus has to be learned on-the-fly from accumulating data. This type of problem has an intrinsic tension between statistical goals a

From playlist Thematic Program on Stochastic Modeling: A Focus on Pricing & Revenue Management

From playlist COMP0168 (2020/21)

Stochastic Gradient Descent: where optimization meets machine learning- Rachel Ward

2022 Program for Women and Mathematics: The Mathematics of Machine Learning. Topic: Stochastic Gradient Descent: where optimization meets machine learning. Speaker: Rachel Ward. Affiliation: University of Texas, Austin. Date: May 26, 2022. Stochastic Gradient Descent (SGD) is the de facto op

From playlist Mathematics

The KPZ Universality Class and Equation - Ivan Corwin

The KPZ Universality Class and Equation. Ivan Corwin, Courant Institute of Mathematical Sciences, New York University. February 11, 2011. Analysis/Mathematical Physics Seminar. The Gaussian central limit theorem says that for a wide class of stochastic systems, the bell curve (Gaussian distribution) des

From playlist Mathematics

Ivan Guo: Stochastic Optimal Transport in Financial Mathematics

Abstract: In recent years, the field of optimal transport has attracted the attention of many high-profile mathematicians and has found a wide range of applications. In this talk we will discuss some of its recent applications in financial mathematics, particularly on the problems of model calibra

From playlist SMRI Seminars

Terry Lyons: Modelling Diffusive Systems

This lecture was held at the University of Oslo on May 24, 2007, and was part of the Abel Prize Lectures in connection with the Abel Prize Week celebrations. Program for the Abel Lectures 2007: 1. “A Short History of Large Deviations” by Srinivasa Varadhan, Abel Laureate 2007, Courant

From playlist Abel Lectures

Constructing a solution of the 2D Kardar-Parisi-Zhang equation (Lecture - 03) by Sourav Chatterjee

Infosys-ICTS Ramanujan Lectures: Some Open Questions About Scaling Limits in Probability Theory. Speaker: Sourav Chatterjee (Stanford University, California, USA). Date and time: 14 January 2019 to 18 January 2019. Venue: Madhava Lecture Hall, ICTS campus. Lecture 1: Yang-Mills for mathemat

From playlist Infosys-ICTS Ramanujan Lectures

Fourier transforms: heat equation

Free ebook https://bookboon.com/en/partial-differential-equations-ebook How to solve the heat equation via Fourier transforms. An example is discussed and solved.

From playlist Partial differential equations

Ohad Shamir - Trade-offs in Distributed Learning

In many large-scale applications, learning must be done on training data which is distributed across multiple machines. This presents an important challenge, with multiple trade-offs between optimization accuracy, statistical performance, communication

From playlist Schlumberger workshop - Computational and statistical trade-offs in learning

Peter Friz: Some examples of homogenization related rough paths

Find this video and other talks given by worldwide mathematicians on CIRM's Audiovisual Mathematics Library: http://library.cirm-math.fr. And discover all its functionalities: - Chapter markers and keywords to watch the parts of your choice in the video - Videos enriched with abstracts, b

From playlist SPECIAL 7th European congress of Mathematics Berlin 2016.

Lecture Lorenzo Pareschi: Uncertainty quantification for kinetic equations III

The lecture was held within the framework of the Hausdorff Trimester Program: Kinetic Theory. Abstract: In these lectures we give an overview of some recent results in the field of uncertainty quantification for kinetic equations with random inputs. Uncertainties may be due to various reasons, like lack of kn

From playlist Summer School: Trails in kinetic theory: foundational aspects and numerical methods

Quentin Berthet: Learning with differentiable perturbed optimizers

Machine learning pipelines often rely on optimization procedures to make discrete decisions (e.g. sorting, picking closest neighbors, finding shortest paths or optimal matchings). Although these discrete decisions are easily computed in a forward manner, they cannot be used to modify model

From playlist Control Theory and Optimization

Plamen Turkedjiev: Least squares regression Monte Carlo for approximating BSDEs and semilinear PDEs

Abstract: In this lecture, we shall discuss the key steps involved in the use of least squares regression for approximating the solution to BSDEs. This includes how to obtain explicit error estimates, and how these error estimates can be used to tune the parameters of the numerical scheme

From playlist Probability and Statistics