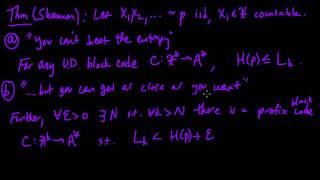

(IC 3.9) Source coding theorem (optimal lossless compression)

Proof of Shannon's "Source coding theorem", characterizing the entropy as the best possible lossless compression (using block codes) for a discrete memoryless source. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding

Shannon Nyquist Sampling Theorem

Follow on Twitter: @eigensteve Brunton's website: https://eigensteve.com This video discusses the famous Shannon-Nyquist sampling theorem, which discusses limits on signal reconstruction given how fast it is sampled and the frequency content of the signal. For original papers: Shannon

From playlist Sparsity and Compression [Data-Driven Science and Engineering]

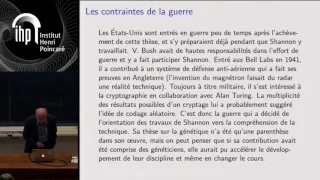

Shannon 100 - 26/10/2016 - Olivier RIOUL

Shannon’s Formula Wlog(1+SNR): A Historical Perspective Olivier Rioul (Télécom-ParisTech) As is well known, the milestone event that founded the field of information theory is the publication of Shannon’s 1948 paper entitled "A Mathematical Theory of Communication". This article brings t

From playlist Shannon 100

(IC 3.10) Relative entropy as the mismatch inefficiency

By considering the inefficiency due to using the wrong probability distribution to design a code using Shannon coding, we arrive at the relative entropy. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding

(IC 3.5) Bounds on optimal expected length

Using Shannon coding, one can get within 1 of the entropy. This gives an upper bound on the expected codeword length of an optimal code. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding

(IC 5.10) Generalizing arithmetic coding to non-i.i.d. models

Arithmetic coding can accommodate essentially any probabilistic model of the source, in a very natural way. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding

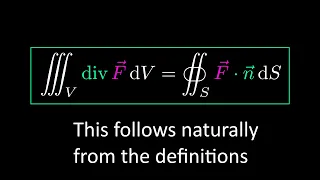

26: Divergence Theorem - Valuable Vector Calculus

Video explaining the definition of divergence: https://youtu.be/UEU9dLgmBH4 Video on surface integrals: https://youtu.be/hVBoEEJlNuI The divergence theorem, also called Gauss's theorem, is a natural consequence of the definition of divergence. In this video, we'll see an intuitive explana

From playlist Valuable Vector Calculus

(IC 3.7) Block codes for compression

Introduction to the idea of encoding a sequence of source symbols using blocks, rather than a single symbol at a time. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding

Cryptography and Network Security by Prof. D. Mukhopadhyay, Department of Computer Science and Engineering, IIT Kharagpur. For more details on NPTEL visit http://nptel.iitm.ac.in

From playlist Computer - Cryptography and Network Security

Huffman Codes: An Information Theory Perspective

Huffman Codes are one of the most important discoveries in the field of data compression. When you first see them, they almost feel obvious in hindsight, mainly due to how simple and elegant the algorithm ends up being. But there's an underlying story of how they were discovered by Huffman

From playlist Data Compression

Order, Entropy, Information, and Compression (Lecture 2) by Dov Levine

PROGRAM ENTROPY, INFORMATION AND ORDER IN SOFT MATTER ORGANIZERS: Bulbul Chakraborty, Pinaki Chaudhuri, Chandan Dasgupta, Marjolein Dijkstra, Smarajit Karmakar, Vijaykumar Krishnamurthy, Jorge Kurchan, Madan Rao, Srikanth Sastry and Francesco Sciortino DATE: 27 August 2018 to 02 Novemb

From playlist Entropy, Information and Order in Soft Matter

Sergio Verdu - Information Theory Today

Founded by Claude Shannon in 1948, information theory has taken on renewed vibrancy with technological advances that pave the way for attaining the fundamental limits of communication channels and information sources. Increasingly playing a role as a design driver, information theory is b

From playlist NOKIA-IHES Workshop

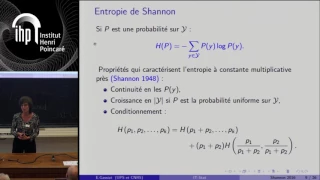

Shannon 100 - 26/10/2016 - Elisabeth GASSIAT

Entropie, compression et statistique Elisabeth Gassiat (Université de Paris-Sud) Claude Shannon est l'inventeur de la théorie de l'information. Il a introduit la notion d'entropie comme mesure de l'information contenue dans un message vu comme provenant d'une source stochastique et démon

From playlist Shannon 100

La théorie l’information sans peine - Bourbaphy - 17/11/18

Olivier Rioul (Telecom Paris Tech) / 17.11.2018 La théorie l’information sans peine ---------------------------------- Vous pouvez nous rejoindre sur les réseaux sociaux pour suivre nos actualités. Facebook : https://www.facebook.com/InstitutHenriPoincare/ Twitter : https://twitter.com

From playlist Bourbaphy - 17/11/18 - L'information

Shannon 100 - 28/10/2016 - Robert G. GALLAGER

From playlist Shannon 100

Shannon 100 - 27/10/2016 - Gérard BATTAIL

En suivant Shannon : de la technique à la compréhension de la vie Gérard Battail (Télécom ParisTech ER) L’application de la théorie de l’information à la biologie est le thème principal de cette conférence. Shannon, étudiant au MIT, a soutenu en 1940 une thèse intitulée An algebra for th

From playlist Shannon 100

IMS Public Lecture: Trends in Wireless Communications

Sergio Verdú, Princeton University

From playlist Public Lectures

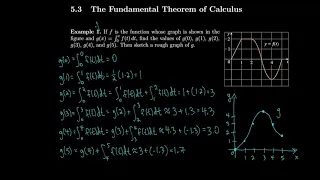

Calculus 5.3 The Fundamental Theorem of Calculus

My notes are available at http://asherbroberts.com/ (so you can write along with me). Calculus: Early Transcendentals 8th Edition by James Stewart

From playlist Calculus

Mini-course on information by Rajaram Nityananda (Part 1)

Information processing in biological systems URL: https://www.icts.res.in/discussion_meeting/ipbs2016/ DATES: Monday 04 Jan, 2016 - Thursday 07 Jan, 2016 VENUE: ICTS campus, Bangalore From the level of networks of genes and proteins to the embryonic and neural levels, information at var

From playlist Information processing in biological systems