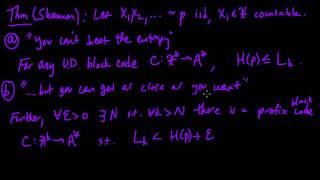

(IC 3.9) Source coding theorem (optimal lossless compression)

Proof of Shannon's "Source coding theorem", characterizing the entropy as the best possible lossless compression (using block codes) for a discrete memoryless source. A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F

From playlist Information theory and Coding
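
The quantity the source coding theorem singles out is the entropy H(X) = -Σ p(x) log2 p(x) of a discrete memoryless source, in bits per symbol. No lossless scheme can average fewer bits per symbol. Below is a minimal sketch of that formula. The function name `entropy` and the three-symbol source are illustrative assumptions, not taken from the video.

```python
import math

def entropy(probs):
    """Entropy in bits/symbol of a discrete memoryless source.

    probs: symbol probabilities (must sum to 1). Zero-probability
    symbols contribute nothing, following the convention 0*log 0 = 0.
    """
    if abs(sum(probs) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical 3-symbol source: P(a)=1/2, P(b)=1/4, P(c)=1/4.
H = entropy([0.5, 0.25, 0.25])
print(H)  # 1.5
```

Because these probabilities are powers of 1/2, the prefix code a→0, b→10, c→11 meets the bound exactly: 0.5·1 + 0.25·2 + 0.25·2 = 1.5 bits/symbol. For general sources the theorem only guarantees rates approaching H with long blocks.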

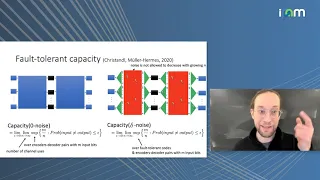

Matthias Christandl: "Fault-tolerant Coding for Quantum Communication"

Entropy Inequalities, Quantum Information and Quantum Physics 2021. "Fault-tolerant Coding for Quantum Communication", Matthias Christandl, University of Copenhagen. Abstract: Designing encoding and decoding circuits to reliably send messages over many uses of a noisy channel is a central …

From playlist Entropy Inequalities, Quantum Information and Quantum Physics 2021

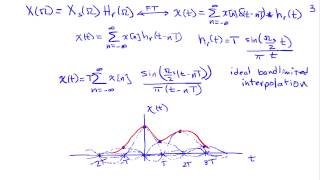

Reconstruction and the Sampling Theorem

http://AllSignalProcessing.com for more great signal-processing content: ad-free videos, concept/screenshot files, quizzes, MATLAB and data files. Analysis of the conditions under which a continuous-time signal can be reconstructed from its samples, including ideal bandlimited interpolation …

From playlist Sampling and Reconstruction of Signals
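
Ideal bandlimited interpolation rebuilds a signal sampled every T seconds as x(t) = Σ_n x[n] sinc((t - nT)/T), with sinc(u) = sin(πu)/(πu). This is valid when x contains no frequencies at or above fs/2 = 1/(2T). A minimal sketch, assuming a finite, hypothetical set of samples. The infinite sum is therefore truncated, and values between sample instants are only approximate. `sinc` and `reconstruct` are illustrative names.

```python
import math

def sinc(u):
    """Normalized sinc: sin(pi*u) / (pi*u), with sinc(0) = 1."""
    if u == 0:
        return 1.0
    return math.sin(math.pi * u) / (math.pi * u)

def reconstruct(samples, n0, T, t):
    """Truncated ideal (sinc) interpolation evaluated at time t.

    samples[i] is assumed to equal x((n0 + i) * T). Only finitely many
    samples are available, so this approximates the infinite series.
    """
    return sum(x_n * sinc((t - (n0 + i) * T) / T) for i, x_n in enumerate(samples))

# Hypothetical example: a 1 Hz cosine sampled at fs = 10 Hz (well above 2 Hz).
T = 0.1
n0 = -200
samples = [math.cos(2 * math.pi * 1.0 * n * T) for n in range(n0, 201)]

# Halfway between two samples: compare the reconstruction with the true value.
print(reconstruct(samples, n0, T, 0.05), math.cos(2 * math.pi * 0.05))
```

At sample instants every sinc term except one vanishes, so the sum returns the stored sample. Between samples the error shrinks as more samples are included.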

Aliasing and the Sampling Theorem Simplified

http://AllSignalProcessing.com for free e-book on frequency relationships and more great signal processing content, including concept/screenshot files, quizzes, MATLAB and data files. A presentation of aliasing, the sampling theorem, and the Fourier transform representation of a sampled signal …

From playlist Sampling and Reconstruction of Signals
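
Aliasing means that a real sinusoid at f Hz, sampled at fs Hz, produces exactly the same samples as one at |f - k·fs| for any integer k. Its apparent frequency therefore folds into [0, fs/2]. A minimal sketch of that folding rule. `alias_frequency` and the 7 Hz / 10 Hz numbers are hypothetical.

```python
import math

def alias_frequency(f, fs):
    """Apparent frequency, in [0, fs/2], of a real sinusoid at f Hz sampled at fs Hz."""
    f_mod = f % fs                # remove whole multiples of fs
    return min(f_mod, fs - f_mod)  # fold the upper half of [0, fs) onto [0, fs/2]

fs = 10.0
print(alias_frequency(7.0, fs))   # 3.0: above fs/2 = 5 Hz, so it folds down
print(alias_frequency(12.0, fs))  # 2.0

# The samples of a 7 Hz and a 3 Hz cosine are indistinguishable at fs = 10 Hz.
same = all(
    abs(math.cos(2 * math.pi * 7.0 * n / fs) - math.cos(2 * math.pi * 3.0 * n / fs)) < 1e-9
    for n in range(50)
)
print(same)  # True
```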

This video is a brief introduction to linear codes: dimensions, G (generator matrix), H (parity-check matrix), and their forms. It also gives an example of how to convert between G and H. Here is the formal definition of a linear code: A linear code of dimension k and length n over a field …

From playlist Cryptography and Coding Theory
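
When G is in systematic form G = [I_k | P], a matching parity-check matrix is H = [-P^T | I_{n-k}], because G H^T = -P + P = 0. Over the binary field, -P^T = P^T. A minimal sketch of the G to H conversion, assuming a binary code and a hypothetical P taken from a [7,4] Hamming code. The helper names are illustrative and not the video's notation.

```python
def identity(m):
    """m x m identity matrix as a list of lists."""
    return [[1 if i == j else 0 for j in range(m)] for i in range(m)]

def transpose(A):
    return [list(row) for row in zip(*A)]

def systematic_G_H(P):
    """Binary code: from the k x (n-k) matrix P, build G = [I_k | P] and H = [P^T | I_{n-k}]."""
    k, r = len(P), len(P[0])
    Ik, Ir, PT = identity(k), identity(r), transpose(P)
    G = [Ik[i] + P[i] for i in range(k)]
    H = [PT[i] + Ir[i] for i in range(r)]
    return G, H

def mat_mul_mod2(A, B):
    """Matrix product over GF(2)."""
    return [[sum(a * b for a, b in zip(row, col)) % 2 for col in zip(*B)] for row in A]

# Hypothetical example: P for a [7,4] Hamming code (k = 4, n - k = 3).
P = [[1, 1, 0],
     [1, 0, 1],
     [0, 1, 1],
     [1, 1, 1]]
G, H = systematic_G_H(P)
print(mat_mul_mod2(G, transpose(H)))  # 4 x 3 all-zero matrix: every row of G passes H's checks
```

Over a non-binary field, the sketch would need the entries of P^T negated modulo the field characteristic.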

(IC 1.4) Source-channel separation

The source-encoder-channel-decoder-destination pipeline. Decoupling the combined encoding problem into compression (source coding) and error-correction (channel coding) via the source-channel separation theorem. A playlist of these videos is available at: http://www.youtube.com/playlist?

From playlist Information theory and Coding
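
In the textbook setting (discrete memoryless source and channel, one source symbol per channel use), the separation theorem says reliable transmission is possible when the source entropy H is below the channel capacity C, and impossible when H > C. Compressing to about H bits and then channel-coding separately loses nothing. A minimal sketch of that check, assuming a hypothetical Bernoulli(0.1) source and a binary symmetric channel with 5% bit flips.

```python
import math

def h2(p):
    """Binary entropy function in bits."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def bsc_capacity(p):
    """Capacity (bits per channel use) of a binary symmetric channel with crossover probability p."""
    return 1.0 - h2(p)

# Hypothetical scenario, one source symbol per channel use.
source_entropy = h2(0.1)        # Bernoulli(0.1) source: about 0.469 bits/symbol
capacity = bsc_capacity(0.05)   # BSC(0.05): about 0.714 bits/use
print(source_entropy < capacity)  # True: separate source and channel codes suffice
```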

Notation and Basic Signal Properties

http://AllSignalProcessing.com for free e-book on frequency relationships and more great signal processing content, including concept/screenshot files, quizzes, MATLAB and data files. Signals as functions, discrete- and continuous-time signals, sampling, images, periodic signals, displaying …

From playlist Introduction and Background
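
One subtlety of periodic signals under sampling: a discrete-time sinusoid x[n] = cos(2πkn/N) with integers k and N repeats every N / gcd(k, N) samples. By contrast, a sampled cosine whose frequency is not a rational multiple of the sampling rate never repeats exactly. A minimal sketch. `fundamental_period` is a hypothetical helper name.

```python
import math

def fundamental_period(k, N):
    """Smallest P > 0 with cos(2*pi*k*(n+P)/N) == cos(2*pi*k*n/N) for all n (integers k, N > 0).

    P must make k*P/N an integer, so P = N / gcd(k, N).
    """
    return N // math.gcd(k, N)

print(fundamental_period(3, 12))  # 4
print(fundamental_period(5, 12))  # 12
```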

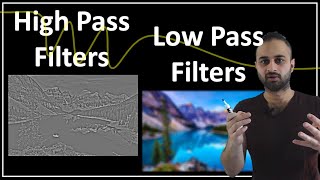

Low Pass Filters & High Pass Filters: Data Science Concepts

What is a low pass filter? What is a high pass filter? Sobel Filter: https://en.wikipedia.org/wiki/Sobel_operator

From playlist Time Series Analysis
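
A low-pass filter keeps slow variation and attenuates fast variation. A high-pass filter does the opposite. A minimal time-series sketch, assuming a causal moving average as the low-pass filter and "signal minus moving average" as the complementary high-pass. This is one simple choice made for illustration, not necessarily the filter used in the video.

```python
def low_pass(x, w):
    """Causal moving average over the last w samples (fewer at the start): a simple low-pass filter."""
    y = []
    for n in range(len(x)):
        window = x[max(0, n - w + 1): n + 1]
        y.append(sum(window) / len(window))
    return y

def high_pass(x, w):
    """Complementary high-pass: the part of x that the moving average removes."""
    return [xn - yn for xn, yn in zip(x, low_pass(x, w))]

slow = [5.0] * 6                            # constant: pure low-frequency content
fast = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0]    # alternating: highest discrete-time frequency
print(high_pass(slow, 2))  # [0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
print(low_pass(fast, 2))   # [1.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

The constant series passes straight through the low-pass filter and vanishes in the high-pass output. After the first sample, the alternating series does the reverse.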

(IC 1.1) Information theory and Coding - Outline of topics

A playlist of these videos is available at: http://www.youtube.com/playlist?list=PLE125425EC837021F Overview of central topics in Information theory and Coding. Compression (source coding) theory: Source coding theorem, Kraft-McMillan inequality, Rate-distortion theorem. Error-correction …

From playlist Information theory and Coding
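
The Kraft-McMillan inequality says a binary prefix code (and, by McMillan, any uniquely decodable code) with codeword lengths l_1, …, l_m exists only if Σ 2^(-l_i) ≤ 1. Conversely, any lengths meeting the bound can be realized by a prefix code. A minimal sketch, assuming the canonical construction (assign codewords in order of increasing length) as the way to realize such a code. The helper names are hypothetical.

```python
from fractions import Fraction

def kraft_sum(lengths, D=2):
    """Exact value of sum(D**(-l)) over the codeword lengths."""
    return sum(Fraction(1, D ** l) for l in lengths)

def canonical_prefix_code(lengths):
    """Build a binary prefix code with the given lengths (requires Kraft sum <= 1)."""
    if kraft_sum(lengths) > 1:
        raise ValueError("Kraft inequality violated: no prefix code with these lengths")
    order = sorted(range(len(lengths)), key=lambda i: lengths[i])
    codes = [None] * len(lengths)
    code, prev_len = -1, 0
    for i in order:
        # Next codeword: increment, then left-shift to reach the new length.
        code = (code + 1) << (lengths[i] - prev_len)
        prev_len = lengths[i]
        codes[i] = format(code, f"0{prev_len}b")
    return codes

print(kraft_sum([1, 2, 3, 3]))              # 1
print(canonical_prefix_code([1, 2, 3, 3]))  # ['0', '10', '110', '111']
```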

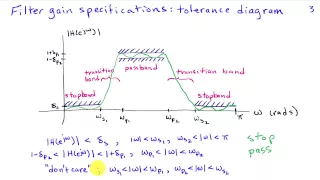

Introduction to Frequency Selective Filtering

http://AllSignalProcessing.com for free e-book on frequency relationships and more great signal processing content, including concept/screenshot files, quizzes, MATLAB and data files. Separation of signals based on frequency content using lowpass, highpass, bandpass, and other filters. Filter g…

From playlist Introduction to Filter Design
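
Whether an FIR filter is lowpass, highpass, or bandpass can be read off its magnitude response |H(e^{jω})| = |Σ_n h[n] e^{-jωn}| for 0 ≤ ω ≤ π. A minimal sketch that evaluates this sum directly. The three tiny filters are hypothetical examples chosen for illustration, not designs from the video.

```python
import cmath
import math

def freq_response(h, omega):
    """DTFT of a finite impulse response h at radian frequency omega."""
    return sum(hn * cmath.exp(-1j * omega * n) for n, hn in enumerate(h))

lowpass = [0.5, 0.5]         # |H| = |cos(omega/2)|: passes DC, rejects omega = pi
highpass = [0.5, -0.5]       # |H| = |sin(omega/2)|: rejects DC, passes omega = pi
bandpass = [0.5, 0.0, -0.5]  # |H| = |sin(omega)|: rejects DC and pi, passes omega = pi/2

for omega in (0.0, math.pi / 2, math.pi):
    mags = [abs(freq_response(h, omega)) for h in (lowpass, highpass, bandpass)]
    print(f"omega={omega:.3f}  lp={mags[0]:.3f}  hp={mags[1]:.3f}  bp={mags[2]:.3f}")
```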

Nexus Trimester - Joerg Kliewer (New Jersey Institute of Technology)

Lossy Compression with Privacy Constraints: Optimality of Polar Codes. Joerg Kliewer (New Jersey Institute of Technology), April 01, 2016.

From playlist Nexus Trimester - 2016 - Secrecy and Privacy Theme

Polynomial Maps With Noisy Input-Distributions - Jop Briet

Workshop on Additive Combinatorics and Algebraic Connections. Topic: Polynomial Maps With Noisy Input-Distributions. Speaker: Jop Briet. Affiliation: Centrum Wiskunde & Informatica. Date: October 25, 2022. A problem from theoretical computer science posed by Buhrman asks to show that a certain …

From playlist Mathematics

Measurements vs. Bits: Compressed Sensors and Info Theory

October 18, 2006 lecture by Dror Baron for the Stanford University Computer Systems Colloquium (EE 380). Dror Baron discusses the numerous rich insights information theory has to offer Compressed Sensing (CS), an emerging field based on the revelation that optimization routines can reca…

From playlist Course | Computer Systems Laboratory Colloquium (2006-2007)

Capacity Approaching Coding for Low Noise (...) - D. Touchette - Main Conference - CEB T3 2017

Dave Touchette (Waterloo), 12.12.2017. Title: Capacity Approaching Coding for Low Noise Interactive Quantum Communication. Abstract: We consider the problem of implementing two-party interactive quantum communication over noisy channels, a necessary endeavor if we wish to fully reap quantum …

From playlist 2017 - T3 - Analysis in Quantum Information Theory - CEB Trimester

Nexus trimester - Michael Langberg (SUNY at Buffalo)

A reductionist view of network information theory. Michael Langberg (SUNY at Buffalo), February 08, 2016. Abstract: The network information theory literature includes beautiful results describing codes and performance limits for many different networks. While common tools and themes are evident …

From playlist Nexus Trimester - 2016 - Distributed Computation and Communication Theme

Determining Signal Similarities

Find a signal of interest within another signal, and align signals by determining the delay between them using Signal Processing Toolbox™. For more on Signal Processing Toolbox …

From playlist Signal Processing and Communications
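
The usual way to find the delay between two signals is to pick the lag that maximizes their cross-correlation Σ_n x[n]·y[n + d]. The video does this in MATLAB's Signal Processing Toolbox. What follows is a plain-Python sketch of the underlying idea, not the toolbox's API. The function name, the lag search range, and the test signals are hypothetical.

```python
def estimate_delay(x, y, max_lag):
    """Lag d in [-max_lag, max_lag] maximizing sum_n x[n] * y[n + d].

    A positive d means y is (approximately) x delayed by d samples.
    """
    def corr(d):
        return sum(x[n] * y[n + d] for n in range(len(x)) if 0 <= n + d < len(y))
    return max(range(-max_lag, max_lag + 1), key=corr)

x = [0, 0, 1, 2, 3, 2, 1, 0, 0, 0, 0, 0]
y = [0, 0, 0] + x[:-3]   # hypothetical: x delayed by 3 samples
print(estimate_delay(x, y, 5))  # 3
```

This brute-force search costs O(len(x) · max_lag). For long signals, the cross-correlation is normally computed with FFTs instead.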

Shannon 100 - 26/10/2016 - Olivier RIOUL

Shannon’s Formula W log(1+SNR): A Historical Perspective. Olivier Rioul (Télécom-ParisTech). As is well known, the milestone event that founded the field of information theory is the publication of Shannon’s 1948 paper entitled "A Mathematical Theory of Communication". This article brings t…

From playlist Shannon 100
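
Shannon's formula gives the capacity of a band-limited additive white Gaussian noise channel as C = W log2(1 + SNR) bits per second. Here W is the bandwidth in Hz and SNR is a linear power ratio. A minimal sketch. Taking the SNR in dB and converting it is a convenience assumed here, and the 3 kHz / 30 dB channel is hypothetical.

```python
import math

def awgn_capacity(bandwidth_hz, snr_db):
    """Shannon capacity C = W * log2(1 + SNR) in bit/s, with the SNR supplied in dB."""
    snr = 10 ** (snr_db / 10)  # dB -> linear power ratio
    return bandwidth_hz * math.log2(1 + snr)

print(awgn_capacity(1.0, 0.0))  # 1.0: at SNR = 1 (0 dB), 1 bit/s per Hz
print(round(awgn_capacity(3000.0, 30.0)))  # hypothetical 3 kHz channel at 30 dB
```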