Jelena Diakonikolas: Local Acceleration of Frank-Wolfe Methods

Conditional gradients (a.k.a. Frank-Wolfe) methods are the convex optimization methods of choice in settings where the feasible set is a convex polytope for which projections are expensive or even computationally intractable, but linear optimization can be implemented efficiently. Unlike p

From playlist Workshop: Continuous approaches to discrete optimization

Vianney Perchet - Highly-Smooth Zero-th Order Online Optimization

We consider online convex optimization with noisy zeroth-order information, that is, noisy function evaluations at any desired point. We focus on problems with high degrees of smoothness, such as online logistic regression. We show that as opposed to g

From playlist Schlumberger workshop - Computational and statistical trade-offs in learning

Haotian Jiang: Minimizing Convex Functions with Integral Minimizers

Given a separation oracle SO for a convex function f that has an integral minimizer inside a box with radius R, we show how to find an exact minimizer of f using at most • O(n(n + log(R))) calls to SO and poly(n, log(R)) arithmetic operations, or • O(n log(nR)) calls to SO and exp(O(n)) · po

From playlist Workshop: Continuous approaches to discrete optimization

A very basic overview of optimization, why it's important, the role of modeling, and the basic anatomy of an optimization project.

From playlist Optimization

Calculus: We present a procedure for solving word problems on optimization using derivatives. Examples include the fence problem and the minimum distance from a point to a line problem.

From playlist Calculus Pt 1: Limits and Derivatives

Lecture 13 | Convex Optimization I (Stanford)

Professor Stephen Boyd, of the Stanford University Electrical Engineering department, continues his lecture on geometric problems for the course, Convex Optimization I (EE 364A). Convex Optimization I concentrates on recognizing and solving convex optimization problems that arise in eng

From playlist Lecture Collection | Convex Optimization

Nonconvex Minimax Optimization - Chi Jin

Seminar on Theoretical Machine Learning Topic: Nonconvex Minimax Optimization Speaker: Chi Jin Affiliation: Princeton University; Member, School of Mathematics Date: November 20, 2019 For more videos, please visit http://video.ias.edu

From playlist Mathematics

Jorge Nocedal: "Tutorial on Optimization Methods for Machine Learning, Pt. 3"

Graduate Summer School 2012: Deep Learning, Feature Learning "Tutorial on Optimization Methods for Machine Learning, Pt. 3" Jorge Nocedal, Northwestern University Institute for Pure and Applied Mathematics, UCLA July 18, 2012 For more information: https://www.ipam.ucla.edu/programs/summ

From playlist GSS2012: Deep Learning, Feature Learning

Online Parallel Paging and Green Paging

Abstract: The parallel paging problem captures the task of efficiently sharing a cache among multiple parallel processors. Whereas the single-processor version of the problem has been well understood for decades, it has remained an open question how to find optimal algorithms for the multi

From playlist SIAG-ACDA Online Seminar Series

Lecture 7 | Convex Optimization I

Professor Stephen Boyd, of the Stanford University Electrical Engineering department, expands upon his previous lectures on convex optimization problems for the course, Convex Optimization I (EE 364A). Convex Optimization I concentrates on recognizing and solving convex optimization pro

From playlist Lecture Collection | Convex Optimization

Yusuke Kobayashi: A weighted linear matroid parity algorithm

The lecture was held within the framework of the follow-up workshop to the Hausdorff Trimester Program: Combinatorial Optimization. Abstract: The matroid parity (or matroid matching) problem, introduced as a common generalization of matching and matroid intersection problems, is so gener

From playlist Follow-Up-Workshop "Combinatorial Optimization"

Adaptive Sampling via Sequential Decision Making - András György

The workshop aims at bringing together researchers working on the theoretical foundations of learning, with an emphasis on methods at the intersection of statistics, probability and optimization. Lecture blurb: Sampling algorithms are widely used in machine learning, and their success of

From playlist The Interplay between Statistics and Optimization in Learning

Lecture 11 | Convex Optimization II (Stanford)

Lecture by Professor Stephen Boyd for Convex Optimization II (EE 364B) in the Stanford Electrical Engineering department. Professor Boyd lectures on Sequential Convex Programming. This course introduces topics such as subgradient, cutting-plane, and ellipsoid methods. Decentralized conv

From playlist Lecture Collection | Convex Optimization

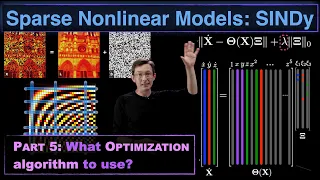

Sparse Nonlinear Dynamics Models with SINDy, Part 5: The Optimization Algorithms

This video discusses the various machine learning optimization schemes that may be used for the Sparse Identification of Nonlinear Dynamics (SINDy) algorithm. We discuss the LASSO sparse regression, sequential thresholded least squares (STLS), and the sparse relaxed regularized regression

From playlist Data-Driven Dynamical Systems with Machine Learning

Here we go over the sequential model, the basic building block of doing anything that's related to Deep Learning in Keras. (this is super important to understand everything else that is coming after this in the series!) I go over the two ways of declaring the functional signature (either

From playlist A Bit of Deep Learning and Keras

Ohad Shamir - Trade-offs in Distributed Learning

In many large-scale applications, learning must be done on training data which is distributed across multiple machines. This presents an important challenge, with multiple trade-offs between optimization accuracy, statistical performance, communication

From playlist Schlumberger workshop - Computational and statistical trade-offs in learning

From playlist CS294-112 Deep Reinforcement Learning Sp17

Anthony Nouy: Adaptive low-rank approximations for stochastic and parametric equations [...]

Find this video and other talks given by worldwide mathematicians on CIRM's Audiovisual Mathematics Library: http://library.cirm-math.fr. And discover all its functionalities: - Chapter markers and keywords to watch the parts of your choice in the video - Videos enriched with abstracts, b

From playlist Numerical Analysis and Scientific Computing

Bayesian Optimization in the Wild: Risk-Averse Decisions and Budget Constraints

A Google TechTalk, presented by Anastasia Makarova, 2022/08/23 Google BayesOpt Speaker Series - ABSTRACT: Black-box optimization tasks frequently arise in high-stakes applications such as material discovery or hyperparameter tuning of complex systems. In many of these applications, there i

From playlist Google BayesOpt Speaker Series 2021-2022