More periodic oscillations in a modified Brusselator

This is a longer version of the video https://youtu.be/mRcN-4kzGFY , with a different coloring of molecules, to make the oscillations more visible. The Brusselator model was proposed by Ilya Prigogine and his collaborators at the Université Libre de Bruxelles, to describe an oscillating a

From playlist Molecular dynamics

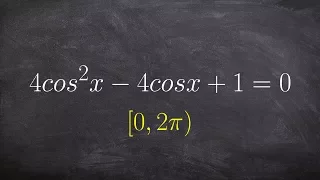

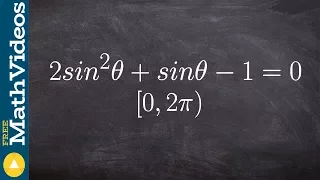

Solve a trig equation by factoring a perfect square trinomial

👉 Learn how to solve trigonometric equations. Various methods can be used to solve trigonometric equations, including factoring out the GCF and simplifying the factored equation. Another method is to use a trigonometric identity to reduce and then simplify the given eq

From playlist Solve Trigonometric Equations by Factoring

How to Get Classical Physics from Quantum Mechanics

We tend to think of Classical Physics as straightforward and intuitive and Quantum Mechanics as difficult and conceptually challenging. However, this is not always the case! In classical mechanics, a standard technique for finding the evolution equations for a system is the method of least

From playlist Quantum Mechanics

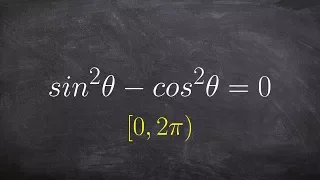

Solving a trigonometric equation by applying a Pythagorean identity

👉 Learn how to solve trigonometric equations. Various methods can be used to solve trigonometric equations, including factoring out the GCF and simplifying the factored equation. Another method is to use a trigonometric identity to reduce and then simplify the given eq

From playlist Solve Trigonometric Equations by Factoring

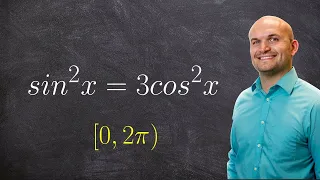

Solving a trig function with sine and cosine

👉 Learn how to solve trigonometric equations. Various methods can be used to solve trigonometric equations, including factoring out the GCF and simplifying the factored equation. Another method is to use a trigonometric identity to reduce and then simplify the given eq

From playlist Solve Trigonometric Equations by Factoring

Stanley Osher: "Linearized Bregman Algorithm for L1-regularized Logistic Regression"

Graduate Summer School 2012: Deep Learning, Feature Learning "Linearized Bregman Algorithm for L1-regularized Logistic Regression" Stanley Osher, UCLA Institute for Pure and Applied Mathematics, UCLA July 20, 2012 For more information: https://www.ipam.ucla.edu/programs/summer-schools/g

From playlist GSS2012: Deep Learning, Feature Learning

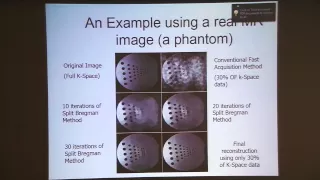

Stanley Osher: "Compressed Sensing: Recovery, Algorithms, and Analysis"

Graduate Summer School 2012: Deep Learning, Feature Learning "Compressed Sensing: Recovery, Algorithms, and Analysis" Stanley Osher, UCLA Institute for Pure and Applied Mathematics, UCLA July 20, 2012 For more information: https://www.ipam.ucla.edu/programs/summer-schools/graduate-summe

From playlist GSS2012: Deep Learning, Feature Learning

Babak Hassibi: "Implicit and Explicit Regularization in Deep Neural Networks"

Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021 Workshop IV: Efficient Tensor Representations for Learning and Computational Complexity "Implicit and Explicit Regularization in Deep Neural Networks" Babak Hassibi - California Institute of Technology Abstra

From playlist Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021

Lieven Vandenberghe: "Bregman proximal methods for semidefinite optimization."

Intersections between Control, Learning and Optimization 2020 "Bregman proximal methods for semidefinite optimization." Lieven Vandenberghe - University of California, Los Angeles (UCLA) Abstract: We discuss first-order methods for semidefinite optimization, based on non-Euclidean projec

From playlist Intersections between Control, Learning and Optimization 2020

30th Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series Talk

Date: Wednesday, June 30, 2021, 10:00am Eastern Time Zone (US & Canada) Speaker: Leon Bungert Title: A Bregman Learning Framework for Sparse Neural Networks Abstract: I will present a novel learning framework based on stochastic Bregman iterations. It allows to train sparse neural netwo

From playlist Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series

How to solve a trig equation by factoring

👉 Learn how to solve trigonometric equations. Various methods can be used to solve trigonometric equations, including factoring out the GCF and simplifying the factored equation. Another method is to use a trigonometric identity to reduce and then simplify the given eq

From playlist Solve Trigonometric Equations by Factoring

Solving a natural logarithmic equation

👉 Learn how to solve logarithmic equations. Logarithmic equations are equations with logarithms in them. To solve a logarithmic equation, we first isolate the logarithm part of the equation. After we have isolated the logarithm part of the equation, we then get rid of the logarithm. This i

From playlist Solve Logarithmic Equations

Bregman persistence homology [Hana Dal Poz Kouřimská]

In this tutorial you will learn about what Bregman Divergences are and how to use them to generalize persistence homology. There are few formulas and lots of pictures :) !!! There is a mistake in the video in the definition of the Bregman divergence (minute 2:08). As the last condition, i

From playlist Tutorial-a-thon 2021 Spring

Graeffe's Root-Squaring Method (also called the Graeffe–Dandelin–Lobachevsky or Dandelin–Lobachevsky–Graeffe method) for finding roots of polynomials. The method solves for all of the roots of a polynomial using only the coefficients and requires neither derivatives nor an iteration funct

From playlist Root Finding

Dynamical, symplectic and stochastic perspectives on optimization – Michael Jordan – ICM2018

Plenary Lecture 20 Dynamical, symplectic and stochastic perspectives on gradient-based optimization Michael Jordan Abstract: Our topic is the relationship between dynamical systems and optimization. This is a venerable, vast area in mathematics, counting among its many historical threads

From playlist Plenary Lectures

More videos like this online at http://www.theurbanpenguin.com We now look at how we can define and use methods in Ruby to help keep the code tidy and concise. This also helps with readability of the code and later maintenance. In the example we use we take the decimal to IP address conver

From playlist RUBY

Deep Learning and the “Blessing” of Dimensionality - Babak Hassibi - 6/7/2019

Changing Directions & Changing the World: Celebrating the Carver Mead New Adventures Fund. June 7, 2019 in Beckman Institute Auditorium at Caltech. The symposium features technical talks from Carver Mead New Adventures Fund recipients, alumni, and Carver Mead himself! Since 2014, this Fu

From playlist Carver Mead New Adventures Fund Symposium

Michael Jordan: "Optimization & Dynamical Systems: Variational, Hamiltonian, & Symplectic Perspe..."

High Dimensional Hamilton-Jacobi PDEs 2020 Workshop II: PDE and Inverse Problem Methods in Machine Learning "Optimization and Dynamical Systems: Variational, Hamiltonian, and Symplectic Perspectives" Michael Jordan - University of California, Berkeley (UC Berkeley) Abstract: We analyze t

From playlist High Dimensional Hamilton-Jacobi PDEs 2020

Solving a logarithmic equation

👉 Learn how to solve logarithmic equations. Logarithmic equations are equations with logarithms in them. To solve a logarithmic equation, we first isolate the logarithm part of the equation. After we have isolated the logarithm part of the equation, we then get rid of the logarithm. This i

From playlist Solve Logarithmic Equations

Dominique Spehner : Measuring quantum correlations with relative Rényi entropie

Recorded during the thematic meeting "Geometrical and Topological Structures of Information" on August 31, 2017 at the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Guillaume Hennenfent

From playlist Geometry