How a Computer know a Sentence is Grammatical: Context Free Grammars [Lecture]

This is a single lecture from a course. If you like the material and want more context (e.g., the lectures that came before), check out the whole course: https://boydgraber.org/teaching/CMSC_723/ (Including homeworks and reading.) Music: https://soundcloud.com/alvin-grissom-ii/review

From playlist Computational Linguistics I

7.1: Intro to Session 7: Context-Free Grammar - Programming with Text

This video introduces Session 7: Context-Free Grammar from the ITP course "Programming from A to Z". A Context-Free Grammar is a set of recursive "replacement" rules to generate text. In this session, I discuss two JavaScript libraries: Tracery and RiTa.js for working with context-free gr

From playlist Programming with Text - All Videos
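
To make the idea of "replacement" rules concrete, here is a minimal sketch in Python. It does not use Tracery or RiTa.js; the toy grammar and the names `GRAMMAR`, `expand`, and `generate` are assumptions made up for illustration. Each symbol is replaced by one of its alternatives until only plain words remain.

```python
import random

# Hypothetical toy grammar (not from the video): each symbol maps to a
# list of alternative expansions, and each expansion is a list of parts.
GRAMMAR = {
    "S": [["NP", "VP"]],
    "NP": [["the", "N"], ["the", "ADJ", "N"]],
    "VP": [["V", "NP"], ["V"]],
    "N": [["cat"], ["dog"]],
    "ADJ": [["small"]],
    "V": [["sees"], ["sleeps"]],
}


def expand(symbol, rng):
    """Recursively replace a symbol until only terminal words remain."""
    if symbol not in GRAMMAR:
        # Anything without a rule is a terminal word: keep it as-is.
        return [symbol]
    production = rng.choice(GRAMMAR[symbol])
    words = []
    for part in production:
        words.extend(expand(part, rng))
    return words


def generate(seed=None):
    """Generate one sentence starting from the symbol S."""
    rng = random.Random(seed)
    return " ".join(expand("S", rng))


if __name__ == "__main__":
    print(generate())
```

Every sentence this sketch produces starts with "the" and has between three and seven words, because those are the only shapes the rules allow. Passing a `seed` makes the random choices repeatable.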

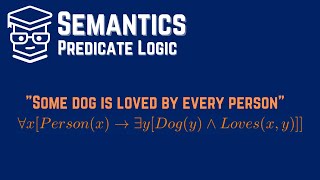

An Overview of Predicate Logic for Linguists - Semantics in Linguistics

This video covers predicate logic in #semantics for #linguistics. We talk about predicates, quantifiers (for all, for some), how to translate sentences into predicate logic, scope, bound variables, free variables, and assignment functions. Join this channel to get access to perks: https:/

From playlist Semantics in Linguistics

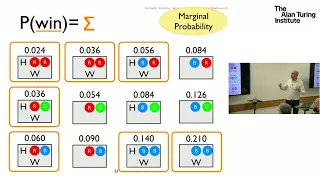

Probabilistic logic programming and its applications - Luc De Raedt, Leuven

Probabilistic programs combine the power of programming languages with that of probabilistic graphical models. There has been a lot of progress in this paradigm over the past twenty years. This talk will introduce probabilistic logic programming languages, which are based on Sato's distrib

From playlist Logic and learning workshop

NOUN PHRASES - ENGLISH GRAMMAR

We discuss noun phrases. Noun phrases consist of a head noun, proper name, or pronoun. Noun phrases can be modified by adjective phrases or other noun phrases. Noun phrases take determiners as specifiers. We also draw trees for noun phrases. If you want to support the channel, hit the "JOIN"

From playlist English Grammar

Fellow Short Talks: Dr Charles Sutton, Edinburgh University

Charles Sutton is a Reader (equivalent to Associate Professor: http://bit.ly/1W9UhqT) in Machine Learning at the University of Edinburgh. He has over 50 publications in a broad range of applications of probabilistic machine learning. His work in machine learning for software engineering ha

From playlist Short Talks

Building context-free grammars: Theory of Computation (Mar 12 2021)

Context free grammars! This is a recording of a live class for Math 3342, Theory of Computation, an undergraduate course for math & computer science majors at Fairfield University, Spring 2021. Class website: http://cstaecker.fairfield.edu/~cstaecker/courses/2021s3342/

From playlist Math 3342 (Theory of Computation) Spring 2021

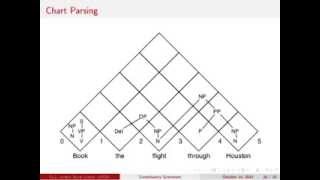

How to Parse a Sentence with the CYK Algorithm [Lecture]

Dependency Parsing: https://youtu.be/ZT1Et5wd1SQ This is a single lecture from a course. If you like the material and want more context (e.g., the lectures that came before), check out the whole course: https://boydgraber.org/teaching/CMSC_723/ (Including homeworks and reading.) Mus

From playlist Computational Linguistics I

Stanford CS224N: NLP with Deep Learning | Winter 2019 | Lecture 18 – Constituency Parsing, TreeRNNs

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3wL2FCD Professor Christopher Manning, Stanford University http://onlinehub.stanford.edu/ Professor Christopher Manning Thomas M. Siebel Professor in Machine Lear

From playlist Stanford CS224N: Natural Language Processing with Deep Learning Course | Winter 2019

Paola Cantù : Logic and Interaction:pragmatics and argumentation theory

HYBRID EVENT Recorded during the meeting "Logic and transdisciplinarity" on February 11, 2022 by the Centre International de Rencontres Mathématiques (Marseille, France) Filmmaker: Guillaume Hennenfent Find this video and other talks given by worldwide mathematicians on CIRM's Audiov

From playlist Logic and Foundations

Matthijs Vákár: Mathematical foundations of automatic differentiation

HYBRID EVENT Recorded during the meeting "Logic of Probabilistic Programming" on January 31, 2022 by the Centre International de Rencontres Mathématiques (Marseille, France) Filmmaker: Guillaume Hennenfent Find this video and other talks given by worldwide mathematicians on CIRM's Aud

From playlist Virtual Conference

CMU Neural Nets for NLP 2017 (13): Parsing With Dynamic Programs

This lecture (by Graham Neubig) for CMU CS 11-747, Neural Networks for NLP (Fall 2017) covers: * What is Graph-based Parsing? * Minimum Spanning Tree Parsing * Structured Training and Other Improvements * Dynamic Programming Methods for Phrase Structure Parsing * Reranking Slides: http:/

From playlist CMU Neural Nets for NLP 2017