Dynamic Random Access Memory (DRAM). Part 3: Binary Decoders

This is the third in a series of computer science videos about the fundamental principles of Dynamic Random Access Memory, DRAM, and the essential concepts of DRAM operation. This video covers the role of the row address decoder and the workings of generic binary decoders. It also expl

From playlist Random Access Memory

In this video, I present some applications of artificial neural networks and describe how such networks are typically structured. My hope is to create another video (soon) in which I describe how neural networks are actually trained from data.

From playlist Machine Learning

Mapping The Brain | Digging Deeper

Should the United States spend billions to completely map the human brain? Will it ever be possible to build an artificial brain - and, if we do, what are the implications for the future? Join Ben and Matt as they talk about some interesting stuff that didn't make it into the Deceptive Bra

From playlist Stuff They Don't Want You To Know, New Episodes!

Backpropagation explained | Part 2 - The mathematical notation

We covered the intuition behind what backpropagation's role is during the training of an artificial neural network. https://youtu.be/XE3krf3CQls Now, we're going to focus on the math that's underlying backprop. The math is pretty involved, and so we're going to break it up into bite-size

From playlist Deep Learning Fundamentals - Intro to Neural Networks

Coding Decoding Reasoning Tricks | Coding Decoding Reasoning Examples | Simplilearn

This video on coding, decoding, and reasoning tricks will make it easier to prepare for job-related exams. It also covers some examples related to coding, decoding, and reasoning and provides a clear explanation for every topic. Topics covered in this coding, decodi

From playlist Data Structures & Algorithms [2022 Updated]

Neuroscientists are starting to decipher what a person is seeing, remembering and even dreaming just by looking at their brain activity. They call it brain decoding. In this Nature Video, we see three different uses of brain decoding, including a virtual reality experiment that could use

From playlist Neuro

Linear Algebra for Computer Scientists. 9. Decomposing Vectors

This computer science video is one of a series on linear algebra for computer scientists. In this video you will learn how to express a given vector as a linear combination of a set of given basis vectors. In other words, you will learn how to determine the coefficients that were used to

From playlist Linear Algebra for Computer Scientists

Seminar 5: Tom Mitchell - Neural Representations of Language

MIT RES.9-003 Brains, Minds and Machines Summer Course, Summer 2015 View the complete course: https://ocw.mit.edu/RES-9-003SU15 Instructor: Tom Mitchell Modeling the neural representations of language using machine learning to classify words from fMRI data, predictive models for word feat

From playlist MIT RES.9-003 Brains, Minds and Machines Summer Course, Summer 2015

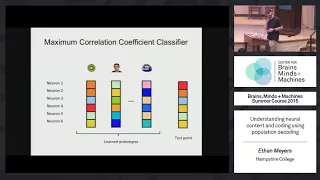

Tutorial 4: Ethan Meyers - Understanding Neural Content via Population Decoding

MIT RES.9-003 Brains, Minds and Machines Summer Course, Summer 2015 View the complete course: https://ocw.mit.edu/RES-9-003SU15 Instructor: Ethan Meyers Population decoding is a powerful way to analyze neural data - from single cell recordings, fMRI, MEG, EEG, etc - to understand the info

From playlist MIT RES.9-003 Brains, Minds and Machines Summer Course, Summer 2015

3.0 back-propagation algorithm: introduction

Deep Learning Course Purdue University Fall 2016 https://docs.google.com/document/d/1_p4Y_9Y79uBiMB8ENvJ0Uy8JGqhMQILIFrLrAgBXw60

From playlist Deep-Learning-Course

Stanford CS224N NLP with Deep Learning | Winter 2021 | Lecture 7 - Translation, Seq2Seq, Attention

For more information about Stanford's Artificial Intelligence professional and graduate programs visit: https://stanford.io/3CnshYl This lecture covers: 1. Introduce a new task: Machine Translation [15 mins] - Machine Translation (MT) is the task of translating a sentence x from one langu

From playlist Stanford CS224N: Natural Language Processing with Deep Learning | Winter 2021

Deep Learning for Audio and Natural Language Processing

In the third webinar in the Machine Learning webinar series, learn to use machine learning for audio analysis with some real-world applications of neural net models. Also featured is a demonstration of using neural net models for natural language processing.

From playlist Machine Learning Webinar Series

Deep Decoder: Concise Image Representations from Untrained Networks (Lecture 2) by Paul Hand

DISCUSSION MEETING THE THEORETICAL BASIS OF MACHINE LEARNING (ML) ORGANIZERS: Chiranjib Bhattacharya, Sunita Sarawagi, Ravi Sundaram and SVN Vishwanathan DATE : 27 December 2018 to 29 December 2018 VENUE : Ramanujan Lecture Hall, ICTS, Bangalore ML (Machine Learning) has enjoyed tr

From playlist The Theoretical Basis of Machine Learning 2018 (ML)

OCR model for reading Captchas - Keras Code Examples

This video walks through a CNN+RNN Captcha reader. The key to this is the CTC loss, the article below goes into a deeper dive than the video. Content Links: Keras Code Examples OCR for reading Captchas: https://keras.io/examples/vision/captcha_ocr/ TDS Connectionist Temporal Classificatio

From playlist Keras Code Examples

How does Google Translate's AI work?

Let’s take a look at how Google Translate’s Neural Network works behind the scenes! Read these references below for the best understanding of Neural Machine Translation! REFERENCES [1] Landmark paper of LSTM (Hochreiter et al., 1997): https://www.bioinf.jku.at/publications/older/2604.pd

From playlist Deep Learning Research Papers

Sequence Modelling with CNNs and RNNs

In this lecture we look at CNNs and RNNs for sequence modelling. Slides & transcripts are available at: https://github.com/sebastian-hofstaetter/teaching 📖 Check out YouTube's CC - we added our high-quality (human-corrected) transcripts here as well. Slide Timestamps: 0:00:00 1 - Welcom

From playlist Advanced Information Retrieval 2021 - TU Wien

How Google Translate Works - The Machine Learning Algorithm Explained!

Let’s take a look at how Google Translate’s Neural Network works behind the scenes! Read these references below for the best understanding of Neural Machine Translation! SUBSCRIBE TO CODE EMPORIUM: https://www.youtube.com/c/CodeEmporium?sub_confirmation=1 To submit your video to CS Dojo

From playlist Videos from Our Other Channels

This lecture gives an overview of neural networks, which play an important role in machine learning today. Book website: http://databookuw.com/ Steve Brunton's website: eigensteve.com

From playlist Intro to Data Science

Lecture 10: Neural Machine Translation and Models with Attention

Lecture 10 introduces translation, machine translation, and neural machine translation. Google's new NMT is highlighted, followed by sequence models with attention as well as sequence model decoders. Natural L

From playlist Lecture Collection | Natural Language Processing with Deep Learning (Winter 2017)