Local regression

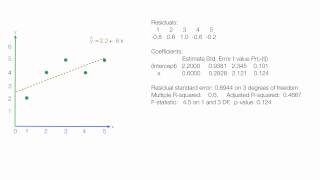

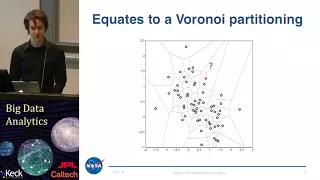

Local regression or local polynomial regression, also known as moving regression, is a generalization of the moving average and polynomial regression. Its most common methods, initially developed for scatterplot smoothing, are LOESS (locally estimated scatterplot smoothing) and LOWESS (locally weighted scatterplot smoothing), both pronounced /ˈloʊɛs/. They are two strongly related non-parametric regression methods that combine multiple regression models in a k-nearest-neighbor-based meta-model. In some fields, LOESS is known and commonly referred to as the Savitzky–Golay filter (proposed 15 years before LOESS).

LOESS and LOWESS thus build on "classical" methods, such as linear and nonlinear least squares regression. They address situations in which the classical procedures do not perform well or cannot be effectively applied without undue labor. LOESS combines much of the simplicity of linear least squares regression with the flexibility of nonlinear regression. It does this by fitting simple models to localized subsets of the data to build up a function that describes the deterministic part of the variation in the data, point by point. In fact, one of the chief attractions of this method is that the data analyst is not required to specify a global function of any form to fit a model to the data, only to fit segments of the data.

The trade-off for these features is increased computation. Because it is so computationally intensive, LOESS would have been practically impossible to use in the era when least squares regression was being developed. Most other modern methods for process modeling are similar to LOESS in this respect. These methods have been consciously designed to use current computational ability to the fullest possible advantage to achieve goals not easily achieved by traditional approaches.
A smooth curve through a set of data points obtained with this statistical technique is called a loess curve, particularly when each smoothed value is given by a weighted quadratic least squares regression over the span of values of the y-axis scattergram criterion variable. When each smoothed value is given by a weighted linear least squares regression over the span, this is known as a lowess curve; however, some authorities treat lowess and loess as synonyms. (Wikipedia).
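The point-by-point fitting described above can be illustrated with a minimal pure-Python sketch. It is not a reference implementation of LOESS or LOWESS: the function names (`tricube`, `solve`, `local_fit`), the tricube weight function, and the choice of bandwidth as the distance to the k-th nearest neighbour are assumptions made for illustration, and the robustness iterations used by some implementations are omitted.

```python
def tricube(u):
    """Tricube weight (1 - |u|^3)^3 for |u| < 1, zero otherwise (assumed kernel)."""
    u = abs(u)
    return (1.0 - u ** 3) ** 3 if u < 1.0 else 0.0


def solve(a, b):
    """Solve the small system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def local_fit(xs, ys, x0, k, degree=1):
    """Smoothed value at x0 from a weighted polynomial fit to the k nearest points.

    degree=1 is a local linear fit, degree=2 a local quadratic fit, and
    degree=0 reduces to a weighted moving average.
    """
    # Select the k nearest neighbours of x0.
    nearest = sorted(range(len(xs)), key=lambda i: abs(xs[i] - x0))[:k]
    # Bandwidth: distance to the farthest selected neighbour (an assumption).
    # That neighbour therefore receives weight zero, so k should exceed degree + 1.
    h = max(abs(xs[i] - x0) for i in nearest)
    # Build the weighted normal equations in the centred coordinate d = x - x0.
    p = degree + 1
    a = [[0.0] * p for _ in range(p)]
    b = [0.0] * p
    for i in nearest:
        d = xs[i] - x0
        w = tricube(d / h) if h > 0 else 1.0
        for r in range(p):
            b[r] += w * d ** r * ys[i]
            for c in range(p):
                a[r][c] += w * d ** (r + c)
    # Because the polynomial is centred at x0, its intercept is the fitted value there.
    return solve(a, b)[0]


if __name__ == "__main__":
    xs = [float(i) for i in range(11)]
    ys = [x * x for x in xs]
    # Evaluate the smoother point by point, once per query location.
    linear = [local_fit(xs, ys, x, k=5, degree=1) for x in xs]
    quadratic = [local_fit(xs, ys, x, k=7, degree=2) for x in xs]
    print(linear)
    print(quadratic)
```

Each call solves one small weighted least squares problem, so evaluating the curve at n points costs n separate fits; this is the extra computation the text refers to. The sketch does not guard against a singular system, which can occur when too few neighbours have non-zero weight or when many x values coincide.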