http://AllSignalProcessing.com for more great signal-processing content: ad-free videos, concept/screenshot files, quizzes, MATLAB and data files. The likelihood ratio test maximizes the probability of correctly deciding hypothesis H_1 is true for any given probability of deciding H_0 is

From playlist Estimation and Detection Theory

EXTRA MATH Lec 6B: Maximum likelihood estimation for the binomial model

Lecture with Per B. Brockhoff. Chapters:

From playlist DTU: Introduction to Statistics | CosmoLearning.org

Probability is not Likelihood. Find out why!!!

NOTE: This video was originally made as a follow-up to an overview of Maximum Likelihood (https://youtu.be/XepXtl9YKwc). That video provides context that gives this video more meaning. Here's one of those tricky little things, Probability vs. Likelihood. In common conversation we use these

From playlist StatQuest

Likelihood Estimation - THE MATH YOU SHOULD KNOW!

Likelihood is a confusing term. It is not a probability, but is proportional to a probability. Likelihood and probability can't be used interchangeably. In this post, we will dissect likelihood as a concept and see why it is important in machine learning. We will a

From playlist The Math You Should Know

Implications and Truth Conditions for Implications

This video defines an implication and when an implication is true or false.

From playlist Mathematical Statements (Discrete Math)

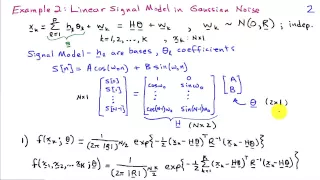

Maximum Likelihood Estimation Examples

http://AllSignalProcessing.com for more great signal processing content, including concept/screenshot files, quizzes, MATLAB and data files. Three examples of applying the maximum likelihood criterion to find an estimator: 1) Mean and variance of an iid Gaussian, 2) Linear signal model in

From playlist Estimation and Detection Theory

Suhasini Subba Rao: Reconciling the Gaussian and Whittle Likelihood with an application to ...

In time series analysis there is an apparent dichotomy between time and frequency domain methods. The aim of this paper is to draw connections between frequency and time domain methods. Our focus will be on reconciling the Gaussian likelihood and the Whittle likelihood. We derive an exact,

From playlist Virtual Conference

Rémi Bardenet: A tutorial on Bayesian machine learning: what, why and how - lecture 2

HYBRID EVENT Recorded during the meeting "End-to-end Bayesian Learning Methods" on October 25, 2021, by the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Guillaume Hennenfent. Find this video and other talks given by worldwide mathematicians on CIRM's

From playlist Mathematical Aspects of Computer Science

EXTRA MATH 6A: introduction to likelihood theory

Lecture with Per B. Brockhoff. Chapters:

From playlist DTU: Introduction to Statistics | CosmoLearning.org

Statistical methods in particle physics by Harrison Prosper

Discussion Meeting : Hunting SUSY @ HL-LHC (ONLINE) ORGANIZERS : Satyaki Bhattacharya (SINP, India), Rohini Godbole (IISc, India), Kajari Majumdar (TIFR, India), Prolay Mal (NISER-Bhubaneswar, India), Seema Sharma (IISER-Pune, India), Ritesh K. Singh (IISER-Kolkata, India) and Sanjay Kuma

From playlist HUNTING SUSY @ HL-LHC (ONLINE) 2021

From playlist STAT 501

Deep Learning 8: Unsupervised learning and generative models

Shakir Mohamed, Research Scientist, discusses unsupervised learning and generative models as part of the Advanced Deep Learning & Reinforcement Learning Lectures.

From playlist Learning resources

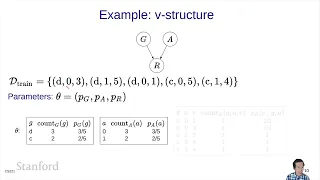

Bayesian Networks 7 - Supervised Learning | Stanford CS221: AI (Autumn 2021)

For more information about Stanford's Artificial Intelligence professional and graduate programs visit: https://stanford.io/ai Associate Professor Percy Liang Associate Professor of Computer Science and Statistics (courtesy) https://profiles.stanford.edu/percy-liang Assistant Professor

From playlist Stanford CS221: Artificial Intelligence: Principles and Techniques | Autumn 2021

Parametric Probability Distribution Fitted to Data with Bayes's Theorem

James Rock explains how he's using Bayes's Theorem to fit a parametric distribution to data with Mathematica in this talk from the Wolfram Technology Conference. For more information about Mathematica, please visit: http://www.wolfram.com/mathematica

From playlist Wolfram Technology Conference 2012

undergraduate machine learning 12: Bayesian learning

Introduction to Bayesian learning. The slides are available here: http://www.cs.ubc.ca/~nando/340-2012/lectures.php This course was taught in 2012 at UBC by Nando de Freitas

From playlist undergraduate machine learning at UBC 2012

Nando de Freitas: "An Informal Mathematical Tour of Feature Learning, Pt. 2"

Graduate Summer School 2012: Deep Learning, Feature Learning "An Informal Mathematical Tour of Feature Learning, Pt. 2" Nando de Freitas, University of British Columbia Institute for Pure and Applied Mathematics, UCLA July 26, 2012 For more information: https://www.ipam.ucla.edu/program

From playlist GSS2012: Deep Learning, Feature Learning

The Generalized Likelihood Ratio Test

http://AllSignalProcessing.com for more great signal processing content, including concept/screenshot files, quizzes, MATLAB and data files. There is no universally optimal test strategy for composite hypotheses (unknown parameters in the pdfs). The generalized likelihood ratio test (GLRT

From playlist Estimation and Detection Theory