From playlist filter (less comfortable)

Why Use Kalman Filters? | Understanding Kalman Filters, Part 1

Download our Kalman Filter Virtual Lab to practice linear and extended Kalman filter design of a pendulum system with interactive exercises and animations in MATLAB and Simulink: https://bit.ly/3g5AwyS Discover common uses of Kalman filters by walking through some examples. A Kalman filte

From playlist Understanding Kalman Filters

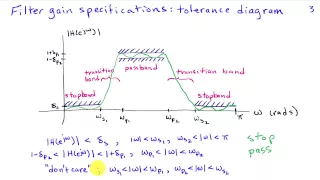

Introduction to Frequency Selective Filtering

http://AllSignalProcessing.com for a free e-book on frequency relationships and more great signal processing content, including concept/screenshot files, quizzes, MATLAB and data files. Separation of signals based on frequency content using lowpass, highpass, bandpass, and other filters. Filter g

From playlist Introduction to Filter Design
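
As a minimal sketch of frequency-selective filtering, the Python below designs a lowpass FIR by windowing an ideal sinc impulse response. It then derives the matching highpass by spectral inversion. The Hamming window is our choice and not necessarily the lecture's method. The function names and the 101-tap / 150 Hz / 2 kHz values are illustrative assumptions.

```python
import math

def lowpass(num_taps: int, cutoff_hz: float, fs_hz: float) -> list[float]:
    """Hamming-windowed sinc lowpass FIR, scaled to unit gain at DC (num_taps odd)."""
    assert num_taps % 2 == 1, "odd length keeps a centre tap for spectral inversion"
    fc = cutoff_hz / fs_hz          # cutoff in cycles per sample
    mid = (num_taps - 1) // 2
    h = []
    for n in range(num_taps):
        k = n - mid
        # ideal lowpass impulse response 2*fc*sinc(2*fc*k), tapered by the window
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        window = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        h.append(ideal * window)
    dc = sum(h)
    return [c / dc for c in h]

def highpass(num_taps: int, cutoff_hz: float, fs_hz: float) -> list[float]:
    """Spectral inversion: a delayed unit impulse minus the matching lowpass."""
    h = [-c for c in lowpass(num_taps, cutoff_hz, fs_hz)]
    h[(num_taps - 1) // 2] += 1.0
    return h

def gain(h: list[float], f_hz: float, fs_hz: float) -> float:
    """Magnitude of the filter's frequency response at a single frequency."""
    w = 2 * math.pi * f_hz / fs_hz
    re = sum(c * math.cos(w * n) for n, c in enumerate(h))
    im = sum(c * math.sin(w * n) for n, c in enumerate(h))
    return math.hypot(re, im)

if __name__ == "__main__":
    fs = 2000.0
    lp, hp = lowpass(101, 150.0, fs), highpass(101, 150.0, fs)
    for f in (50.0, 400.0):
        print(f"{f:5.0f} Hz  lowpass {gain(lp, f, fs):.4f}  highpass {gain(hp, f, fs):.4f}")
```

The lowpass passes a 50 Hz component almost unchanged and suppresses a 400 Hz one. The highpass does the reverse, so the two filters split a mixture by frequency content.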

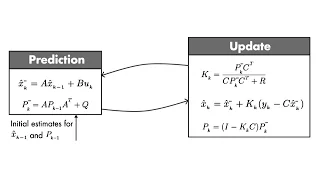

Optimal State Estimator Algorithm | Understanding Kalman Filters, Part 4

Discover the set of equations you need to implement a Kalman filter algorithm. You’ll l

From playlist Understanding Kalman Filters
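
A scalar sketch of the usual predict/update equations, assuming a one-dimensional state. The class and the names `F`, `B`, `H`, `Q`, `R`, `P` follow common textbook notation. They are our assumption, not necessarily the video's symbols.

```python
from dataclasses import dataclass

@dataclass
class ScalarKalman:
    F: float  # state transition
    B: float  # control-input gain
    H: float  # measurement model
    Q: float  # process-noise variance
    R: float  # measurement-noise variance
    x: float  # current state estimate
    P: float  # variance of the current estimate

    def predict(self, u: float = 0.0) -> None:
        # propagate the estimate and its uncertainty through the model
        self.x = self.F * self.x + self.B * u
        self.P = self.F * self.P * self.F + self.Q

    def update(self, z: float) -> None:
        S = self.H * self.P * self.H + self.R   # innovation variance
        K = self.P * self.H / S                  # Kalman gain
        self.x = self.x + K * (z - self.H * self.x)
        self.P = (1.0 - K * self.H) * self.P

if __name__ == "__main__":
    kf = ScalarKalman(F=1.0, B=0.0, H=1.0, Q=0.0, R=1.0, x=0.0, P=1e9)
    for z in [1.0, 2.0, 3.0, 4.0, 5.0]:
        kf.predict()
        kf.update(z)
    print(kf.x, kf.P)
```

With `F = H = 1`, `Q = 0` and a very large initial `P`, the estimate approaches the running mean of the measurements and `P` approaches `R / n`. This makes a handy sanity check.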

Special Topics - The Kalman Filter (1 of 55) What is a Kalman Filter?

Visit http://ilectureonline.com for more math and science lectures! In this video I will explain what a Kalman filter is and how it is used. The next video in this series can be seen at: https://youtu.be/tk3OJjKTDnQ

From playlist SPECIAL TOPICS 1 - THE KALMAN FILTER

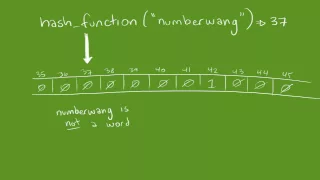

For more information on Bloom Filters, check the Wikipedias: http://en.wikipedia.org/wiki/Bloom_filter , for special topics like "How to get around the 'no deletion' rule" and "How do I generate all of these different hash functions anyways?" For other questions, like "who taught you how

From playlist Software Development Lectures
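
A minimal Bloom filter sketch. The filter needs k different hash functions. This sketch derives the k indexes by double hashing, using two values sliced from one SHA-256 digest. That is one common technique, assumed here rather than taken from the lecture. The sizing formulas are the standard ones for a target false-positive rate. The sketch supports no deletion, because clearing a bit could also drop other items that share it.

```python
import hashlib
import math

class BloomFilter:
    """Bit-array Bloom filter with k indexes derived by double hashing."""

    def __init__(self, capacity: int, fp_rate: float):
        # standard sizing: m = -n ln(p) / (ln 2)^2 bits, k = (m / n) ln 2 hashes
        self.m = max(1, math.ceil(-capacity * math.log(fp_rate) / (math.log(2) ** 2)))
        self.k = max(1, round(self.m / capacity * math.log(2)))
        self.bits = bytearray((self.m + 7) // 8)

    def _indexes(self, item: str):
        digest = hashlib.sha256(item.encode("utf-8")).digest()
        h1 = int.from_bytes(digest[:8], "big")
        h2 = int.from_bytes(digest[8:16], "big") | 1  # force an odd step
        for i in range(self.k):
            yield (h1 + i * h2) % self.m              # i-th simulated hash function

    def add(self, item: str) -> None:
        for idx in self._indexes(item):
            self.bits[idx // 8] |= 1 << (idx % 8)

    def __contains__(self, item: str) -> bool:
        # False means "definitely absent"; True means "probably present"
        return all(self.bits[idx // 8] & (1 << (idx % 8)) for idx in self._indexes(item))

if __name__ == "__main__":
    bf = BloomFilter(capacity=1000, fp_rate=0.01)
    bf.add("alice")
    print("alice" in bf, "bob" in bf)
```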

Kernel Recipes 2018 - CPU Idle Loop Rework - Rafael J. Wysocki

The CPU idle loop is the piece of code executed by logical CPUs if they have no tasks to run. If the CPU supports idle states allowing it to draw less power while not executing any instructions, the idle loop invokes a CPU idle governor to select the most suitable idle state for the CPU an

From playlist Kernel Recipes 2018
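
The selection step described above can be modeled roughly as follows. This is a simplified sketch, not kernel code. The governor picks the deepest idle state whose target residency fits the predicted idle time and whose exit latency stays within a latency limit. The `IdleState` type, the helper name and the state table are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class IdleState:
    name: str
    exit_latency_us: int      # time needed to wake up from this state
    target_residency_us: int  # minimum stay for the state to actually save energy

def select_idle_state(states, predicted_idle_us, latency_limit_us):
    """Return the index of the deepest state that satisfies both constraints.

    States are assumed ordered from shallowest (index 0) to deepest, with
    deeper states having longer residencies and latencies. Index 0 is the
    fallback when nothing deeper qualifies.
    """
    chosen = 0
    for i, s in enumerate(states):
        if s.target_residency_us > predicted_idle_us:
            break  # too deep: the CPU would not stay idle long enough
        if s.exit_latency_us > latency_limit_us:
            break  # too deep: waking up would take too long
        chosen = i
    return chosen

if __name__ == "__main__":
    table = [IdleState("POLL", 0, 0), IdleState("C1", 2, 2), IdleState("C6", 100, 400)]
    print(table[select_idle_state(table, predicted_idle_us=1000, latency_limit_us=1000)].name)
```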

Kernel Recipes 2014 - NDIV: a low overhead network traffic diverter

NDIV is a young, very simple, yet efficient network traffic diverter. Its purpose is to help build network applications that intercept packets at line rate with a very low processing overhead. A first example application is a stateless HTTP server reaching line rate on all packet sizes.

From playlist Kernel Recipes 2014

DDPS | Physics-Guided Deep Learning for Dynamics Forecasting

In this talk from July 9, 2021, University of California, San Diego Computer Science Ph.D. student Rui Wang discusses physics-based modeling with deep learning. Description: Modeling complex physical dynamics is a fundamental task in science and engineering. There is a growing need for in

From playlist Data-driven Physical Simulations (DDPS) Seminar Series

Joshua Bon - Twisted: Improving particle filters by learning modified paths

Dr Joshua Bon (QUT) presents "Twisted: Improving particle filters by learning modified paths", 22 April 2022.

From playlist Statistics Across Campuses

Optimal State Estimator | Understanding Kalman Filters, Part 3

Watch this video for an explanation of how Kalman filters work. Kalman filters combine

From playlist Understanding Kalman Filters
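
One way to see the optimal-estimate idea is to fuse a model prediction with a measurement, treating each as an independent Gaussian estimate of the same quantity. This sketch assumes a scalar state observed directly (H = 1). `fuse` is a hypothetical helper name.

```python
def fuse(mean_pred: float, var_pred: float, mean_meas: float, var_meas: float):
    """Minimum-variance combination of two independent Gaussian estimates."""
    k = var_pred / (var_pred + var_meas)           # weight on the measurement (Kalman gain)
    mean = mean_pred + k * (mean_meas - mean_pred)  # pulled toward the more certain input
    var = (1.0 - k) * var_pred                      # = var_pred * var_meas / (var_pred + var_meas)
    return mean, var

if __name__ == "__main__":
    print(fuse(10.0, 1.0, 20.0, 9.0))  # the confident prediction dominates
```

The fused variance is always smaller than either input variance. That is why combining the two sources beats using either one alone.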

Keynote: AI for Adaptive Experiment Design - Yisong Yue - 10/25/2019

AI-4-Science Workshop, October 25, 2019 at Bechtel Residence Dining Hall, Caltech. Learn more about: - AI-4-science: https://www.ist.caltech.edu/ai4science/ - Events: https://www.ist.caltech.edu/events/ Produced in association with Caltech Academic Media Technologies. ©2019 California I

From playlist AI-4-Science Workshop

TU Wien Rendering #22 - Reinhard's Tone Mapper

This lecture is held by Thomas Auzinger. In the first lecture, we discussed that we're trying to simulate light transport and measure radiance. That sounds indeed wonderful, but we can't display radiance on our display device, can we? We have to convert it to RGB somehow. It turns out that

From playlist TU Wien Rendering / Ray Tracing Course
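
A minimal sketch of Reinhard's global operator as it is commonly stated. It computes luminance and scales it by the key value over the log-average luminance. It then compresses the result with L/(1+L) and rescales each channel by the same ratio. The Rec. 709 luminance weights, the key of 0.18 and the small `delta` are conventional assumptions. Gamma encoding for the display is left out.

```python
import math

def reinhard_tonemap(pixels, key=0.18, delta=1e-6):
    """Map linear (R, G, B) radiance triples to displayable values in [0, 1)."""
    lum = [0.2126 * r + 0.7152 * g + 0.0722 * b for r, g, b in pixels]
    # log-average ("geometric mean") luminance of the whole image
    log_avg = math.exp(sum(math.log(delta + L) for L in lum) / len(lum))
    out = []
    for (r, g, b), Lw in zip(pixels, lum):
        L = key / log_avg * Lw            # scale the scene to the chosen key
        Ld = L / (1.0 + L)                # compress high luminances toward 1
        s = Ld / Lw if Lw > 0 else 0.0    # same ratio on every channel keeps hue
        out.append((r * s, g * s, b * s))
    return out

if __name__ == "__main__":
    hdr = [(0.05, 0.04, 0.03), (1.0, 0.9, 0.8), (500.0, 450.0, 400.0)]
    for px in reinhard_tonemap(hdr):
        print(tuple(round(c, 4) for c in px))
```

A pixel at the log-average luminance maps to about key / (1 + key). Arbitrarily bright pixels approach but never reach 1.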

Edouard Oyallon: One signal processing view on deep Learning - lecture 2

Since 2012, deep neural networks have led to outstanding results in many applications, exceeding any previously existing methods, in texts, images, sounds, videos, graphs... They consist of a cascade of parametrized linear and non-linear operators whose parameters are opt

From playlist Mathematical Aspects of Computer Science

30 years ago a man attempted to denoise medical imagery and unknowingly set off a chain reaction of research developments leading to a modern day post processing effect that transforms images into paintings, but how did he do it? Download my GShade shader pack! https://github.com/GarrettG

From playlist Post Processing

How do Vision Transformers work? – Paper explained | multi-head self-attention & convolutions

It turns out that multi-head self-attention and convolutions are complementary. So, what makes multi-head self-attention different from convolutions? How and why do Vision Transformers work? In this video, we will find out by explaining the paper “How Do Vision Transformers Work?” by Namuk

From playlist The Transformer explained by Ms. Coffee Bean
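
For reference, here is a NumPy sketch of multi-head self-attention over a sequence of tokens. In a Vision Transformer, the tokens are image patch embeddings. A convolution applies a fixed kernel, while the attention weights here are computed from the input itself. Shapes and weight names are assumptions, and biases, masking and layer normalization are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (tokens, d_model); w_q, w_k, w_v, w_o: (d_model, d_model)."""
    n, d_model = x.shape
    assert d_model % num_heads == 0
    d_head = d_model // num_heads

    def split(t):  # (n, d_model) -> (heads, n, d_head)
        return t.reshape(n, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)   # (heads, n, n) token affinities
    weights = softmax(scores, axis=-1)                     # each row sums to 1
    heads = weights @ v                                    # (heads, n, d_head)
    concat = heads.transpose(1, 0, 2).reshape(n, d_model)  # re-join the heads
    return concat @ w_o, weights

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    tokens = rng.standard_normal((4, 8))                   # e.g. 4 patch embeddings
    ws = [rng.standard_normal((8, 8)) for _ in range(4)]
    out, attn = multi_head_self_attention(tokens, *ws, num_heads=2)
    print(out.shape, attn.shape)
```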

Applications of GPU Computation in Mathematica

With Mathematica, the enormous parallel processing power of Graphics Processing Units (GPUs) can be used from an integrated built-in interface. Incorporating GPU technology into Mathematica allows high-performance solutions to be developed in many areas such as financial simulation, image

From playlist Wolfram Technology Conference 2011

Lesson 12: Deep Learning Part 2 2018 - Generative Adversarial Networks (GANs)

NB: Please go to http://course.fast.ai/part2.html to view this video since there is important updated information there. If you have questions, use the forums at http://forums.fast.ai. We start today with a deep dive into the DarkNet architecture used in YOLOv3, and use it to better under

From playlist Cutting Edge Deep Learning for Coders 2

Remove an unwanted tone from a signal, and compensate for the delay introduced in the process using Signal Processing Toolbox™. For more on Signal Processing Toolbox, visi

From playlist Signal Processing and Communications
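
The workflow above uses MATLAB's toolbox. As a language-neutral illustration, this Python sketch removes a tone with a linear-phase FIR bandstop filter. It then compensates the delay by shifting the output back (N-1)/2 samples, which is the constant group delay of a symmetric FIR. This is our own technique choice, not the toolbox's API. The 250 Hz tone, the 200 to 300 Hz stopband and the 201 taps are hypothetical values.

```python
import math

def windowed_sinc_lowpass(num_taps, fc):
    """Hamming-windowed sinc lowpass; fc in cycles/sample, num_taps odd, unit DC gain."""
    mid = (num_taps - 1) // 2
    h = []
    for n in range(num_taps):
        k = n - mid
        ideal = 2 * fc if k == 0 else math.sin(2 * math.pi * fc * k) / (math.pi * k)
        h.append(ideal * (0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))))
    s = sum(h)
    return [c / s for c in h]

def bandstop(num_taps, f_lo, f_hi, fs):
    """Lowpass(f_lo) plus highpass(f_hi), where highpass = impulse - lowpass(f_hi)."""
    lo = windowed_sinc_lowpass(num_taps, f_lo / fs)
    hi = windowed_sinc_lowpass(num_taps, f_hi / fs)
    h = [a - b for a, b in zip(lo, hi)]
    h[(num_taps - 1) // 2] += 1.0
    return h

def filter_delay_compensated(h, x):
    """Convolve x with a symmetric FIR h and remove its (N-1)/2-sample delay."""
    delay = (len(h) - 1) // 2
    padded = list(x) + [0.0] * delay  # trailing zeros flush the filter so len(out) == len(x)
    y = []
    for i in range(len(padded)):
        acc = 0.0
        for j, c in enumerate(h):
            if i - j >= 0:
                acc += c * padded[i - j]
        y.append(acc)
    return y[delay:]  # y[delay + n] lines up with x[n]

if __name__ == "__main__":
    fs = 1000.0
    x = [math.sin(2 * math.pi * 20 * n / fs) + 0.5 * math.sin(2 * math.pi * 250 * n / fs)
         for n in range(1000)]
    y = filter_delay_compensated(bandstop(201, 200.0, 300.0, fs), x)
    print(max(abs(y[n] - math.sin(2 * math.pi * 20 * n / fs)) for n in range(100, 900)))
```

Away from the edges, the output tracks the clean 20 Hz component sample for sample. Without the shift, it would lag by 100 samples.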