Kernel Recipes 2022 - Checking your work: validating the kernel by building and testing in CI

The Linux kernel is one of the most complex pieces of software ever written. Because the kernel runs in ring 0, bugs in it are a serious problem, so having confidence in its correctness and robustness is incredibly important. This is difficult enough for a single version and configuration…

From playlist Kernel Recipes 2022

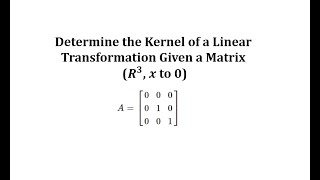

Determine the Kernel of a Linear Transformation Given a Matrix (R3, x to 0)

This video explains how to determine the kernel of a linear transformation.

From playlist Kernel and Image of Linear Transformation

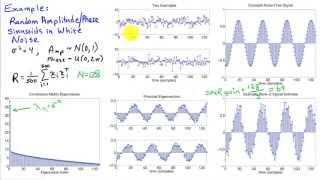

Visit http://AllSignalProcessing.com for more signal processing content, including concept/screenshot files, quizzes, MATLAB and data files. Representing multivariate random signals using principal components: principal component analysis identifies the basis vectors that describe the la…

From playlist Random Signal Characterization

Kernel of a group homomorphism

In this video I introduce the definition of the kernel of a group homomorphism: the set of all elements of the first group that the homomorphism maps to the identity element of the second group. The video also contains proofs showing that the kernel is a normal subgroup.

From playlist Abstract algebra
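To make the definition in the group-homomorphism entry concrete, here is a minimal sketch using the sign homomorphism from the symmetric group S_3 to the multiplicative group {+1, -1}. This example is an illustrative choice, not necessarily one used in the video; the helper names (`compose`, `inverse`, `sign`, `kernel`, `is_normal`) are hypothetical. Permutations are stored as tuples, the kernel is computed directly from the definition, and normality is checked by brute-force conjugation.

```python
from itertools import permutations

def compose(p, q):
    """Composition p∘q of permutations stored as tuples: apply q first, then p."""
    return tuple(p[q[i]] for i in range(len(q)))

def inverse(p):
    """Inverse permutation: if p sends i to p[i], the inverse sends p[i] back to i."""
    inv = [0] * len(p)
    for i, image in enumerate(p):
        inv[image] = i
    return tuple(inv)

def sign(p):
    """Sign homomorphism S_n -> {+1, -1}: +1 for an even number of inversions, else -1."""
    n = len(p)
    inversions = sum(1 for i in range(n) for j in range(i + 1, n) if p[i] > p[j])
    return 1 if inversions % 2 == 0 else -1

def kernel(group, phi, identity):
    """Kernel of phi: every element of the group that phi sends to the codomain's identity."""
    return {g for g in group if phi(g) == identity}

def is_normal(subgroup, group):
    """True if g k g^{-1} stays in the subgroup for every g in the group and k in the subgroup."""
    return all(compose(compose(g, k), inverse(g)) in subgroup
               for g in group for k in subgroup)

S3 = set(permutations(range(3)))   # the symmetric group on {0, 1, 2}
K = kernel(S3, sign, 1)            # the identity of {+1, -1} under multiplication is 1
print(sorted(K))                   # [(0, 1, 2), (1, 2, 0), (2, 0, 1)]
print(is_normal(K, S3))            # True
```

The kernel here is the alternating group A_3 (the even permutations). As a contrast, `is_normal({(0, 1, 2), (1, 0, 2)}, S3)` returns `False`: the subgroup generated by a single transposition is not normal, so it cannot be the kernel of any homomorphism out of S_3.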

Introduction to the Kernel and Image of a Linear Transformation

This video introduces the topics of kernel and image of a linear transformation.

From playlist Kernel and Image of Linear Transformation

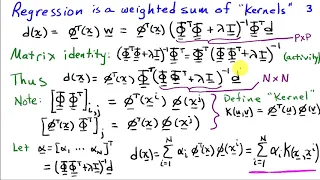

Support Vector Machines (3): Kernels

The kernel trick in the SVM dual; examples of kernels; kernel form for least-squares regression

From playlist cs273a
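The SVM entry above refers to the kernel trick: a kernel function evaluates an inner product in a feature space without ever constructing the features. A minimal sketch, assuming the degree-2 polynomial kernel k(x, z) = (x·z + c)^2 on 2-D inputs (an illustrative choice, not necessarily one of the lecture's examples), checks that identity numerically against an explicit 6-dimensional feature map. The function names are hypothetical.

```python
import math

def poly_kernel(x, z, c=1.0):
    """Degree-2 polynomial kernel k(x, z) = (x·z + c)^2, evaluated directly on 2-D inputs."""
    return (x[0] * z[0] + x[1] * z[1] + c) ** 2

def feature_map(x, c=1.0):
    """Explicit 6-dimensional feature map phi with phi(x)·phi(z) = (x·z + c)^2 for 2-D inputs."""
    x1, x2 = x
    r2 = math.sqrt(2.0)
    rc = math.sqrt(c)
    return [c, r2 * rc * x1, r2 * rc * x2, x1 * x1, x2 * x2, r2 * x1 * x2]

def dot(u, v):
    """Ordinary inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))

x, z = (1.0, 2.0), (3.0, -1.0)
print(poly_kernel(x, z))                    # 4.0, computed without building any features
print(dot(feature_map(x), feature_map(z)))  # the same value up to floating-point rounding
```

The point of the trick is cost: the kernel needs one 2-D dot product, while the explicit route builds two 6-D vectors, and the gap grows quickly with input dimension and polynomial degree.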

The Kernel Trick - THE MATH YOU SHOULD KNOW!

Some parametric methods, like polynomial regression and Support Vector Machines, stand out as being very versatile. This is due to a concept called "kernelization". In this video, we kernelize linear regression and show how the same idea can be incorporated into other algorithms to solv…

From playlist The Math You Should Know
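The kernelization of linear regression mentioned in the entry above can be sketched as follows, assuming ridge (L2-regularized) least squares with $\lambda > 0$ so that the inverses exist; the video's own derivation may differ. With a data matrix $X \in \mathbb{R}^{n \times d}$ whose rows are the inputs $\mathbf{x}_i$, and targets $\mathbf{y}$, the identity $X^\top (X X^\top + \lambda I_n) = (X^\top X + \lambda I_d) X^\top$ rewrites the solution so that it depends on the data only through inner products, which can then be replaced by a kernel $k$:

$$
\begin{aligned}
\mathbf{w} &= (X^\top X + \lambda I_d)^{-1} X^\top \mathbf{y} = X^\top (X X^\top + \lambda I_n)^{-1} \mathbf{y} = X^\top \boldsymbol{\alpha}, \\
f(\mathbf{x}) &= \mathbf{w}^\top \mathbf{x} = \sum_{i=1}^{n} \alpha_i \langle \mathbf{x}_i, \mathbf{x} \rangle
\;\longrightarrow\; \sum_{i=1}^{n} \alpha_i \, k(\mathbf{x}_i, \mathbf{x}),
\qquad \boldsymbol{\alpha} = (K + \lambda I_n)^{-1} \mathbf{y}, \quad K_{ij} = k(\mathbf{x}_i, \mathbf{x}_j).
\end{aligned}
$$

With the linear kernel $k(\mathbf{x}_i, \mathbf{x}_j) = \langle \mathbf{x}_i, \mathbf{x}_j \rangle$ this reproduces ordinary ridge regression exactly; other kernels give nonlinear regression while only ever solving an $n \times n$ system.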

Determine a Basis for the Kernel of a Matrix Transformation (3 by 4)

This video explains how to determine a basis for the kernel of a matrix transformation.

From playlist Kernel and Image of Linear Transformation

Applied Machine Learning 2019 - Lecture 16 - NMF; Outlier detection

Non-negative matrix factorization for feature extraction; outlier detection with probabilistic models; isolation forests; one-class SVMs. Materials and slides on the class website: https://www.cs.columbia.edu/~amueller/comsw4995s19/schedule/

From playlist Applied Machine Learning - Spring 2019

Stanford CS229: Machine Learning | Summer 2019 | Lecture 23 - Course Recap and Wrap Up

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/3B6WitS. Anand Avati, Computer Science, PhD. To follow along with the course schedule and syllabus, visit: http://cs229.stanford.edu/syllabus-summer2019.html

From playlist Stanford CS229: Machine Learning Course | Summer 2019 (Anand Avati)

ML Tutorial: Probabilistic Dimensionality Reduction, Part 1/2 (Neil Lawrence)

Machine Learning Tutorial at Imperial College London: Probabilistic Dimensionality Reduction, Part 1/2. Neil Lawrence (University of Sheffield), March 11, 2015

From playlist Machine Learning Tutorials

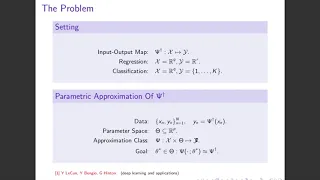

2020.05.28 Andrew Stuart - Supervised Learning between Function Spaces

Consider separable Banach spaces $X$ and $Y$, and equip $X$ with a probability measure $m$. Let $F\colon X \to Y$ be an unknown operator. Given data pairs $\{x_j, F(x_j)\}$ with $\{x_j\}$ drawn i.i.d. from $m$, the goal of supervised learning is to approximate $F$. The proposed approach is motivated by the recent su…

From playlist One World Probability Seminar

ML Tutorial: Probabilistic Dimensionality Reduction, Part 2/2 (Neil Lawrence)

Machine Learning Tutorial at Imperial College: Probabilistic Dimensionality Reduction, Part 2/2. Neil Lawrence (University of Sheffield), October 21, 2015

From playlist Machine Learning Tutorials

Outliers in weakly Confined Coulomb-type systems by Alon Nishry

PROGRAM: TOPICS IN HIGH DIMENSIONAL PROBABILITY. ORGANIZERS: Anirban Basak (ICTS-TIFR, India) and Riddhipratim Basu (ICTS-TIFR, India). DATE & TIME: 02 January 2023 to 13 January 2023. VENUE: Ramanujan Lecture Hall. This program will focus on several interconnected themes in modern probab…

From playlist TOPICS IN HIGH DIMENSIONAL PROBABILITY

Seventh Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series Talk

Date: Wednesday, December 2, 10:00am EDT. Speaker: Martin Burger, FAU. Title: Nonlinear spectral decompositions in imaging and inverse problems. Abstract: This talk will describe the development of a variational theory generalizing classical spectral decompositions in linear filters and si…

From playlist Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series

Mohamed Ndaoud - Constructing the fractional Brownian motion

In this talk, we give a new series expansion to simulate B, a fractional Brownian motion, based on harmonic analysis of its auto-covariance function. The construction proposed here reveals a link between the Karhunen-Loève theorem and harmonic analysis for Gaussian processes with stationarity co…

From playlist Les probabilités de demain 2017
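As background for the fractional Brownian motion talk above: assuming the standard normalization (zero mean, $B(0) = 0$, $\mathbb{E}[B(1)^2] = 1$) and Hurst parameter $H \in (0, 1)$, the auto-covariance of $B$ and of its unit-step increments $X_n = B(n+1) - B(n)$ are the textbook formulas below. The talk's series expansion itself is not reproduced here.

$$
\mathbb{E}\big[B(s)\,B(t)\big] = \tfrac{1}{2}\left(|s|^{2H} + |t|^{2H} - |t - s|^{2H}\right),
\qquad
\operatorname{Cov}\big(X_n, X_{n+k}\big) = \tfrac{1}{2}\left(|k+1|^{2H} - 2|k|^{2H} + |k-1|^{2H}\right).
$$

The increment covariance depends only on the lag $k$, which is the stationarity that makes harmonic analysis applicable. Setting $H = 1/2$ recovers standard Brownian motion: the first formula becomes $\min(s, t)$ for $s, t \ge 0$, and the increments become uncorrelated.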

Omer Bobrowski: Random Simplicial Complexes, Lecture I

A simplicial complex is a collection of vertices, edges, triangles, tetrahedra and higher-dimensional simplices glued together. In other words, it is a higher-dimensional generalization of a graph. In recent years there has been a growing effort in developing the theory of random simplicia…

From playlist Workshop: High dimensional spatial random systems
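The simplicial-complex talk above describes simplices "glued together". In the abstract (combinatorial) setting, this gluing condition is usually stated as closure under faces: every face of a simplex in the collection must also be in the collection. A minimal sketch of that check, with each simplex represented as a set of vertex labels (a representation chosen here for illustration; the function names are hypothetical):

```python
from itertools import combinations

def faces(simplex):
    """All non-empty proper faces of a simplex, each as a frozenset of vertices."""
    return {frozenset(f) for r in range(1, len(simplex)) for f in combinations(simplex, r)}

def is_simplicial_complex(simplices):
    """Closure under faces: every face of every simplex must itself be in the collection."""
    complex_ = {frozenset(s) for s in simplices}
    return all(faces(s) <= complex_ for s in complex_)

# A filled triangle: three vertices, three edges, and the 2-simplex {0, 1, 2}.
filled_triangle = [{0}, {1}, {2}, {0, 1}, {1, 2}, {0, 2}, {0, 1, 2}]
print(is_simplicial_complex(filled_triangle))  # True

# Dropping the edge {0, 2} breaks closure, because it is a face of {0, 1, 2}.
print(is_simplicial_complex([{0}, {1}, {2}, {0, 1}, {1, 2}, {0, 1, 2}]))  # False
```

A graph is the special case in which every simplex has at most two vertices; the closure condition then only says that the endpoints of every edge are vertices of the graph.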

Elisabeth Gassiat - Manifold Learning with Noisy Data

It is a common idea that high-dimensional data (or features) may lie on a low-dimensional support, which makes learning easier. In this talk, I will present a very general set-up in which it is possible to recover low-dimensional non-linear structures from noisy data, the noise being totally unkno…

From playlist 8th edition of the Statistics & Computer Science Day for Data Science in Paris-Saclay, 9 March 2023

Concept Check: Describe the Kernel of a Linear Transformation (Reflection Across y-axis)

This video explains how to describe the kernel of a linear transformation that is a reflection across the y-axis.

From playlist Kernel and Image of Linear Transformation
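For the reflection entry above, assuming the standard reflection across the y-axis in $\mathbb{R}^2$, $T(x, y) = (-x, y)$, the kernel can be read off from the matrix directly:

$$
T\begin{pmatrix} x \\ y \end{pmatrix}
= \begin{pmatrix} -1 & 0 \\ 0 & 1 \end{pmatrix} \begin{pmatrix} x \\ y \end{pmatrix}
= \begin{pmatrix} -x \\ y \end{pmatrix}
= \begin{pmatrix} 0 \\ 0 \end{pmatrix}
\iff x = 0 \text{ and } y = 0,
\qquad \text{so} \qquad \ker T = \{\mathbf{0}\}.
$$

This matches the geometric picture: a reflection is invertible (it is its own inverse), so the only vector it sends to the origin is the origin itself.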