We propose a sparse regression method capable of discovering the governing partial differential equation(s) of a given system from time series measurements in the spatial domain. The regression framework relies on sparsity-promoting techniques to select the nonlinear and partial derivative

From playlist Research Abstracts from Brunton Lab

Least squares method for simple linear regression

In this video I show you how to derive the equations for the coefficients of the simple linear regression line. The least squares method for the simple linear regression line requires the calculation of the intercept and the slope, commonly written as beta-sub-zero and beta-sub-one. Deriv

From playlist Machine learning

Partial fractions are SPECIAL! (Repeated linear factors)

► My Integrals course: https://www.kristakingmath.com/integrals-course The tricky thing about partial fractions is that there are four kinds of partial fractions problems. The kind of partial fractions decomposition you'll need to perform depends on the kinds of factors in your denominato

From playlist Integrals

Generalized Hermite Reduction, Creative Telescoping, and Definite Integration of D-Finite Functions

Hermite reduction is a classical algorithmic tool in symbolic integration. It is used to decompose a given rational function as a sum of a function with simple poles and the derivative of a

From playlist DART X

Numerically Calculating Partial Derivatives

In this video we discuss how to calculate partial derivatives of a function using numerical techniques. In other words, these partials are calculated without needing an analytical representation of the function. This is useful in situations where the function in question is either too co

From playlist Vector Differential Calculus

PARTIAL FRACTIONS example with distinct linear factors

► My Integrals course: https://www.kristakingmath.com/integrals-course The tricky thing about partial fractions is that there are four kinds of partial fractions problems. The kind of partial fractions decomposition you'll need to perform depends on the kinds of factors in your denominato

From playlist Integrals

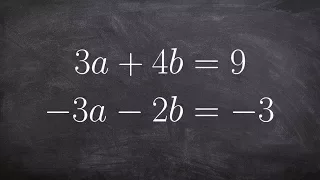

Labeling a System by Solving Using Elimination Method

👉Learn how to solve a system (of equations) by elimination. A system of equations is a set of equations which are collectively satisfied by one solution of the variables. The elimination method of solving a system of equations involves making the coefficient of one of the variables to be e

From playlist Solve a System of Equations Using Elimination | Medium

Using residuals to analyze the data

From playlist Unit 3: Linear and Non-Linear Regression

Lecture 10 | Convex Optimization I (Stanford)

Professor Stephen Boyd, of the Stanford University Electrical Engineering department, lectures on approximation and fitting within convex optimization for the course, Convex Optimization I (EE 364A). Convex Optimization I concentrates on recognizing and solving convex optimization probl

From playlist Lecture Collection | Convex Optimization

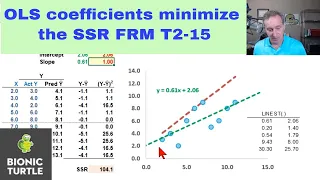

Linear regression: OLS coefficients minimize the SSR (FRM T2-15)

[my XLS is here https://trtl.bz/2uiivIm] The ordinary least squares (OLS) regression coefficients are determined by the "best fit" line that minimizes the sum of squared residuals (SSR). Discuss this video in our FRM forum! https://trtl.bz/2Kn3uJJ Subscribe here https://www.youtube.com/c

From playlist Quantitative Analysis (FRM Topic 2)

Mod-01 Lec-23 Discretization of ODE-BVP using Least Square Approximation and Galerkin Method

Advanced Numerical Analysis by Prof. Sachin C. Patwardhan, Department of Chemical Engineering, IIT Bombay. For more details on NPTEL visit http://nptel.ac.in

From playlist IIT Bombay: Advanced Numerical Analysis | CosmoLearning.org

Laura Grigori - Randomization techniques for solving large scale linear algebra problems

Recorded 30 March 2023. Laura Grigori of Sorbonne Université presents "Randomization techniques for solving large scale linear algebra problems" at IPAM's Increasing the Length, Time, and Accuracy of Materials Modeling Using Exascale Computing workshop. Learn more online at: http://www.ipa

From playlist 2023 Increasing the Length, Time, and Accuracy of Materials Modeling Using Exascale Computing

Optimization in the Wolfram Language

This presentation by Rob Knapp focuses on optimization functionality in the Wolfram Language. Examples are shown to highlight recent progress in convex optimization, including support for complex variables, robust optimization and parametric optimization.

From playlist Wolfram Technology Conference 2020

Optimization with inexact gradient and function by Serge Gratton

DISCUSSION MEETING: STATISTICAL PHYSICS OF MACHINE LEARNING. ORGANIZERS: Chandan Dasgupta, Abhishek Dhar and Satya Majumdar. DATE: 06 January 2020 to 10 January 2020. VENUE: Madhava Lecture Hall, ICTS Bangalore. Machine learning techniques, especially “deep learning” using multilayer n

From playlist Statistical Physics of Machine Learning 2020

36th Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series Talk

Title: Methods for $\ell_p$-$\ell_q$ minimization with applications to image restoration and regression with nonconvex loss and penalty. Date: December 1, 2021, 10:00am Eastern Time Zone (US & Canada) / 2:00pm GMT Speaker: Lothar Reichel, Kent State University Abstract: Minimization prob

From playlist Imaging & Inverse Problems (IMAGINE) OneWorld SIAM-IS Virtual Seminar Series

Jorge Nocedal: "Tutorial on Optimization Methods for Machine Learning, Pt. 1"

Graduate Summer School 2012: Deep Learning, Feature Learning "Tutorial on Optimization Methods for Machine Learning, Pt. 1" Jorge Nocedal, Northwestern University Institute for Pure and Applied Mathematics, UCLA July 19, 2012 For more information: https://www.ipam.ucla.edu/programs/summ

From playlist GSS2012: Deep Learning, Feature Learning

Fitting a Line using Least Squares #SoME2

Interactive links: 1DOF: https://cryptic-spinach.github.io/1DoF/ 2DOF: https://cryptic-spinach.github.io/2DoF/ 2DOF mobile: https://cryptic-spinach.github.io/least_squares_2DoF/ Collaboration links: I worked with Chase Reynolds for the #SoME2 contest. Check out his website and Twitter her

From playlist Summer of Math Exposition 2 videos

DDPS | "When and why physics-informed neural networks fail to train" by Paris Perdikaris

Physics-informed neural networks (PINNs) have lately received great attention thanks to their flexibility in tackling a wide range of forward and inverse problems involving partial differential equations. However, despite their noticeable empirical success, little is known about how such c

From playlist Data-driven Physical Simulations (DDPS) Seminar Series

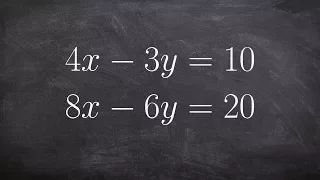

Solve a System of Linear Equations Using Elimination

👉Learn how to solve a system (of equations) by elimination. A system of equations is a set of equations which are collectively satisfied by one solution of the variables. The elimination method of solving a system of equations involves making the coefficient of one of the variables to be e

From playlist Solve a System of Equations Using Elimination | Medium
