(ML 7.7.A1) Dirichlet distribution

Definition of the Dirichlet distribution, what it looks like, intuition for what the parameters control, and some statistics: mean, mode, and variance.

From playlist Machine Learning
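
For quick reference, the statistics named in the description above can be written out as follows. This is a sketch using standard notation that is assumed here rather than taken from the video: $\alpha = (\alpha_1, \dots, \alpha_K)$ are the concentration parameters and $\alpha_0 = \sum_{k=1}^{K} \alpha_k$.

```latex
% Assumed notation: X ~ Dirichlet(\alpha_1, ..., \alpha_K), \alpha_0 = \sum_k \alpha_k
\begin{align*}
  \mathbb{E}[X_i] &= \frac{\alpha_i}{\alpha_0}, \\
  \operatorname{mode}(X)_i &= \frac{\alpha_i - 1}{\alpha_0 - K}
      \quad \text{(valid when every } \alpha_k > 1\text{)}, \\
  \operatorname{Var}[X_i] &= \frac{\alpha_i\,(\alpha_0 - \alpha_i)}{\alpha_0^{2}\,(\alpha_0 + 1)}.
\end{align*}
```

Scaling all $\alpha_k$ by a common factor leaves the mean unchanged and shrinks the variance, which is one way to read what the parameters control.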

Multivariate Gaussian distributions

Properties of the multivariate Gaussian probability distribution

From playlist cs273a

Value distribution of long Dirichlet polynomials and applications to the Riemann...-Maksym Radziwill

Maksym Radziwill. Value distribution of long Dirichlet polynomials and applications to the Riemann zeta-function. Stanford University; Member, School of Mathematics. October 1, 2013. For more videos, visit http://video.ias.edu

From playlist Mathematics

(ML 7.8) Dirichlet-Categorical model (part 2)

The Dirichlet distribution is a conjugate prior for the Categorical distribution (i.e. a PMF on a finite set). We derive the posterior distribution and the (posterior) predictive distribution under this model.

From playlist Machine Learning
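
The conjugate update described above might be sketched as follows. This is a minimal illustration and not code from the lectures: the function names `dirichlet_posterior` and `posterior_predictive`, the uniform prior, and the toy data are all assumptions made for the example. Under a Dirichlet prior with parameters `alpha`, observing category counts `n` gives a Dirichlet posterior with parameters `alpha + n`, and the posterior predictive probability of category `k` is its posterior parameter divided by the sum of all posterior parameters.

```python
from collections import Counter


def dirichlet_posterior(alpha, observations):
    """Return posterior Dirichlet parameters: prior alpha plus observed counts.

    `observations` is a sequence of category indices in range(len(alpha)).
    """
    counts = Counter(observations)
    return [a + counts.get(k, 0) for k, a in enumerate(alpha)]


def posterior_predictive(alpha_post):
    """Probability that the next draw falls in each category."""
    total = sum(alpha_post)
    return [a / total for a in alpha_post]


# Hypothetical example: uniform prior over 3 categories, 5 observations.
alpha = [1.0, 1.0, 1.0]
data = [0, 2, 2, 1, 2]

alpha_post = dirichlet_posterior(alpha, data)
pred = posterior_predictive(alpha_post)

print(alpha_post)
print(pred)
```

With a uniform prior the predictive probabilities are the observed frequencies smoothed by one pseudo-count per category, so unseen categories keep nonzero probability.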

(ML 7.7) Dirichlet-Categorical model (part 1)

The Dirichlet distribution is a conjugate prior for the Categorical distribution (i.e. a PMF on a finite set). We derive the posterior distribution and the (posterior) predictive distribution under this model.

From playlist Machine Learning

Continuous Distributions: Beta and Dirichlet Distributions

Video Lecture from the course INST 414: Advanced Data Science at UMD's iSchool. Full course information here: http://www.umiacs.umd.edu/~jbg/teaching/INST_414/

From playlist Advanced Data Science

Topic Models: Variational Inference for Latent Dirichlet Allocation (with Xanda Schofield)

This is a single lecture from a course. If you like the material and want more context (e.g., the lectures that came before), check out the whole course, including homework and readings: https://sites.google.com/umd.edu/2021cl1webpage/ Xanda's webpage: https://www.cs.hmc.edu/~xanda

From playlist Computational Linguistics I

Latent Dirichlet Allocation (Part 1 of 2)

Latent Dirichlet Allocation is a powerful machine learning technique used to sort documents by topic. Learn all about it in this video! This is part 1 of a 2 video series. Video 2: https://www.youtube.com/watch?v=BaM1uiCpj_E For information on my book "Grokking Machine Learning": https:/

From playlist Unsupervised Learning

undergraduate machine learning 23: Dirichlet and categorical distributions

Dirichlet and Categorical models. Application to Twitter sentiment prediction with Naive Bayes. The slides are available here: http://www.cs.ubc.ca/~nando/340-2012/lectures.php This course was taught in 2012 at UBC by Nando de Freitas.

From playlist undergraduate machine learning at UBC 2012

Henrik Hult: Power-laws and weak convergence of the Kingman coalescent

The Kingman coalescent is a fundamental process in population genetics modelling the ancestry of a sample of individuals backwards in time. In this paper, weak convergence is proved for a sequence of Markov chains consisting of two components related to the Kingman coalescent, under a pare

From playlist Probability and Statistics

(Original Paper) Latent Dirichlet Allocation (algorithm) | AISC Foundational

Toronto Deep Learning Series, 15 November 2018 Paper Review: http://www.jmlr.org/papers/volume3/blei03a/blei03a.pdf Speaker: Renyu Li (Wysdom.ai) Host: Munich Reinsurance Co-Canada Date: Nov 15th, 2018 Latent Dirichlet Allocation We describe latent Dirichlet allocation (LDA), a genera

From playlist Natural Language Processing

Applied Machine Learning 2019 - Lecture 18 - Topic Models

Latent Semantic Analysis, Non-negative Matrix Factorization for topic models, Latent Dirichlet Allocation, Markov Chain Monte Carlo and Gibbs sampling. Class website with slides and more materials: https://www.cs.columbia.edu/~amueller/comsw4995s19/schedule/

From playlist Applied Machine Learning - Spring 2019

Susan Holmes: "Latent variables explain dependencies in bacterial communities"

Emerging Opportunities for Mathematics in the Microbiome 2020 "Latent variables explain dependencies in bacterial communities" Susan Holmes - Stanford University, Statistics Abstract: Data from sequencing bacterial communities are formalized as contingency tables whose columns correspond

From playlist Emerging Opportunities for Mathematics in the Microbiome 2020

What is a Unimodal Distribution?

Quick definition of a unimodal distribution and how it compares to a bimodal distribution and a multimodal distribution.

From playlist Probability Distributions

Training Latent Dirichlet Allocation: Gibbs Sampling (Part 2 of 2)

This is the second of a series of two videos on Latent Dirichlet Allocation (LDA), a powerful technique to sort documents into topics. In this video, we learn to train an LDA model using Gibbs sampling. The first video is here: https://www.youtube.com/watch?v=T05t-SqKArY

From playlist Unsupervised Learning

Statistics: Ch 7 Sample Variability (3 of 14) The Inference of the Sample Distribution

Visit http://ilectureonline.com for more math and science lectures! To donate: http://www.ilectureonline.com/donate https://www.patreon.com/user?u=3236071 We will learn if the number of samples is greater than or equal to 25 then: 1) the distribution of the sample means is a normal distr

From playlist STATISTICS CH 7 SAMPLE VARIABILILTY

From playlist Contributed talks One World Symposium 2020

Stanford CS234: Reinforcement Learning | Winter 2019 | Lecture 13 - Fast Reinforcement Learning III

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai Professor Emma Brunskill, Stanford University http://onlinehub.stanford.edu/ Professor Emma Brunskill Assistant Professor, Computer Science Stanford AI for Hu

From playlist Stanford CS234: Reinforcement Learning | Winter 2019

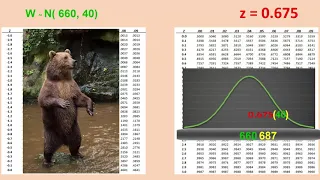

Determining values of a variable at a particular percentile in a normal distribution

From playlist Unit 2: Normal Distributions