This lecture gives an overview of neural networks, which play an important role in machine learning today. Book website: http://databookuw.com/ Steve Brunton's website: eigensteve.com

From playlist Intro to Data Science

Neural Network Architectures & Deep Learning

This video describes the variety of neural network architectures available to solve various problems in science and engineering. Examples include convolutional neural networks (CNNs), recurrent neural networks (RNNs), and autoencoders. Book website: http://databookuw.com/ Steve Brunton

From playlist Data Science

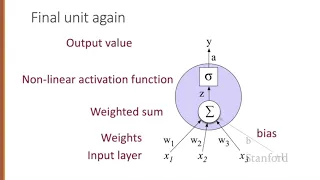

Neural Networks 1: Neural Units

From playlist Week 5: Neural Networks

What is Neural Network in Machine Learning | Neural Network Explained | Neural Network | Simplilearn

This Simplilearn video is about neural networks in machine learning. The tutorial covers the fundamentals of neural networks, with theoretical and practical demonstrations.

From playlist Machine Learning Algorithms [2022 Updated]

Deep Learning with Neural Networks and TensorFlow Introduction

Welcome to a new section in our Machine Learning Tutorial series: Deep Learning with Neural Networks and TensorFlow. The artificial neural network is a biologically inspired approach to machine learning, intended to mimic your brain (a biological neural network). The Artificial

From playlist Machine Learning with Python

GLOM: How to represent part-whole hierarchies in a neural network (Geoff Hinton's Paper Explained)

Geoffrey Hinton describes GLOM, a computer vision model that combines transformers, neural fields, contrastive learning, capsule networks, denoising autoencoders and RNNs. GLOM decomposes an image into a parse tree of objects and their parts. However, unlike previo

From playlist Papers Explained

Dynamic Routing Between Capsules

Geoff Hinton's next big idea! Capsule Networks are an alternative way of implementing neural networks by dividing each layer into capsules. Each capsule is responsible for detecting the presence and properties of one particular entity in the input sample. This information is then allocated

From playlist Deep Learning Architectures
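In the capsule approach described above, each capsule outputs a vector whose length is read as the probability that its entity is present, and the Dynamic Routing Between Capsules paper uses a "squash" nonlinearity, v = (|s|^2 / (1 + |s|^2)) * s / |s|, to keep that length below 1 while preserving direction. The following is a minimal Python sketch of the squash function only, assuming vectors are plain lists of floats; the `eps` guard is an added assumption, and this is not the paper's code and omits dynamic routing itself.

```python
import math


def squash(s, eps=1e-9):
    """Shrink vector s to a length in [0, 1) while keeping its direction.

    v = (|s|^2 / (1 + |s|^2)) * (s / |s|)
    eps (an assumption of this sketch) avoids division by zero when s is all zeros.
    """
    norm_sq = sum(x * x for x in s)
    norm = math.sqrt(norm_sq)
    scale = norm_sq / (1.0 + norm_sq) / (norm + eps)
    return [scale * x for x in s]


def length(v):
    """Euclidean length of a vector given as a list."""
    return math.sqrt(sum(x * x for x in v))


# A long input vector is squashed to a length close to 1 ("entity present"),
# while a short one is squashed to a length close to 0.
print(round(length(squash([3.0, 4.0])), 4))    # 0.9615
print(round(length(squash([0.03, 0.04])), 4))  # 0.0025
```

Long vectors saturate near length 1 and short ones shrink toward 0, which is what lets a capsule's output length behave like a presence probability.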

Neural Networks for Images & Audio Workshop

Carlo Giacometti, Giulio Alessandrini & Markus van Almsick

From playlist Wolfram Technology Conference 2019

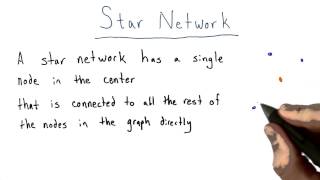

Star Network - Intro to Algorithms

This video is part of an online course, Intro to Algorithms. Check out the course here: https://www.udacity.com/course/cs215.

From playlist Introduction to Algorithms

Deep Learning Interview Questions and Answers | AI & Deep Learning Interview Questions | Edureka

AI and Deep Learning with TensorFlow: https://www.edureka.co/ai-deep-learning-with-tensorflow This video covers many of the most popular deep learning interview questions and answers. It also explains the process of deep learning and its various aspects. PG i

From playlist Deep Learning With TensorFlow Videos

Geoffrey Hinton talk "What is wrong with convolutional neural nets?"

Brain & Cognitive Sciences Fall Colloquium Series, recorded December 4, 2014. Talk given at MIT. Geoffrey Hinton talks about his capsules project. Related papers: https://arxiv.org/abs/1710.09829 and https://openreview.net/pdf?id=HJWLfGWRb

From playlist AI talks

The Hardware Lottery (Paper Explained)

We like to think that ideas in research succeed because of their merit, but this story is likely incomplete. The term "hardware lottery" describes the fact that certain algorithmic ideas succeed because they happen to be well suited to the prevailing hardware

From playlist Papers Explained

Geoffrey Hinton: "Does the Brain do Inverse Graphics?"

Graduate Summer School 2012: Deep Learning, Feature Learning. "Does the Brain do Inverse Graphics?" Geoffrey Hinton, University of Toronto. Institute for Pure and Applied Mathematics, UCLA, July 12, 2012. For more information: https://www.ipam.ucla.edu/programs/summer-schools/graduate-summe

From playlist GSS2012: Deep Learning, Feature Learning

CS25 I Stanford Seminar - How to represent part-whole hierarchies in a neural network, Geoff Hinton

"I will present a single idea about representation which allows advances made by several different groups to be combined into an imaginary system called GLOM. The advances include transformers, neural fields, contrastive representation learning, distillation and capsules. GLOM answers the

From playlist Stanford Seminars

Calling the Shot: How AI Predicted Fusion Ignition Before It Happened

The Data Science Institute (DSI) hosted a seminar by LLNL researchers Kelli Humbird and J. Luc Peterson on February 15, 2023. Read more about the DSI seminar series at https://data-science.llnl.gov/latest/seminar-series. At 1:03 a.m. on December 5, 2022, 192 laser beams at the National Ignit

From playlist DSI Virtual Seminar Series

Broadcasting Explained - Tensors for Deep Learning and Neural Networks

Tensors are the data structures of deep learning, and broadcasting is one of the most important operations for streamlining neural network code. Over the last couple of videos, we've immersed ourselves in tensors, and hopefully we now have a good understanding of how to

From playlist Deep Learning Deployment Basics - Neural Network Web Apps
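To make the broadcasting idea concrete, here is a minimal pure-Python sketch of the shape rule that NumPy-style libraries use: shapes are aligned from the rightmost dimension, a missing leading dimension counts as size 1, and two sizes are compatible only when they are equal or one of them is 1. The helper names `broadcast_shape` and `add_row_to_matrix` are hypothetical and written for illustration; a real framework applies the same rule inside its own tensor operations without materializing the stretched copies.

```python
from itertools import zip_longest


def broadcast_shape(a, b):
    """Return the shape produced by broadcasting shapes a and b (NumPy-style rule)."""
    result = []
    # Compare dimensions from the right; missing leading dims are treated as size 1.
    for x, y in zip_longest(reversed(a), reversed(b), fillvalue=1):
        if x != y and 1 not in (x, y):
            raise ValueError(f"shapes {a} and {b} cannot be broadcast together")
        # A size-1 dimension stretches to match the other size.
        result.append(y if x == 1 else x)
    return tuple(reversed(result))


def add_row_to_matrix(matrix, row):
    """Broadcast-add a 1-D row to each row of a 2-D matrix: (m, n) + (n,) -> (m, n)."""
    m, n = len(matrix), len(matrix[0])
    broadcast_shape((m, n), (len(row),))  # raises ValueError if incompatible
    stretched = row * n if len(row) == 1 else row  # a size-1 row is repeated n times
    return [[v + r for v, r in zip(mrow, stretched)] for mrow in matrix]


print(broadcast_shape((3, 1), (4,)))  # (3, 4)
print(add_row_to_matrix([[1, 2, 3], [4, 5, 6]], [10, 20, 30]))  # [[11, 22, 33], [14, 25, 36]]
```

Adding a bias vector to every row of a batch of activations is the typical neural-network case: the (n,) bias is broadcast across the m rows instead of being copied by hand.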

In this video, I present some applications of artificial neural networks and describe how such networks are typically structured. My hope is to create another video (soon) in which I describe how neural networks are actually trained from data.

From playlist Machine Learning
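As a rough illustration of how such networks are typically structured, the sketch below assumes a fully connected feedforward network: each layer's neurons take a weighted sum of all outputs from the previous layer, add a bias, and apply a nonlinearity (a sigmoid here). The layer sizes, weights, and choice of sigmoid are illustrative assumptions, not taken from the video, and the weights are arbitrary rather than trained.

```python
import math


def sigmoid(z):
    """Logistic activation, squashing any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation=sigmoid):
    """One fully connected layer: weighted sum of all inputs, plus bias, then nonlinearity."""
    return [activation(sum(w * x for w, x in zip(w_row, inputs)) + b)
            for w_row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Pass inputs through a list of (weights, biases) layers in order."""
    activations = inputs
    for weights, biases in layers:
        activations = dense_layer(activations, weights, biases)
    return activations


# Hypothetical 2-3-1 network: 2 inputs, a hidden layer of 3 neurons, 1 output neuron.
# One weight row per neuron; values are arbitrary, chosen only for illustration.
layers = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1]),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.2]),                                 # output layer
]
print(forward([1.0, 2.0], layers))
```

Training, which the description says is left for a later video, would adjust the weight and bias values in `layers` to fit data; the structure itself stays the same.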

Topographic VAEs learn Equivariant Capsules (Machine Learning Research Paper Explained)

Variational Autoencoders model the latent space as a set of independent Gaussian random variables, which the decoder maps to a data distribution. However, this independence is not always desirable: for example, when dealing with video sequences, we know that s

From playlist Papers Explained

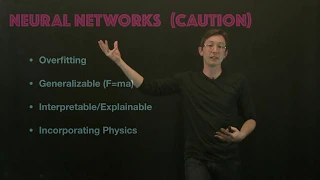

This lecture discusses some key limitations of neural networks and suggests avenues of ongoing development. Book website: http://databookuw.com/ Steve Brunton's website: eigensteve.com

From playlist Intro to Data Science