Logic: The Structure of Reason

As a tool for characterizing rational thought, logic cuts across many philosophical disciplines and lies at the core of mathematics and computer science. Drawing on Aristotle’s Organon, Russell’s Principia Mathematica, and other central works, this program tracks the evolution of logic, be

From playlist Logic & Philosophy of Mathematics

Syntax of PREDICATE LOGIC and WELL-FORMED FORMULAS (wffs) - Logic

In this video on #Logic / #PhilosophicalLogic we take a look at the requirements of well-formed formulas (wffs) in predicate logic and why we need predicate logic for stronger arguments. Then we do some practice questions. 0:00 - [Intro] 0:19 - [Why We Use Predicate Logic] 3:23 - [Syntax

From playlist Logic in Philosophy and Mathematics

An Overview of Set Theory for Linguists - Semantics in Linguistics

I introduce set theory for linguists. This focuses on language more so than the technical details, but it is enough to get you started in understanding semantics.

From playlist Semantics in Linguistics

There is a great deal of confusion about the term 'grammar'. Most people associate it with a book written about a language. In fact, there are various manifestations of this traditional term: prescriptive, descriptive and reference grammar. In theoretical linguistics, grammars are theory

From playlist VLC107 - Syntax: Part II

An introduction to the general types of logic statements

From playlist Geometry

Working with Functions (1 of 2: Notation & Terminology)

More resources available at www.misterwootube.com

From playlist Working with Functions

This first E-Lecture on Predicate Logic is meant as a gentle introduction. It first points out why propositional logic alone is not sufficient for the formalization of sentence meaning and then introduces the central machinery of predicate logic using several examples with which the studen

From playlist VLC103 - The Nature of Meaning

Negativity and semantic change - Will Hamilton, Stanford University

It is often argued that natural language is biased towards negative differentiation, meaning that there is more lexical diversity in negative affectual language, compared to positive language. However, we lack an understanding of the diachronic linguistic mechanisms associated with negativ

From playlist Turing Seminars

André Freitas - Building explanation machines for science: a neuro-symbolic perspective

Recorded 12 January 2023. André Freitas of the University of Manchester presents "Building explanation machines for science: a neuro-symbolic perspective" at IPAM's Explainable AI for the Sciences: Towards Novel Insights Workshop. Learn more online at: http://www.ipam.ucla.edu/programs/wor

From playlist 2023 Explainable AI for the Sciences: Towards Novel Insights

Stanford Seminar - Learning to Code: Why we Fail, How We Flourish

Andy Ko, University of Washington. Dynamic professionals sharing their industry experience and cutting-edge research within the human-computer interaction (HCI) field will be presented in this seminar. Each week, a unique collection of technologists, artists, designers, and activists will

From playlist Stanford Seminars

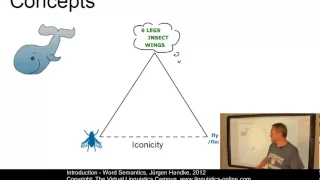

How are lexemes and objects related? How can we define the relationships between the lexemes of a language? These questions are central to word semantics and define its main branches: reference and sense. This E-Lecture provides an overview of these main areas of word semantics.

From playlist VLC101 - Linguistic Fundamentals

GNDCon 2.0 // Keynote, Harald Sack: "The world is small and you always meet twice…"

Harald Sack (KIT / FIZ Karlsruhe) – Keynote. The sciences produce steadily growing volumes of data. Using them efficiently requires functioning infrastructures. The goal of the Nationale Forschungsdateninfrastruktur (National Research Data Infrastructure) is, for these data holdings across the entire research system, syst

From playlist ISE Conference Talks

Simplified Machine Learning Workflows with Anton Antonov, Session #6: Semantic Analysis (Part 1)

Anton Antonov, a senior mathematical programmer with a PhD in applied mathematics, live-demos key Wolfram Language features that are very useful in machine learning. In this session, he discusses the Latent Semantic Analysis Workflows. Notebook materials are available at: https://wolfr.am

From playlist Simplified Machine Learning Workflows with Anton Antonov

Stanford Seminar - Training Classifiers with Natural Language Explanations

Braden Hancock, Stanford University. February 20, 2019. Training accurate classifiers requires many labels, but each label provides only limited information (one bit for binary classification). In this work, we propose BabbleLabble, a framework for training classifiers in which an annotator

From playlist Stanford EE380-Colloquium on Computer Systems - Seminar Series

Peter Sewell: Underpinning mainstream engineering with mathematical semantics

LMS/BCS-FACS Evening Seminar November 2021

From playlist LMS/BCS-FACS Evening Seminars

Hibernate Tutorial 16 - CascadeTypes and Other Things

In this tutorial, we'll look at some concepts like CascadeType which can be configured for entity relationships.

From playlist Hibernate

Evaluating Performance of Large Language Models with Linguistics - Deep Random Talks S2E5

Listen to Amir Feizpour, Serena McDonnell and Bai Li speak about Natural Language Processing on this episode of DRT.

From playlist Deep Random Talks- Season 2

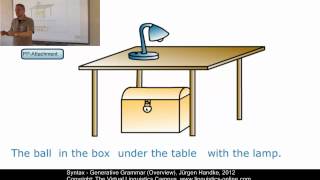

This E-Lecture discusses the fundamental ideas of generative grammar, the most influential grammar model in linguistic theory. In particular, we exemplify the main principles that account for the non-finite character of natural language as well as the phenomenon of native speaker competence.

From playlist VLC206 - Morphology and Syntax