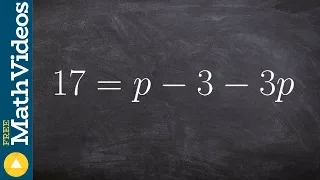

Solving an equation with the variable on the same side, ex 3: 17 = p - 3 - 3p

👉 Learn how to solve two step linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1. To solve for a variable in a two step linear equation, we first isolate the variable by using inverse operations (addition or subtraction) to move like terms to

From playlist Solve Two Step Equations with Two Variables
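Below is a worked solution of this example. It follows the steps the description names: combine like terms, undo the subtraction, then undo the multiplication. The step annotations are ours, not the video's.

$$
\begin{aligned}
17 &= p - 3 - 3p \\
17 &= -2p - 3 && \text{combine like terms: } p - 3p = -2p \\
20 &= -2p && \text{add 3 to both sides} \\
-10 &= p && \text{divide both sides by } -2
\end{aligned}
$$

Check: $-10 - 3 - 3(-10) = -10 - 3 + 30 = 17$.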

Solving an equation by combining like terms: 6 = 5c - 9 - 2c

👉 Learn how to solve two step linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1. To solve for a variable in a two step linear equation, we first isolate the variable by using inverse operations (addition or subtraction) to move like terms to

From playlist Solve Two Step Equations with Two Variables
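Here is a worked solution using the same approach. First combine the like terms, which turns the equation into a two-step equation, and then apply inverse operations. The annotations are ours.

$$
\begin{aligned}
6 &= 5c - 9 - 2c \\
6 &= 3c - 9 && \text{combine like terms: } 5c - 2c = 3c \\
15 &= 3c && \text{add 9 to both sides} \\
5 &= c && \text{divide both sides by } 3
\end{aligned}
$$

Check: $5(5) - 9 - 2(5) = 25 - 9 - 10 = 6$.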

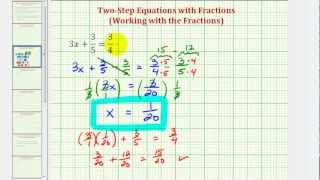

Ex 1: Solving a Two Step Equation with Fractions - NOT Clearing the Fractions

This video solves a two step equation with fractions by leaving the fractions in the equation and solving just like any other two step equation. Site: http://mathispower4u.com Blog: http://mathispower4u.wordpress.com

From playlist Solving Two-Step Equations
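The sketch below shows what "leaving the fractions in" means in code, using Python's `fractions.Fraction` so each step stays exact. The example equation (2/3)x + 1/4 = 5/6 is hypothetical and does not come from the video. The helper `solve_two_step` is our own name.

```python
from fractions import Fraction


def solve_two_step(a: Fraction, b: Fraction, c: Fraction) -> Fraction:
    """Solve a*x + b = c for x, keeping every quantity as an exact fraction."""
    if a == 0:
        raise ValueError("coefficient of x must be nonzero")
    # Step 1: undo the addition by subtracting b from both sides.
    rhs = c - b
    # Step 2: undo the multiplication by dividing both sides by a.
    return rhs / a


# Hypothetical example: (2/3)x + 1/4 = 5/6
x = solve_two_step(Fraction(2, 3), Fraction(1, 4), Fraction(5, 6))
print(x)  # 7/8
```

The fractions are never cleared. Subtracting 1/4 gives 7/12, and dividing by 2/3 (multiplying by 3/2) gives 7/8.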

Solving Two Step Equations: A Summary

This video explains how to solve two step equations. http://mathispower4u.yolasite.com/

From playlist Solving Basic Equations

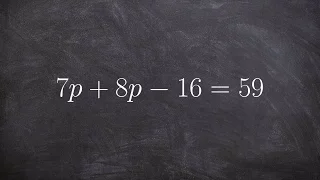

Solving an equation with variable on the same side

👉 Learn how to solve two step linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1. To solve for a variable in a two step linear equation, we first isolate the variable by using inverse operations (addition or subtraction) to move like terms to

From playlist Solve Two Step Equations with Two Variables

How to use steps to solve for two step equations

👉 Learn how to solve two step linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1. To solve for a variable in a two step linear equation, we first isolate the variable by using inverse operations (addition or subtraction) to move like terms to

From playlist Solve Two Step Equations with a Fraction

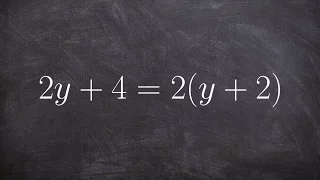

Solving an equation with a variable on both sides infinite solutions

👉 Learn how to solve multi-step equations with parentheses and variables on both sides of the equation. An equation is a statement that two values are equal. A multi-step equation is one that takes several operations, applied in sequence, to solve. To

From playlist Solve Multi-Step Equations......Help!
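The sketch below shows how an equation with the variable on both sides can turn out to have infinitely many solutions. Write it as ax + b = cx + d and move the x-terms to one side. If the x-terms cancel, what remains is either always true (infinitely many solutions) or never true (no solution). The function name and the sample equations are hypothetical illustrations.

```python
from fractions import Fraction


def classify_linear(a, b, c, d):
    """Classify a*x + b = c*x + d after gathering x-terms on one side.

    Returns ("one", x), ("infinite", None), or ("none", None).
    """
    coeff = Fraction(a) - Fraction(c)  # x-terms collected on the left
    const = Fraction(d) - Fraction(b)  # constants collected on the right
    if coeff != 0:
        return "one", const / coeff
    # x cancelled out: the equation reduces to 0 = const
    if const == 0:
        return "infinite", None  # identity such as 0 = 0
    return "none", None  # contradiction such as 0 = 5


# Hypothetical example: 2(x + 3) = 2x + 6, i.e. 2x + 6 = 2x + 6
print(classify_linear(2, 6, 2, 6))  # ('infinite', None)
print(classify_linear(2, 6, 2, 1))  # ('none', None)
print(classify_linear(5, 1, 2, 7))  # ('one', Fraction(2, 1))
```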

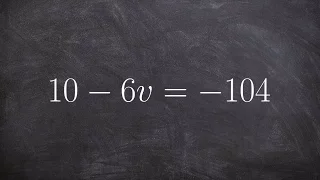

Solving for X in an equation using subtraction and division

👉 Learn how to solve two step linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1. To solve for a variable in a two step linear equation, we first isolate the variable by using inverse operations (addition or subtraction) to move like terms to

From playlist Solve Two Step Equations

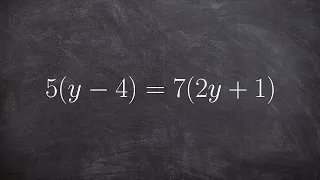

Solving an equation with distributive property on both sides

👉 Learn how to solve multi-step equations with parentheses and variables on both sides of the equation. An equation is a statement that two values are equal. A multi-step equation is one that takes several operations, applied in sequence, to solve. To

From playlist Solve Multi-Step Equations......Help!
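The sketch below shows the distributive property on both sides for an equation of the form a(x + b) = c(x + d). It distributes, collects the x-terms and the constants, and then divides. It assumes a ≠ c, so that exactly one solution exists. The function name and the example 3(x + 2) = 2(x - 1) are hypothetical.

```python
from fractions import Fraction


def solve_distributed(a, b, c, d):
    """Solve a*(x + b) = c*(x + d) for x (assumes a != c, so one solution)."""
    a, b, c, d = map(Fraction, (a, b, c, d))
    # Distribute: a*x + a*b = c*x + c*d
    left_const, right_const = a * b, c * d
    # Collect x-terms on the left and constants on the right: (a - c)*x = c*d - a*b
    coeff = a - c
    if coeff == 0:
        raise ValueError("x cancels out: no unique solution")
    return (right_const - left_const) / coeff


# Hypothetical example: 3(x + 2) = 2(x - 1)  ->  3x + 6 = 2x - 2  ->  x = -8
x = solve_distributed(3, 2, 2, -1)
print(x)  # -8
```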

Reconstruction and estimation in data driven state-space models- Monbet - Workshop 2 - CEB T3 2019

Monbet (U Rennes, FR) / 15.11.2019 Reconstruction and estimation in data driven state-space models. You can join us on social media to follow our news. Facebook: https://www.facebook.com/InstitutHenriPoincare/ Twitter

From playlist 2019 - T3 - The Mathematics of Climate and the Environment

EM Algorithm In Machine Learning | Expectation-Maximization | Machine Learning Tutorial | Edureka

Machine Learning Certification Training: https://www.edureka.co/machine-learning-certification-training This Edureka video on 'EM Algorithm In Machine Learning' covers the EM algorithm, the problem of latent variables in maximum likelihood estimation, and the Gaussian mixture model. Follo

From playlist Machine Learning Algorithms in Python (With Demo) | Edureka
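The sketch below illustrates the EM algorithm for a Gaussian mixture model. It is a minimal one-dimensional version written in plain Python, not the code from the Edureka demo. The data, the starting guesses, and the iteration count are hypothetical. The E-step computes how much each component is "responsible" for each point, and the M-step re-fits each component's mean, variance, and weight using those responsibilities.

```python
import math


def normal_pdf(x, mean, var):
    """Density of a normal distribution with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_gmm_1d(data, means, variances, weights, iterations=50):
    """Fit a 1-D Gaussian mixture by EM, starting from the supplied guesses."""
    means, variances, weights = list(means), list(variances), list(weights)
    k = len(means)
    for _ in range(iterations):
        # E-step: responsibility of component j for point x under current parameters
        resp = []
        for x in data:
            probs = [weights[j] * normal_pdf(x, means[j], variances[j]) for j in range(k)]
            total = sum(probs)
            resp.append([p / total for p in probs])
        # M-step: re-estimate each component from responsibility-weighted data
        for j in range(k):
            nj = sum(r[j] for r in resp)
            means[j] = sum(r[j] * x for r, x in zip(resp, data)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, data)) / nj
            variances[j] = max(var, 1e-6)  # floor guards against a collapsing component
            weights[j] = nj / len(data)
    return means, variances, weights


# Hypothetical data: two clumps, around 1 and around 5
data = [1.0, 1.2, 0.8, 1.1, 0.9, 5.0, 5.2, 4.8, 5.1, 4.9]
means, variances, weights = em_gmm_1d(data, [0.0, 6.0], [1.0, 1.0], [0.5, 0.5])
print([round(m, 3) for m in means])  # [1.0, 5.0]
```

The clumps here are well separated, so a good starting guess matters little. In general, EM only finds a local optimum, and it is common to run it from several starting points.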

Keith Ball: Restricted Invertibility

Keith Ball (University of Warwick) Restricted Invertibility I will briefly discuss the Kadison-Singer problem and then explain a beautiful argument of Bourgain and Tzafriri that I included in an article commissioned in memory of Jean Bourgain.

From playlist Trimester Seminar Series on the Interplay between High-Dimensional Geometry and Probability

Hong Wang: The restriction problem and the polynomial method, Lecture IV

Stein’s restriction conjecture is about estimating functions with Fourier transform supported on a hypersurface, for example, a sphere in $\mathbb{R}^n$. These functions can be decomposed into a sum over wave packets supported on long thin tubes. Guth introduced the polynomial method in restriction th

From playlist Harmonic Analysis and Analytic Number Theory

Machine Learning from First Principles, with PyTorch AutoDiff — Topic 66 of ML Foundations

#MLFoundations #Calculus #MachineLearning In preceding videos in this series, we learned all the most essential differential calculus theory needed for machine learning. In this epic video, it all comes together to enable us to perform machine learning from first principles and fit a line

From playlist Calculus for Machine Learning
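The sketch below illustrates fitting a line from first principles. It uses gradient descent on mean squared error in plain Python. The video relies on PyTorch autodiff to compute the gradients; here they are derived by hand instead, which avoids the dependency. The data, learning rate, and step count are hypothetical.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y ~ m*x + b by gradient descent on mean squared error."""
    m, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Residuals of the current line at each data point
        errs = [(m * x + b) - y for x, y in zip(xs, ys)]
        # Hand-derived gradients of MSE = mean(err^2):
        # dMSE/dm = 2*mean(err*x), dMSE/db = 2*mean(err)
        grad_m = 2 * sum(e * x for e, x in zip(errs, xs)) / n
        grad_b = 2 * sum(errs) / n
        # Step against the gradient
        m -= lr * grad_m
        b -= lr * grad_b
    return m, b


# Hypothetical data lying exactly on y = 2x + 1
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
m, b = fit_line(xs, ys)
print(round(m, 4), round(b, 4))  # 2.0 1.0
```

With an autodiff library, the two `grad_*` lines would be replaced by a backward pass over the loss. The update step stays the same.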

Lecture 12 | Machine Learning (Stanford)

Lecture by Professor Andrew Ng for Machine Learning (CS 229) in the Stanford Computer Science department. Professor Ng discusses unsupervised learning in the context of clustering, Jensen's inequality, mixture of Gaussians, and expectation-maximization. This course provides a broad in

From playlist Lecture Collection | Machine Learning
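For the clustering part of the lecture, here is a sketch of k-means (Lloyd's algorithm), one standard clustering method. It alternates two steps: assign each point to its nearest centroid, then move each centroid to the mean of its points. The lecture's own notation and examples may differ. The points and initial centroids below are hypothetical.

```python
def kmeans(points, centroids, iterations=20):
    """Lloyd's k-means: alternate assignment and centroid-update steps."""
    centroids = [tuple(c) for c in centroids]
    for _ in range(iterations):
        # Assignment step: nearest centroid by squared Euclidean distance
        clusters = [[] for _ in centroids]
        for p in points:
            dists = [sum((pi - ci) ** 2 for pi, ci in zip(p, c)) for c in centroids]
            clusters[dists.index(min(dists))].append(p)
        # Update step: move each centroid to the mean of its assigned points
        new = []
        for c, members in zip(centroids, clusters):
            if members:
                new.append(tuple(sum(coord) / len(members) for coord in zip(*members)))
            else:
                new.append(c)  # keep an empty cluster's centroid where it was
        if new == centroids:
            break  # assignments have stabilised
        centroids = new
    return centroids


# Hypothetical 2-D data: two square clumps
points = [(0, 0), (0, 1), (1, 0), (1, 1), (10, 10), (10, 11), (11, 10), (11, 11)]
print(kmeans(points, [(0, 0), (10, 10)]))  # [(0.5, 0.5), (10.5, 10.5)]
```

Loosely, k-means is a "hard" version of EM for a mixture of Gaussians: each point is assigned entirely to one cluster rather than given fractional responsibilities.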

Christian-Yann Robert: Hill random forests with application to tornado damage insurance

Conference recording during the thematic meeting "MLISTRAL" on September 29, 2022, at the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Guillaume Hennenfent. Find this video and other talks given by worldwide mathematicians on CIRM's Audiovisual Mathem

From playlist Probability and Statistics

Chao Gao: Statistical Optimality and Algorithms for Top-K Ranking - Lecture 2

CIRM virtual conference. In the first presentation, we will consider the top-K ranking problem. The statistical properties of two popular algorithms, MLE and rank centrality (spectral ranking), will be precisely characterized. In terms of both partial and exact recovery, the MLE achieves op

From playlist Virtual Conference
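Below is a rough sketch of rank centrality (spectral ranking). It builds a random walk in which, from item i, the walker moves toward item j in proportion to how often j beat i, normalised by the largest number of distinct opponents any item has. The walk's stationary distribution then serves as the score. This follows the commonly cited construction, but it is our own plain-Python sketch, not the lecture's. It assumes the comparison graph is connected. The outcomes are hypothetical.

```python
def rank_centrality(n, outcomes, iterations=1000):
    """Score items 0..n-1 from (winner, loser) pairs via a comparison random walk."""
    games = [[0] * n for _ in range(n)]  # games[i][j]: comparisons between i and j
    beats = [[0] * n for _ in range(n)]  # beats[i][j]: times j beat i
    for winner, loser in outcomes:
        games[winner][loser] += 1
        games[loser][winner] += 1
        beats[loser][winner] += 1
    # d_max: the largest number of distinct opponents any item has faced
    d_max = max(sum(1 for j in range(n) if games[i][j]) for i in range(n))
    # Transition matrix: from i, step to j in proportion to how often j beat i
    P = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and games[i][j]:
                P[i][j] = beats[i][j] / games[i][j] / d_max
        P[i][i] = 1.0 - sum(P[i])  # self-loop keeps each row summing to 1
    # Power iteration toward the stationary distribution pi = pi @ P
    pi = [1.0 / n] * n
    for _ in range(iterations):
        pi = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
    order = sorted(range(n), key=lambda k: -pi[k])
    return pi, order


# Hypothetical outcomes: 0 usually beats 1 and 2; 1 usually beats 2
outcomes = ([(0, 1)] * 3 + [(1, 0)] + [(1, 2)] * 3 + [(2, 1)]
            + [(0, 2)] * 3 + [(2, 0)])
pi, order = rank_centrality(3, outcomes)
print(order)  # [0, 1, 2]
print([round(p, 3) for p in pi])  # [0.6, 0.257, 0.143]
```

The top-K items are the first K entries of `order`.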

Bayesian Networks 9 - EM Algorithm | Stanford CS221: AI (Autumn 2021)

For more information about Stanford's Artificial Intelligence professional and graduate programs visit: https://stanford.io/ai Associate Professor Percy Liang Associate Professor of Computer Science and Statistics (courtesy) https://profiles.stanford.edu/percy-liang Assistant Professor

From playlist Stanford CS221: Artificial Intelligence: Principles and Techniques | Autumn 2021

Estimating the number of atoms in the observable universe

This video looks at a simple method for estimating the number of atoms in the observable universe. The first step involves estimating the number of atoms in a typical star. The second step involves estimating the number of stars in a typical galaxy. The third step involves estimating the n

From playlist Pen and Paper
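The three-step estimate is easy to reproduce as plain arithmetic. All inputs below are our own assumptions, not figures from the video. A "typical" star is taken to have the Sun's mass and to be made entirely of hydrogen. The counts of stars per galaxy and of galaxies are rough order-of-magnitude guesses. The result is therefore only meaningful to within an order of magnitude or so.

```python
import math

# Assumed inputs (rough order-of-magnitude values, not taken from the video)
SUN_MASS_KG = 1.989e30       # mass of a "typical" star, taken to be the Sun
HYDROGEN_MASS_KG = 1.67e-27  # approximate mass of one hydrogen atom
STARS_PER_GALAXY = 1e11      # assumed stars in a typical galaxy
GALAXIES = 1e11              # assumed galaxies in the observable universe

# Step 1: atoms in a typical star, treating it as pure hydrogen
atoms_per_star = SUN_MASS_KG / HYDROGEN_MASS_KG
# Step 2: atoms in a typical galaxy
atoms_per_galaxy = atoms_per_star * STARS_PER_GALAXY
# Step 3: atoms in the observable universe
total_atoms = atoms_per_galaxy * GALAXIES

print(f"~10^{math.log10(total_atoms):.0f} atoms")  # ~10^79 atoms
```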

Solving for x in an equation using addition and division

👉 Learn how to solve two step linear equations. A linear equation is an equation whose highest exponent on its variable(s) is 1. To solve for a variable in a two step linear equation, we first isolate the variable by using inverse operations (addition or subtraction) to move like terms to

From playlist Solve Two Step Equations