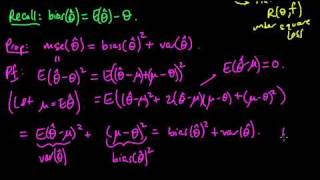

(ML 11.5) Bias-Variance decomposition

Explanation and proof of the bias-variance decomposition (a.k.a. bias-variance trade-off) for estimators.

From playlist Machine Learning
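For reference, one standard statement of the decomposition for an estimator $\hat\theta$ of a fixed parameter $\theta$, in mean-squared-error form (the notation is ours and may differ from the video's). Add and subtract $\mathbb{E}[\hat\theta]$, expand the square, and note that the cross term vanishes because $\mathbb{E}\big[\hat\theta-\mathbb{E}[\hat\theta]\big]=0$:

```latex
\begin{aligned}
\mathbb{E}\big[(\hat\theta-\theta)^2\big]
&= \mathbb{E}\big[(\hat\theta-\mathbb{E}[\hat\theta] + \mathbb{E}[\hat\theta]-\theta)^2\big] \\
&= \mathbb{E}\big[(\hat\theta-\mathbb{E}[\hat\theta])^2\big]
 + 2\,(\mathbb{E}[\hat\theta]-\theta)\,\mathbb{E}\big[\hat\theta-\mathbb{E}[\hat\theta]\big]
 + (\mathbb{E}[\hat\theta]-\theta)^2 \\
&= \operatorname{Var}(\hat\theta) + \operatorname{Bias}(\hat\theta)^2 .
\end{aligned}
```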

The Spectral Proper Orthogonal Decomposition

I made this video to popularize the Spectral POD technique. It is an incredibly powerful tool for analyzing data coming from a multitude of sensors. It elevates the Fourier transform to a whole new level; hence I call it "The Mother of All Fourier Transforms."

From playlist Summer of Math Exposition 2 videos

QR Decomposition of a matrix and applications to least squares

Check out my Orthogonality playlist: https://www.youtube.com/watch?v=Z8ceNvUgI4Q&list=PLJb1qAQIrmmAreTtzhE6MuJhAhwYYo_a9 Subscribe to my channel: https://www.youtube.com/channel/UCoOjTxz-u5zU0W38zMkQIFw

From playlist Orthogonality
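A minimal plain-Python sketch of the least-squares application, assuming a tall matrix $A$ with full column rank: factor $A = QR$ (here by modified Gram-Schmidt; library routines typically use Householder reflections instead), then solve the triangular system $Rx = Q^T b$. The function names `qr_mgs` and `least_squares_qr` are our own, not from the video.

```python
import math


def qr_mgs(A):
    """Thin QR factorization of an m x n matrix A (list of rows) by
    modified Gram-Schmidt. Assumes m >= n and full column rank.
    Returns Q as a list of n orthonormal columns and R as an
    n x n upper-triangular matrix (list of rows)."""
    m, n = len(A), len(A[0])
    cols = [[float(A[i][j]) for i in range(m)] for j in range(n)]
    Q = []
    R = [[0.0] * n for _ in range(n)]
    for j in range(n):
        R[j][j] = math.sqrt(sum(v * v for v in cols[j]))
        q = [v / R[j][j] for v in cols[j]]
        Q.append(q)
        # Remove the q-component from every remaining column.
        for k in range(j + 1, n):
            R[j][k] = sum(qi * vi for qi, vi in zip(q, cols[k]))
            cols[k] = [vi - R[j][k] * qi for qi, vi in zip(q, cols[k])]
    return Q, R


def least_squares_qr(A, b):
    """Minimize ||A x - b||_2 via A = QR, then solve R x = Q^T b."""
    Q, R = qr_mgs(A)
    n = len(R)
    y = [sum(qi * bi for qi, bi in zip(Q[j], b)) for j in range(n)]  # y = Q^T b
    x = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution on upper-triangular R
        x[i] = (y[i] - sum(R[i][k] * x[k] for k in range(i + 1, n))) / R[i][i]
    return x


# Fit a line c0 + c1*t to the points (0, 0), (1, 1), (2, 1).
A = [[1, 0], [1, 1], [1, 2]]
b = [0, 1, 1]
print(least_squares_qr(A, b))  # approximately [1/6, 1/2]
```

Working with $R$ rather than forming the normal equations $A^T A x = A^T b$ avoids squaring the condition number of $A$.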

Tensor Decomposition Definitions of Neural Net Architectures

This paper describes a complexity theory of neural networks defined by tensor decompositions, with a review of simplifications of the tensor decomposition for simpler neural network architectures. The concept of Z-completeness for a network N is defined by the existence of a tensor decomposi

From playlist Wolfram Technology Conference 2021

Madeleine Udell: "Low Rank Tucker Approximation of a Tensor from Streaming Data"

Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021, Workshop III: Mathematical Foundations and Algorithms for Tensor Computations. "Low Rank Tucker Approximation of a Tensor from Streaming Data". Madeleine Udell - Cornell University, Computational and Mathematica

From playlist Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021

Fast and optimal low-rank tensor regression via importance - Garvesh Raskutti, UW-Madison

Recent years have witnessed an increased cross-fertilisation between the fields of statistics and computer science. In the era of Big Data, statisticians are increasingly facing the question of guaranteeing prescribed levels of inferential accuracy within a certain time budget. On the other

From playlist Statistics and computation

Linear Algebra 13e: The LU Decomposition

https://bit.ly/PavelPatreon https://lem.ma/LA - Linear Algebra on Lemma http://bit.ly/ITCYTNew - Dr. Grinfeld's Tensor Calculus textbook https://lem.ma/prep - Complete SAT Math Prep

From playlist Part 1 Linear Algebra: An In-Depth Introduction with a Focus on Applications

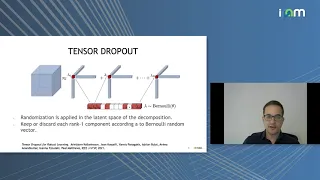

Jean Kossaifi: "Efficient Tensor Representation for Deep Learning with TensorLy and PyTorch"

Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021, Workshop IV: Efficient Tensor Representations for Learning and Computational Complexity. "Efficient Tensor Representation for Deep Learning with TensorLy and PyTorch". Jean Kossaifi - Nvidia Corporation. Abstrac

From playlist Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021

On Expressiveness and Optimization in Deep Learning - Nadav Cohen

Members' Seminar. Topic: On Expressiveness and Optimization in Deep Learning. Speaker: Nadav Cohen. Affiliation: Member, School of Mathematics. Date: April 2, 2018. For more videos, please visit http://video.ias.edu

From playlist Mathematics

Solve a System of Linear Equations Using LU Decomposition

This video explains how to use LU Decomposition to solve a system of linear equations. Site: http://mathispower4u.com Blog: http://mathispower4u.wordpress.com

From playlist Matrix Equations
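A minimal plain-Python sketch of the procedure: factor $A = LU$ (Doolittle form, with a unit diagonal on $L$), solve $Ly = b$ by forward substitution, then $Ux = y$ by back substitution. It assumes no row exchanges are needed (every pivot is nonzero); practical solvers add partial pivoting, i.e. $PA = LU$. The function names `lu_decompose` and `lu_solve` are illustrative, not from the video.

```python
def lu_decompose(A):
    """Doolittle LU factorization without pivoting: A = L U, where L is
    unit lower-triangular and U is upper-triangular. Assumes every pivot
    U[i][i] turns out nonzero (no row swaps required)."""
    n = len(A)
    L = [[float(i == j) for j in range(n)] for i in range(n)]
    U = [[0.0] * n for _ in range(n)]
    for i in range(n):
        # Row i of U.
        for k in range(i, n):
            U[i][k] = A[i][k] - sum(L[i][j] * U[j][k] for j in range(i))
        # Column i of L, below the diagonal.
        for k in range(i + 1, n):
            L[k][i] = (A[k][i] - sum(L[k][j] * U[j][i] for j in range(i))) / U[i][i]
    return L, U


def lu_solve(L, U, b):
    """Solve L U x = b given the factors from lu_decompose."""
    n = len(b)
    # Forward substitution: L y = b (L has ones on its diagonal).
    y = [0.0] * n
    for i in range(n):
        y[i] = b[i] - sum(L[i][j] * y[j] for j in range(i))
    # Back substitution: U x = y.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (y[i] - sum(U[i][j] * x[j] for j in range(i + 1, n))) / U[i][i]
    return x


A = [[2, 1, 1], [4, -6, 0], [-2, 7, 2]]
b = [5, -2, 9]
L, U = lu_decompose(A)
print(lu_solve(L, U, b))  # [1.0, 1.0, 2.0]
```

The payoff of factoring once is that further right-hand sides `b` reuse the same `L` and `U`, costing only the two triangular solves.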

How to Set Up the Partial Fraction Decomposition

Please subscribe here, thank you! https://goo.gl/JQ8Nys How to set up a partial fraction decomposition. This video covers only the setup; see my other videos for fully solved problems.

From playlist Partial Fraction Decomposition
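As an illustration of the setup step alone (the factors below are our own choice, not taken from the video): for a proper rational function, each distinct linear factor gets one constant numerator, a repeated linear factor gets one term per power, and an irreducible quadratic factor gets a linear numerator. Here we assume $a \neq b$, $c > 0$ (so $x^2 + c$ has no real roots), and $\deg P < 5$:

```latex
\frac{P(x)}{(x-a)(x-b)^2(x^2+c)}
= \frac{A}{x-a} + \frac{B}{x-b} + \frac{C}{(x-b)^2} + \frac{Dx+E}{x^2+c}
```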

Lars Grasedyck: "Multigrid in Hierarchical Low Rank Tensor Formats"

Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021, Workshop I: Tensor Methods and their Applications in the Physical and Data Sciences. "Multigrid in Hierarchical Low Rank Tensor Formats". Lars Grasedyck - RWTH Aachen University. Abstract: In this presentation w

From playlist Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021

Anthony Nouy: Adaptive low-rank approximations for stochastic and parametric equations [...]

Find this video and other talks given by mathematicians from around the world on CIRM's Audiovisual Mathematics Library: http://library.cirm-math.fr. Discover all its features:
- Chapter markers and keywords to watch the parts of your choice in the video
- Videos enriched with abstracts, b

From playlist Numerical Analysis and Scientific Computing

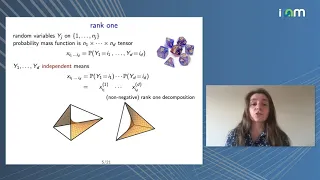

Anna Seigal: "Tensors in Statistics and Data Analysis"

Watch part 1/2 here: https://youtu.be/9unKtBoO5Hw

Tensor Methods and Emerging Applications to the Physical and Data Sciences Tutorials 2021. "Tensors in Statistics and Data Analysis". Anna Seigal - University of Oxford. Abstract: I will give an overview of tensors as they arise in settings

From playlist Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021

Linear Algebra 18a: Introduction to the Eigenvalue Decomposition

https://bit.ly/PavelPatreon https://lem.ma/LA - Linear Algebra on Lemma http://bit.ly/ITCYTNew - Dr. Grinfeld's Tensor Calculus textbook https://lem.ma/prep - Complete SAT Math Prep

From playlist Part 3 Linear Algebra: Linear Transformations

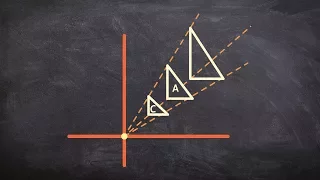

👉 Learn about dilations. A dilation transforms a shape by a scale factor, producing an image that is similar to the original shape but different in size. A dilation that creates a larger image is called an enlargement or a stretch, while a dilation tha

From playlist Transformations
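A minimal sketch of a dilation in the coordinate plane, assuming a positive scale factor $k$ and a chosen center of dilation: each point $P$ maps to $\text{center} + k\,(P - \text{center})$, so every distance in the figure is multiplied by $k$ and the image is similar to the original. The function name `dilate` is our own.

```python
import math


def dilate(points, k, center=(0.0, 0.0)):
    """Dilate 2-D points by scale factor k about `center`.
    This sketch assumes k > 0; each point P maps to center + k * (P - center)."""
    if k <= 0:
        raise ValueError("this sketch assumes a positive scale factor k")
    cx, cy = center
    return [(cx + k * (x - cx), cy + k * (y - cy)) for x, y in points]


triangle = [(0, 0), (2, 0), (0, 1)]
image = dilate(triangle, 3)  # enlargement about the origin
print(image)

# Every side length is scaled by the same factor k = 3.
for i in range(3):
    p, q = triangle[i], triangle[(i + 1) % 3]
    p2, q2 = image[i], image[(i + 1) % 3]
    print(math.dist(p2, q2) / math.dist(p, q))
```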

Anh-Huy Phan: "Chain Tensor Network: Instability and how to deal with it"

Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021, Workshop III: Mathematical Foundations and Algorithms for Tensor Computations. "Chain Tensor Network: Instability and how to deal with it". Anh-Huy Phan - Skolkovo Institute of Science and Technology. Abstract:

From playlist Tensor Methods and Emerging Applications to the Physical and Data Sciences 2021

Ex 2: Partial Fraction Decomposition (Linear Factors)

This video explains how to perform partial fraction decomposition when the denominator has 2 distinct linear factors. Site: http://mathispower4u.com Blog: http://mathispower4u.wordpress.com

From playlist Performing Partial Fraction Decomposition
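A worked example with two distinct linear factors (our own example, not necessarily the one in the video). Write one constant over each factor, clear denominators, then substitute each root to isolate one unknown at a time:

```latex
\begin{aligned}
\frac{5x-1}{(x-1)(x+1)} &= \frac{A}{x-1} + \frac{B}{x+1}
\quad\Longrightarrow\quad 5x-1 = A(x+1) + B(x-1), \\
x = 1:&\quad 4 = 2A \;\Rightarrow\; A = 2, \\
x = -1:&\quad -6 = -2B \;\Rightarrow\; B = 3, \\
\frac{5x-1}{(x-1)(x+1)} &= \frac{2}{x-1} + \frac{3}{x+1}.
\end{aligned}
```

Recombining, $2(x+1) + 3(x-1) = 5x - 1$, which confirms the result.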

Integration Using Partial Fraction Decomposition Part 1

This video shows how partial fraction decomposition can be used to simplify an integral. This video covers only linear factors. Part 1 of 2. Site: http://mathispower4u.com

From playlist Integration Using Partial Fractions
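A short worked example with linear factors (our own choice of integrand). From $1 = A(x+1) + B(x-1)$, setting $x = 1$ gives $A = \tfrac12$ and setting $x = -1$ gives $B = -\tfrac12$; each resulting term then integrates to a logarithm:

```latex
\begin{aligned}
\int \frac{dx}{x^2-1}
&= \int \left( \frac{1/2}{x-1} - \frac{1/2}{x+1} \right) dx \\
&= \tfrac12 \ln|x-1| - \tfrac12 \ln|x+1| + C
 = \tfrac12 \ln\left|\frac{x-1}{x+1}\right| + C .
\end{aligned}
```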

Anthony Nouy: Approximation and learning with tree tensor networks - Lecture 1

Recorded during the meeting "Data Assimilation and Model Reduction in High Dimensional Problems" on July 21, 2021, by the Centre International de Rencontres Mathématiques (Marseille, France). Filmmaker: Luca Récanzone. A kinetic description of a plasma in external and self-consistent fiel

From playlist Numerical Analysis and Scientific Computing