Recordings of the corresponding course on Coursera. If you are interested in exercises and/or a certificate, have a look here: https://www.coursera.org/learn/pca-machine-learning

From playlist PCA

Sparse matrices in sparse analysis - Anna Gilbert

Members' Seminar. Topic: Sparse matrices in sparse analysis. Speaker: Anna Gilbert. Affiliation: University of Michigan; Member, School of Mathematics. Date: October 28, 2019. For more videos, please visit http://video.ias.edu

From playlist Mathematics

PCA (video 7): PCA in High Dimensions

Recordings of the corresponding course on Coursera. If you are interested in exercises and/or a certificate, have a look here: https://www.coursera.org/learn/pca-machine-learning

From playlist PCA

Robust Modal Decompositions for Fluid Flows

This research abstract by Isabel Scherl describes how to use robust principal component analysis (RPCA) for robust modal decompositions of fluid flows that have large outliers or corruption. https://doi.org/10.1103/PhysRevFluids.5.054401 https://arxiv.org/abs/1905.07062 Robust principa

From playlist Research Abstracts from Brunton Lab

Machine Learning for Signal Processing: Data Compression and Denoising

In this meetup, we will understand how to use machine learning tools for signal processing. In particular: data compression and noise removal. To do so, we will discuss Principal Component Analysis (PCA) and explore how linear algebra can be used for these and other applications. Presente

From playlist Fundamentals of Machine Learning

Computational Linear Algebra 4: Randomized SVD & Robust PCA

Course materials available here: https://github.com/fastai/numerical-linear-algebra We use randomized SVD and robust PCA for background removal of a surveillance video. Implemented in Python and Scikit-Learn. This material is reviewed in the next video. Course overview blog post: http://www.fast

From playlist Computational Linear Algebra

Computational Linear Algebra 5: Robust PCA & LU Factorization

Course materials available here: https://github.com/fastai/numerical-linear-algebra We review randomized SVD & robust PCA (for background removal on a surveillance video), and introduce Gaussian Elimination & LU factorization. Topics covered here reviewed in next video.

From playlist Computational Linear Algebra

Santanu Dey: "Solving SDPs by using sparse PCA"

Deep Learning and Combinatorial Optimization 2021 "Solving SDPs by using sparse PCA" Santanu Dey - Georgia Institute of Technology, School of Industrial and Systems Engineering Abstract: Quadratically-constrained quadratic programs (QCQPs) are optimization models whose remarkable express

From playlist Deep Learning and Combinatorial Optimization 2021

PCA 3: direction of greatest variance

Full lecture: http://bit.ly/PCA-alg Principal Component Analysis (PCA) reduces the dimensionality of the data by selecting directions along which our data has the largest variance. Picking the direction of greatest variance preserves the distances in the original space (far-away points i

From playlist Principal Component Analysis
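
The idea in the blurb above, choosing the direction along which the data has the largest variance, can be sketched in a few lines of NumPy. This is only an illustrative sketch and is not taken from the lecture: the function name `first_principal_direction` and the toy data are assumptions made for the example, and the direction is found here through an eigendecomposition of the sample covariance matrix.

```python
import numpy as np

def first_principal_direction(X):
    """Return the unit direction of greatest variance and that variance.

    Hypothetical helper for illustration; X has one sample per row.
    """
    Xc = X - X.mean(axis=0)                  # center each feature
    cov = Xc.T @ Xc / (len(X) - 1)           # sample covariance matrix
    eigvals, eigvecs = np.linalg.eigh(cov)   # eigenvalues in ascending order
    return eigvecs[:, -1], eigvals[-1]       # last column = largest variance

rng = np.random.default_rng(0)
# Assumed toy data: points stretched along the line y = x, plus small noise
t = rng.normal(size=200)
X = np.column_stack([t, t]) + 0.05 * rng.normal(size=(200, 2))

direction, variance = first_principal_direction(X)
# Reduce from 2-D to 1-D by projecting the centered data onto the direction
projected = (X - X.mean(axis=0)) @ direction
```

With this toy data the recovered direction should lie close to the diagonal, and the variance of `projected` equals the largest eigenvalue of the covariance matrix.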

Robust Principal Component Analysis (RPCA)

Robust statistics is essential for handling data with corruption or missing entries. This robust variant of principal component analysis (PCA) is now a workhorse algorithm in several fields, including fluid mechanics, the Netflix prize, and image processing. Book Website: http://databoo

From playlist Data-Driven Science and Engineering

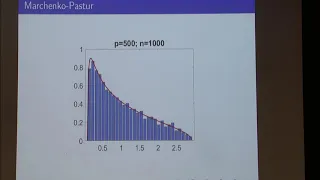

20. Principal Component Analysis (cont.)

MIT 18.650 Statistics for Applications, Fall 2016 View the complete course: http://ocw.mit.edu/18-650F16 Instructor: Philippe Rigollet In this lecture, Prof. Rigollet talked about principal component analysis: main principle, algorithm, example, and beyond practice. License: Creative Com

From playlist MIT 18.650 Statistics for Applications, Fall 2016

Principal Component Analysis (PCA)

Principal component analysis (PCA) is a workhorse algorithm in statistics, where dominant correlation patterns are extracted from high-dimensional data. Book PDF: http://databookuw.com/databook.pdf Book Website: http://databookuw.com These lectures follow Chapter 1 from: "Data-Driven S

From playlist Data-Driven Science and Engineering

Yann LeCun: "Deep Learning, Graphical Models, Energy-Based Models, Structured Prediction, Pt. 2"

Graduate Summer School 2012: Deep Learning, Feature Learning "Deep Learning, Graphical Models, Energy-Based Models, Structured Prediction, Pt. 2" Yann LeCun, New York University Institute for Pure and Applied Mathematics, UCLA July 9, 2012 For more information: https://www.ipam.ucla.edu

From playlist GSS2012: Deep Learning, Feature Learning

ML Tutorial: Probabilistic Dimensionality Reduction, Part 2/2 (Neil Lawrence)

Machine Learning Tutorial at Imperial College: Probabilistic Dimensionality Reduction, Part 2/2 Neil Lawrence (University of Sheffield) October 21, 2015

From playlist Machine Learning Tutorials

Finding structure in high dimensional data, methods and fundamental limitations - Boaz Nadler

Members' Seminar. Topic: Finding structure in high dimensional data, methods and fundamental limitations. Speaker: Boaz Nadler. Affiliation: Weizmann Institute of Science; Member, School of Mathematics. Date: October 14, 2019. For more videos, please visit http://video.ias.edu

From playlist Mathematics

PCA algorithm step by step with python code

[https://github.com/minsuk-heo/python_tutorial/blob/master/data_science/pca/PCA.ipynb] Explains PCA (principal component analysis) step by step and demonstrates a Python implementation. All of my machine learning YouTube videos: https://www.youtube.com/playlist?list=PLVNY1HnUlO26x597OgAN8T

From playlist Machine Learning