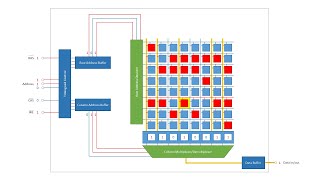

Dynamic Random Access Memory (DRAM). Part 3: Binary Decoders

This is the third in a series of computer science videos about the fundamental principles of Dynamic Random Access Memory (DRAM) and the essential concepts of DRAM operation. This video covers the role of the row address decoder and the workings of generic binary decoders. It also expl

From playlist Random Access Memory
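A row address decoder is a binary decoder: an n-bit address activates exactly one of 2^n output lines (one DRAM row). The minimal Python sketch below shows a behavioral view and a gate-level view, in which each output line is an AND of the input bits or their inverses. It assumes address bits are listed most significant first. `binary_decode` and `binary_decode_gates` are hypothetical names, not code from the video.

```python
def binary_decode(address, n_bits):
    """Return the 2**n_bits output lines of an n-to-2^n decoder (one-hot)."""
    if not 0 <= address < 2 ** n_bits:
        raise ValueError("address out of range for decoder width")
    # Exactly one output line goes high: the one whose index equals the input.
    return [1 if line == address else 0 for line in range(2 ** n_bits)]


def binary_decode_gates(bits):
    """Gate-level view: each output is an AND of every input bit or its NOT."""
    n = len(bits)
    outputs = []
    for line in range(2 ** n):
        value = 1
        for i, bit in enumerate(bits):  # bits[0] is the most significant bit
            wanted = (line >> (n - 1 - i)) & 1
            # AND in the bit itself if this line wants a 1, else its inverse.
            value &= bit if wanted else 1 - bit
        outputs.append(value)
    return outputs


# A 3-to-8 decoder selecting row 5 (binary 101):
print(binary_decode(5, 3))             # [0, 0, 0, 0, 0, 1, 0, 0]
print(binary_decode_gates([1, 0, 1]))  # [0, 0, 0, 0, 0, 1, 0, 0]
```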

Linear Algebra for Computer Scientists. 9. Decomposing Vectors

This computer science video is one of a series on linear algebra for computer scientists. In this video you will learn how to express a given vector as a linear combination of a set of given basis vectors. In other words, you will learn how to determine the coefficients that were used to

From playlist Linear Algebra for Computer Scientists
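Finding the coefficients amounts to solving a linear system whose matrix has the basis vectors as its columns. Below is a minimal pure-Python sketch using exact fractions and Gauss-Jordan elimination. It assumes a square basis (n vectors in n dimensions). `decompose` is a hypothetical helper, not the method shown in the video.

```python
from fractions import Fraction


def decompose(vector, basis):
    """Find coefficients c such that sum(c[j] * basis[j]) == vector.

    Solves B c = v by Gauss-Jordan elimination, where the columns of B
    are the basis vectors.
    """
    n = len(basis)
    # Augmented matrix [B | v]: row i holds the i-th component of every basis vector.
    rows = [[Fraction(basis[j][i]) for j in range(n)] + [Fraction(vector[i])]
            for i in range(n)]
    for col in range(n):
        # Pick a row with a non-zero pivot in this column.
        pivot = next((r for r in range(col, n) if rows[r][col] != 0), None)
        if pivot is None:
            raise ValueError("basis vectors are linearly dependent")
        rows[col], rows[pivot] = rows[pivot], rows[col]
        # Normalise the pivot row, then clear this column in every other row.
        p = rows[col][col]
        rows[col] = [x / p for x in rows[col]]
        for r in range(n):
            if r != col and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [a - factor * b for a, b in zip(rows[r], rows[col])]
    return [row[-1] for row in rows]


# Express v = (3, 5) in the basis b1 = (1, 1), b2 = (1, -1):
coeffs = decompose([3, 5], [[1, 1], [1, -1]])
print(coeffs)  # [Fraction(4, 1), Fraction(-1, 1)], i.e. v = 4*b1 - 1*b2
```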

What is the alternate in sign sequence

👉 Learn about sequences. A sequence is a list of numbers/values exhibiting a defined pattern. A number/value in a sequence is called a term of the sequence. There are many types of sequences, including arithmetic and geometric sequences. An arithmetic sequence is a sequence in which

From playlist Sequences
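These definitions translate directly into term formulas. The minimal Python sketch below, which assumes 1-indexed terms, covers an arithmetic sequence, a sign-alternating version of any sequence, and a check for whether a sequence alternates in sign. The helper names are hypothetical.

```python
def arithmetic(a1, d, n):
    """First n terms of a_k = a1 + (k - 1) * d: a constant difference d."""
    return [a1 + (k - 1) * d for k in range(1, n + 1)]


def alternating(terms):
    """Multiply term k (1-indexed) by (-1)**(k - 1): signs +, -, +, -, ..."""
    return [t * (-1) ** k for k, t in enumerate(terms)]  # enumerate starts at 0


def alternates_in_sign(seq):
    """True if every pair of consecutive terms has opposite signs (no zeros)."""
    return all(a * b < 0 for a, b in zip(seq, seq[1:]))


print(arithmetic(2, 3, 5))                   # [2, 5, 8, 11, 14]
print(alternating(arithmetic(1, 1, 5)))      # [1, -2, 3, -4, 5]
print(alternates_in_sign([1, -2, 3, -4, 5]))  # True
```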

List-Decoding Multiplicity Codes - Swastik Kopparty

Swastik Kopparty, Rutgers University, April 10, 2012. We study the list-decodability of multiplicity codes. These codes, which are based on evaluations of high-degree polynomials and their derivatives, have rate approaching 1 while simultaneously allowing for sublinear-time error-correction.

From playlist Mathematics
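To illustrate what "evaluations of polynomials and their derivatives" means, here is a minimal Python sketch of the simplest case only: a univariate code of multiplicity 2 over a prime field F_p, where each codeword symbol is the pair (f(a), f'(a)). The talk concerns the general codes and their list decoding, which this sketch does not attempt. The helper names are hypothetical. A nonzero polynomial of degree d has at most d/2 points where f and f' both vanish, so polynomials of degree below 2p still get distinct codewords, even though there are only p evaluation points.

```python
def poly_eval(coeffs, x, p):
    """Evaluate sum(coeffs[i] * x**i) mod p with Horner's rule."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % p
    return acc


def derivative(coeffs, p):
    """Formal derivative: d/dx sum(c_i x^i) = sum(i * c_i x^(i-1)), mod p."""
    return [(i * c) % p for i, c in enumerate(coeffs)][1:]


def multiplicity2_encode(coeffs, p):
    """Order-2 univariate multiplicity encoding over the prime field F_p.

    Coefficients are listed from low to high degree; the codeword has one
    symbol (f(a), f'(a)) per point a in F_p.
    """
    df = derivative(coeffs, p)
    return [(poly_eval(coeffs, a, p), poly_eval(df, a, p)) for a in range(p)]


# f(x) = 1 + 2x + x^3 over F_5:
print(multiplicity2_encode([1, 2, 0, 1], 5))
# [(1, 2), (4, 0), (3, 4), (4, 4), (3, 0)]

print(multiplicity2_encode([0, 0, 0, 0, 0, 1], 5))  # x^5
# [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0)]
print(multiplicity2_encode([0, 1], 5))              # x
# [(0, 1), (1, 1), (2, 1), (3, 1), (4, 1)]
```

The last two lines show why the derivative values matter. The polynomials x^5 and x coincide as functions on F_5, yet their codewords differ because their derivatives differ.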

Blockwise Parallel Decoding for Deep Autoregressive Models

https://arxiv.org/abs/1811.03115 Abstract: Deep autoregressive sequence-to-sequence models have demonstrated impressive performance across a wide variety of tasks in recent years. While common architecture classes such as recurrent, convolutional, and self-attention networks make differen

From playlist All Videos

Sequential Spectra- PART 2: Preliminary Definitions

We cover one definition of sequential spectra and establish the smash tensoring and powering operations, as well as some adjunctions. Credits: nLab: https://ncatlab.org/nlab/show/Introdu... Animation library: https://github.com/3b1b/manim Music by: "KaizanBlu"

From playlist Sequential Spectra
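For reference, one common way to state the definition follows the conventions of nLab, which the credits cite. The video's exact choices may differ, for example the side on which the circle is smashed.

```latex
% Sequential spectrum of pointed topological spaces (nLab-style conventions)
A sequential spectrum $X$ is a sequence of pointed spaces $X_0, X_1, X_2, \dots$
together with structure maps
\[
  \sigma_n \colon S^1 \wedge X_n \longrightarrow X_{n+1}, \qquad n \ge 0 .
\]
For a pointed space $K$, smash tensoring and powering act levelwise:
\[
  (K \wedge X)_n = K \wedge X_n, \qquad
  \mathrm{Maps}_*(K, X)_n = \mathrm{Maps}_*(K, X_n),
\]
and they are related by the adjunction
\[
  \mathrm{Hom}(K \wedge X,\, Y) \;\cong\; \mathrm{Hom}\bigl(X,\, \mathrm{Maps}_*(K, Y)\bigr).
\]
```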

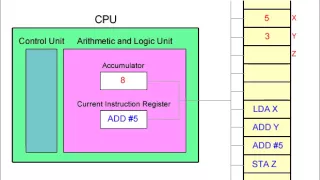

Fetch Decode Execute Cycle and the Accumulator

This (silent) video illustrates the fetch decode execute cycle. A simplified view of the CPU focusses on the role of the accumulator register when a program runs. For simplicity, the machine code commands being executed are represented by assembly language code. This assembly language co

From playlist Computer Hardware and Architecture
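The cycle can be made concrete with a toy accumulator machine. This Python sketch assumes a made-up instruction set (LOAD, ADD, SUB, STORE, HALT, each taking one memory-address operand). For brevity, it keeps the program and the data in separate structures. It is not the instruction set used in the video.

```python
def run(program, memory):
    """Simulate a toy accumulator CPU.

    program: list of (opcode, operand) pairs; memory: dict of address -> value.
    The opcodes are hypothetical, chosen only for illustration.
    """
    acc = 0  # the accumulator register
    pc = 0   # program counter: index of the next instruction
    while True:
        # FETCH: read the instruction at the program counter, then advance it.
        opcode, operand = program[pc]
        pc += 1
        # DECODE and EXECUTE: dispatch on the opcode.
        if opcode == "LOAD":      # acc <- memory[operand]
            acc = memory[operand]
        elif opcode == "ADD":     # acc <- acc + memory[operand]
            acc += memory[operand]
        elif opcode == "SUB":     # acc <- acc - memory[operand]
            acc -= memory[operand]
        elif opcode == "STORE":   # memory[operand] <- acc
            memory[operand] = acc
        elif opcode == "HALT":
            return acc, memory
        else:
            raise ValueError(f"unknown opcode {opcode!r}")


# Compute memory[12] = memory[10] + memory[11]
mem = {10: 7, 11: 5}
prog = [("LOAD", 10), ("ADD", 11), ("STORE", 12), ("HALT", None)]
acc, mem = run(prog, mem)
print(acc, mem[12])  # 12 12
```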

Dynamic Random Access Memory (DRAM). Part 2: Read and Write Cycles

This is the second in a series of computer science videos about the fundamental principles of Dynamic Random Access Memory (DRAM) and the essential concepts of DRAM operation. This video covers the stages of the read cycle and the write cycle, including memory address multiplexing as a means

From playlist Random Access Memory
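With address multiplexing, the row and column parts of an address share the same pins. The row address is latched first with RAS (row address strobe), and the column address is latched second with CAS (column address strobe). The minimal Python sketch below shows the address split. It assumes the high-order bits select the row, although real chips may map bits differently. `multiplex_address` is a hypothetical helper.

```python
def multiplex_address(address, row_bits, col_bits):
    """Split a cell address into the row and column halves sent over shared pins.

    The row half is driven first (latched on RAS), then the column half
    (latched on CAS), so only max(row_bits, col_bits) address pins are needed.
    """
    if not 0 <= address < 1 << (row_bits + col_bits):
        raise ValueError("address out of range")
    row = address >> col_bits              # assumed: high-order bits select the row
    col = address & ((1 << col_bits) - 1)  # low-order bits select the column
    return row, col


# A 1024 x 1024 cell array: 20 address bits sent over 10 pins in two steps.
row, col = multiplex_address(0b1100000001_0000000011, 10, 10)
print(row, col)  # 769 3
```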

What is the definition of a geometric sequence

👉 Learn about sequences. A sequence is a list of numbers/values exhibiting a defined pattern. A number/value in a sequence is called a term of the sequence. There are many types of sequences, including arithmetic and geometric sequences. An arithmetic sequence is a sequence in which

From playlist Sequences
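In a geometric sequence, each term after the first is the previous term multiplied by a fixed common ratio r, so a_n = a_1 r^(n-1). The minimal Python sketch below checks that definition. It uses `fractions.Fraction` so the ratio test is exact. `is_geometric` and `nth_term` are hypothetical helper names.

```python
from fractions import Fraction


def is_geometric(seq):
    """True if each term after the first equals the previous term times one fixed r."""
    if len(seq) < 2:
        return True  # too short to contradict any ratio
    if seq[0] == 0:
        # 0 * r == 0 for every r, so a sequence starting at 0 must be all zeros.
        return all(t == 0 for t in seq)
    r = Fraction(seq[1]) / Fraction(seq[0])
    return all(Fraction(b) == Fraction(a) * r for a, b in zip(seq, seq[1:]))


def nth_term(a1, r, n):
    """a_n = a1 * r**(n - 1)."""
    return a1 * r ** (n - 1)


print(is_geometric([3, 6, 12, 24]))  # True  (r = 2)
print(is_geometric([81, 27, 9, 3]))  # True  (r = 1/3)
print(is_geometric([2, 4, 6, 8]))    # False (arithmetic, not geometric)
print(nth_term(3, 2, 6))             # 96
```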

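An in-depth look at the Transformer, which drew wide attention through the paper "Attention is all you need". The various techniques used in the Transformer (positional encoding, multi-head attention, self-attention, label smoothing, residual connections) are also explained with easy examples. All the machine learning videos I have made can easily be found in the playlist below. https://www.youtube.com/playlist?list=PLVNY1HnUlO241gILgQloWAs0xrrkqQfKe

From playlist 머신러닝 (Machine Learning)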
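Positional encoding, one of the techniques listed above, adds a position-dependent vector to each token embedding. The sinusoidal form from the paper is PE(pos, 2i) = sin(pos / 10000^(2i/d_model)) and PE(pos, 2i+1) = cos(pos / 10000^(2i/d_model)). A minimal pure-Python sketch follows. The function name and the small sizes in the example are illustrative assumptions.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal positional encoding from "Attention Is All You Need".

    PE[pos][2i]   = sin(pos / 10000 ** (2i / d_model))
    PE[pos][2i+1] = cos(pos / 10000 ** (2i / d_model))
    """
    pe = [[0.0] * d_model for _ in range(seq_len)]
    for pos in range(seq_len):
        for i in range(0, d_model, 2):  # i is the even index 2i
            angle = pos / (10000 ** (i / d_model))
            pe[pos][i] = math.sin(angle)
            if i + 1 < d_model:         # guard for an odd d_model
                pe[pos][i + 1] = math.cos(angle)
    return pe


pe = positional_encoding(seq_len=4, d_model=6)
print(pe[0])  # [0.0, 1.0, 0.0, 1.0, 0.0, 1.0]: position 0 gives sin(0), cos(0) pairs
```

Each dimension pair oscillates at a different wavelength. Nearby positions therefore get similar vectors, and distant positions get distinguishable ones.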

Code AUTOENCODERS w/ Python + KERAS Layers (Colab, TensorFlow2, Autumn 2022)

An elegant way to code AUTOENCODERS with KERAS layers in TensorFlow2 on COLAB with Python. Autoencoders are applied to dimensionality reduction where PCA fails on non-linear data. A coding example on COLAB shows the way forward to code your own AUTOENCODER for your low-dimensional latent sp

From playlist Stable Diffusion / Latent Diffusion models for Text-to-Image AI

Code AUTOENCODER in Python 2022 to de-noise Photos (COLAB, KERAS, Convolutional2D, Tensorflow2)

AUTOENCODERS with Conv2D KERAS layers de-noise a photo, with Python code to follow along! Convolutional Neural Networks build a VISION NN, which will be the core component of the AUTOENCODER to de-noise pictures, with KERAS as the high-level API. Easily design your own autoencoder. Official Tens

From playlist Stable Diffusion / Latent Diffusion models for Text-to-Image AI

Transformer Decoder coded from scratch

RESOURCES [ 1 🔎] Blowing up the decoder architecture: https:

From playlist Transformers from scratch

[Transformer] Attention Is All You Need | AISC Foundational

22 October 2018
For slides and more information, visit https://aisc.ai.science/events/2018-10-22
Paper: https://arxiv.org/abs/1706.03762
Speaker: Joseph Palermo (Dessa); Host: Insight
Attention Is All You Need: The dominant sequence transduction models are based on

From playlist Natural Language Processing
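The paper's core operation is scaled dot-product attention, Attention(Q, K, V) = softmax(QK^T / sqrt(d_k)) V. A minimal pure-Python sketch over lists of row vectors follows. The `causal` flag is an illustrative addition that masks future positions, as a Transformer decoder does. The sketch omits the learned projections and the multiple heads of the full model.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V, causal=False):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Q, K, V are lists of row vectors, one row per position. With causal=True,
    position i may attend only to positions j <= i.
    """
    d_k = len(K[0])
    out = []
    for i, q in enumerate(Q):
        scores = []
        for j, k in enumerate(K):
            if causal and j > i:
                scores.append(float("-inf"))  # masked: its weight becomes exp(-inf) = 0
            else:
                scores.append(sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k))
        weights = softmax(scores)
        # Each output row is the attention-weighted average of the value rows.
        out.append([sum(w * v[c] for w, v in zip(weights, V)) for c in range(len(V[0]))])
    return out


Q = K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 2.0], [3.0, 4.0]]
out = attention(Q, K, V, causal=True)
print(out[0])  # [1.0, 2.0]: the first position can only attend to itself
```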

[BERT] Pretrained Deep Bidirectional Transformers for Language Understanding (algorithm) | TDLS

Toronto Deep Learning Series. Host: Ada + @ML Explained - Aggregate Intellect - AI.SCIENCE. Date: Nov 6th, 2018.

From playlist Natural Language Processing

The Transformer for language translation (NLP video 18)

We cover an implementation of the transformer architecture, as described in the "Attention is all you need" paper. Transformers offer an approach to language translation that uses neither RNNs nor CNNs. We address the task of translation from French to English, and find that we get higher

From playlist fast.ai Code-First Intro to Natural Language Processing

Semi Supervised Learning - Session 5

Exercise continued: model (dimensions, layer types); Q&A: latent space with respect to loss components; loss function; train and test methods.

From playlist Unsupervised and Weakly Supervised Learning

Transformer Neural Networks - EXPLAINED! (Attention is all you need)

From playlist Transformer Neural Networks