Viswanath Nagarajan: Approximation Friendly Discrepancy Rounding

We consider the general problem of rounding a fractional vector to an integral vector while (approximately) satisfying a number of linear constraints. Randomized rounding and discrepancy-based rounding are two of the strongest rounding methods known. However, these algorithms are very diffe

From playlist HIM Lectures: Trimester Program "Combinatorial Optimization"

From playlist Algorithms 1

Multiplying Fractions - Positive Only

This video provides a lesson on how to multiply fractions and also explains what is happening when we multiply fractions. Complete Video Library: http://www.mathispower4u.com Search by Topic: http://www.mathispower4u.wordpress.com

From playlist Multiplying and Dividing Fractions

DeepMind x UCL | Deep Learning Lectures | 11/12 | Modern Latent Variable Models

This lecture, by DeepMind Research Scientist Andriy Mnih, explores latent variable models, a powerful and flexible framework for generative modelling. After introducing this framework along with the concept of inference, which is central to it, Andriy focuses on two types of modern latent

From playlist Learning resources

14 Machine Learning: Decision Tree

Lecture on machine learning prediction with decision trees. A simple, intuitive prerequisite for more powerful ensemble tree methods. Follow along with the demonstration in Python: https://github.com/GeostatsGuy/PythonNumericalDemos/blob/master/SubsurfaceDataAnalytics_DecisionTree.ipynb

From playlist Machine Learning

20 Data Analytics: Decision Tree

Lecture on decision tree-based machine learning with workflows in R and Python and linkages to bagging, boosting and random forest.

From playlist Data Analytics and Geostatistics

15b Machine Learning: Gradient Boosting

Lecture on ensemble machine learning with boosting, including a demonstration of tree-based boosting.

From playlist Machine Learning

The Neuroscience of Addiction - with Marc Lewis

Neuroscientist and former addict Marc Lewis makes the case that addiction isn't a disease at all, although it has been recently branded as such. Watch the Q&A: https://www.youtube.com/watch?v=pEjMi1OPnYY Subscribe for regular science videos: http://bit.ly/RiSubscRibe Marc's book "The Biol

From playlist Ri Talks

Aaditya Ramdas: Universal inference using the split likelihood ratio test

CIRM VIRTUAL EVENT Recorded during the meeting "Mathematical Methods of Modern Statistics 2" on June 05, 2020 by the Centre International de Rencontres Mathématiques (Marseille, France) Filmmaker: Guillaume Hennenfent Find this video and other talks given by worldwide mathematicians

From playlist Virtual Conference

Merge Sort 4 – Towards an Implementation (Recursive Function)

This is the fourth in a series of videos about the merge sort. It includes a description of some pseudocode which combines into a single recursive function a helper program for splitting a list, and a helper program for merging a pair of ordered lists. This video describes how successive

From playlist Sorting Algorithms
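
The structure described for the merge sort (one recursive function built from a splitting helper and a merging helper) can be sketched roughly as follows. This is an illustrative Python sketch, not the pseudocode from the video; the names `split`, `merge`, and `merge_sort` are assumptions chosen for this example.

```python
def split(items):
    """Split a list into two halves (hypothetical helper name)."""
    mid = len(items) // 2
    return items[:mid], items[mid:]


def merge(left, right):
    """Merge two already-ordered lists into one ordered list."""
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        # Take the smaller head element; <= keeps equal items in order.
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    # At most one of these two slices is non-empty.
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged


def merge_sort(items):
    """Recursively split, sort each half, then merge the results."""
    if len(items) <= 1:
        return list(items)  # base case: nothing left to split
    left, right = split(items)
    return merge(merge_sort(left), merge_sort(right))
```

Calling `merge_sort` on an unordered list returns a new ordered list and leaves the input unchanged.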

Addition GONE WRONG! (Fixing Roundoff Error, part 1) #MegaFavNumbers

Part 2: https://youtu.be/fojaJcAk1sQ This is my entry in the #MegaFavNumbers collaboration, about a case of catastrophic roundoff error I ran into, and how floating-point representation consistently produces 16,777,216. Part 2 explains and compares methods of addressing this sort of roun

From playlist MegaFavNumbers
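
The number 16,777,216 is 2^24, the point at which single-precision (binary32) floating point can no longer represent every integer. A minimal sketch of how repeated addition gets stuck there, assuming the computation uses 32-bit floats (the description does not say which scenario the video covers; the helper names `to_f32` and `f32_count_up` are invented for this example):

```python
import struct


def to_f32(x: float) -> float:
    """Round a Python float (binary64) to the nearest binary32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def f32_count_up(start: float, steps: int) -> float:
    """Add 1.0 `steps` times, rounding to binary32 after every addition."""
    total = to_f32(start)
    for _ in range(steps):
        # 16777217 is not representable in binary32, so once the total
        # reaches 16777216 the sum rounds back to 16777216 every time.
        total = to_f32(total + 1.0)
    return total
```

Starting just below 2^24 and adding 1.0 a hundred times, for example `f32_count_up(16777210.0, 100)`, leaves the total at 16777216.0 rather than 16777310.0.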

Learn how to determine the product of two fractions with unlike denominators

👉 Learn how to multiply fractions. To multiply fractions, we multiply the numerator by the numerator and the denominator by the denominator. We then reduce the fraction. Reducing the fraction writes it in simplest form. It is very important to understand t

From playlist How to Multiply Fractions
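
The rule described above (multiply numerator by numerator, denominator by denominator, then reduce) can be sketched in a few lines of Python. The function name `multiply_fractions` and the tuple return value are assumptions for this example, not something taken from the video.

```python
from math import gcd


def multiply_fractions(a_num, a_den, b_num, b_den):
    """Multiply a_num/a_den by b_num/b_den and reduce the result."""
    if a_den == 0 or b_den == 0:
        raise ZeroDivisionError("a denominator must not be zero")
    num = a_num * b_num   # numerator times numerator
    den = a_den * b_den   # denominator times denominator
    g = gcd(num, den)     # greatest common divisor, used to reduce
    return num // g, den // g
```

For example, 2/3 times 3/4 gives 6/12 before reducing, so `multiply_fractions(2, 3, 3, 4)` returns `(1, 2)`.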

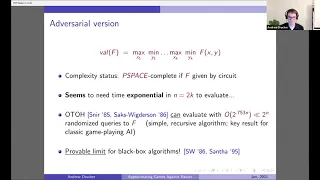

An Improved Exponential-Time Approximation Algorithm for Fully-Alternating Games... - Andrew Drucker

Computer Science/Discrete Mathematics Seminar I Topic: An Improved Exponential-Time Approximation Algorithm for Fully-Alternating Games Against Nature Speaker: Andrew Drucker Affiliation: University of Chicago Date: January 25, 2021 For more videos, please visit http://video.ias.edu

From playlist Mathematics

Statistical Learning: 8.2 More details on Trees

Statistical Learning, featuring Deep Learning, Survival Analysis and Multiple Testing You are able to take Statistical Learning as an online course on EdX, and you are able to choose a verified path and get a certificate for its completion: https://www.edx.org/course/statistical-learning

From playlist Statistical Learning

Quicksort 2 – Alternative Algorithm

This video describes the principle of the quicksort, which takes a ‘divide and conquer’ approach to the problem of sorting an unordered list. In this particular algorithm, the approach to partitioning a list does not rely on the explicit nomination of a pivot value, but still makes use of

From playlist Sorting Algorithms

Stanford Seminar - Song Han of Stanford University

"Deep Compression and EIE: Deep Neural Network Model Compression and Hardware Acceleration" - Song Han of Stanford University Support for the Stanford Colloquium on Computer Systems Seminar Series provided by the Stanford Computer Forum. Speaker Abstract and Bio can be found here: http:/

From playlist Engineering