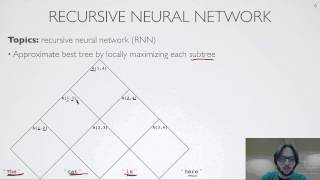

Recursive neural network

A recursive neural network is a kind of deep neural network created by applying the same set of weights recursively over a structured input, to produce a structured prediction over variable-size input structures, or a scalar prediction on it, by traversing a given structure in topological order. Recursive neural networks, sometimes abbreviated as RvNNs, have been successful, for instance, in learning sequence and tree structures in natural language processing, mainly phrase and sentence continuous representations based on word embedding. RvNNs have first been introduced to learn distributed representations of structure, such as logical terms.Models and general frameworks have been developed in further works since the 1990s. (Wikipedia).