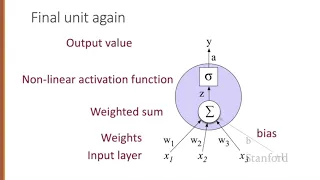

Neural Networks 1 Neural Units

From playlist Week 5: Neural Networks

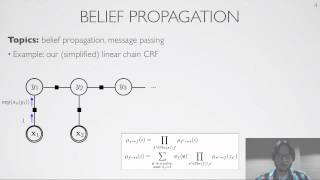

Practical 4.0 – RNN, vectors and sequences

Recurrent Neural Networks – Vectors and sequences. Full project: https://github.com/Atcold/torch-Video-Tutorials Links to the papers: Vinyals et al. (2016) https://arxiv.org/abs/1609.06647 Zaremba & Sutskever (2015) https://arxiv.org/abs/1410.4615 Cho et al. (2014) https://arxiv.org/abs/1406

From playlist Deep-Learning-Course

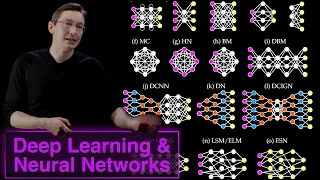

Neural Network Architectures & Deep Learning

This video describes the variety of neural network architectures available to solve various problems in science and engineering. Examples include convolutional neural networks (CNNs), recurrent neural networks (RNNs), and autoencoders. Book website: http://databookuw.com/ Steve Brunton

From playlist Data Science

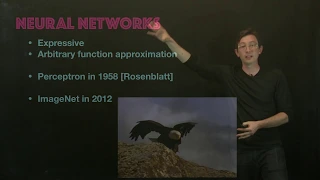

This lecture gives an overview of neural networks, which play an important role in machine learning today. Book website: http://databookuw.com/ Steve Brunton's website: eigensteve.com

From playlist Intro to Data Science

Graph Neural Networks, Session 2: Graph Definition

Types of Graphs Common data structures for storing graphs

From playlist Graph Neural Networks (Hands-on)

In this video, I present some applications of artificial neural networks and describe how such networks are typically structured. My hope is to create another video (soon) in which I describe how neural networks are actually trained from data.

From playlist Machine Learning

Seminar 9: Surya Ganguli - Statistical Physics of Deep Learning

MIT RES.9-003 Brains, Minds and Machines Summer Course, Summer 2015 View the complete course: https://ocw.mit.edu/RES-9-003SU15 Instructor: Surya Ganguli Describes how the application of methods from statistical physics to the analysis of high-dimensional data can provide theoretical insi

From playlist MIT RES.9-003 Brains, Minds and Machines Summer Course, Summer 2015

11.2: Neuroevolution: Crossover and Mutation - The Nature of Code

In this video I begin the process of coding a neuroevolution simulation and add copy() and mutate() methods to the neural network library. 🎥 Previous Video: https://youtu.be/lu5ul7z4icQ 🔗 Toy-Neural-Network-JS: https://github.com/CodingTrain/Toy-Neural-Network-JS 🔗 Nature of Code: http://natu

From playlist 11: Neuroevolution - The Nature of Code

GRCon21 - An Open Channel Identifier using GNU Radio

Presented by Ashley Beard and Steven Sharp at GNU Radio Conference 2021. In this paper, we address the problem of radio spectrum crowding by using a stochastic gradient descent neural network algorithm on simulated cognitive radio data to identify open and closed channels within a specifie

From playlist GRCon 2021

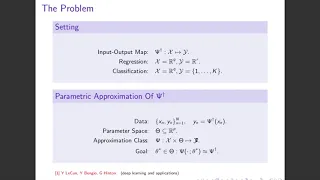

2020.05.28 Andrew Stuart - Supervised Learning between Function Spaces

Consider separable Banach spaces X and Y, and equip X with a probability measure m. Let F: X \to Y be an unknown operator. Given data pairs {x_j,F(x_j)} with {x_j} drawn i.i.d. from m, the goal of supervised learning is to approximate F. The proposed approach is motivated by the recent su

From playlist One World Probability Seminar

Statistical mechanics of deep learning by Surya Ganguli

Statistical Physics Methods in Machine Learning DATE: 26 December 2017 to 30 December 2017 VENUE: Ramanujan Lecture Hall, ICTS, Bengaluru The theme of this Discussion Meeting is the analysis of distributed/networked algorithms in machine learning and theoretical computer science in the

From playlist Statistical Physics Methods in Machine Learning

Frank Noé: "Fundamentals of Artificial Intelligence and Machine Learning" (Part 2/2)

Watch part 1/2 here: https://youtu.be/5f-u0hgiLXw Mathematical Challenges and Opportunities for Autonomous Vehicles Tutorials 2020 "Fundamentals of Artificial Intelligence and Machine Learning" (Part 2/2) Frank Noé - Freie Universität Berlin Institute for Pure and Applied Mathematics, U

From playlist Mathematical Challenges and Opportunities for Autonomous Vehicles 2020

Towards Analyzing Normalizing Flows by Navin Goyal

Program Advances in Applied Probability II (ONLINE) ORGANIZERS Vivek S Borkar (IIT Bombay, India), Sandeep Juneja (TIFR Mumbai, India), Kavita Ramanan (Brown University, Rhode Island), Devavrat Shah (MIT, US) and Piyush Srivastava (TIFR Mumbai, India) DATE & TIME 04 January 2021 to 08 Janu

From playlist Advances in Applied Probability II (Online)

Stochastic Gradient Descent: where optimization meets machine learning- Rachel Ward

2022 Program for Women and Mathematics: The Mathematics of Machine Learning Topic: Stochastic Gradient Descent: where optimization meets machine learning Speaker: Rachel Ward Affiliation: University of Texas, Austin Date: May 26, 2022 Stochastic Gradient Descent (SGD) is the de facto op

From playlist Mathematics

Tom Goldstein: "What do neural loss surfaces look like?"

New Deep Learning Techniques 2018 "What do neural loss surfaces look like?" Tom Goldstein, University of Maryland Abstract: Neural network training relies on our ability to find “good” minimizers of highly non-convex loss functions. It is well known that certain network architecture desi

From playlist New Deep Learning Techniques 2018

Live Stream #124.2 - Linting and Neuroevolution - Part 2

In part 2 of Friday's live stream, I begin discussing the topic "neuroevolution" which will be the subject of chapter 11 of the next edition of the Nature of Code book. (http://natureofcode.com/) 🎥 Live Stream Part 1: https://youtu.be/sIeN74GrYHE 22:54 - Neuroevolution Part 1 53:50 - Neu

From playlist Live Stream Archive