Probability: We define geometric random variables and find their mean, variance, and moment generating function. The key tools are the geometric power series and its derivatives.

From playlist Probability
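
As a rough sketch of the computation the geometric-random-variable entry above describes, assume the convention (not stated in the listing) that $X$ counts trials up to and including the first success, so $P(X=k) = q^{k-1}p$ for $k = 1, 2, \dots$ with $q = 1 - p$ and $0 < p \le 1$. Differentiating the geometric series $\sum_{k \ge 0} q^k = \frac{1}{1-q}$ term by term then gives:

$$
\begin{aligned}
E[X] &= \sum_{k=1}^{\infty} k\,q^{k-1}p = p\,\frac{d}{dq}\!\left(\frac{1}{1-q}\right) = \frac{p}{(1-q)^2} = \frac{1}{p},\\
E[X(X-1)] &= \sum_{k=2}^{\infty} k(k-1)\,q^{k-1}p = pq\,\frac{d^2}{dq^2}\!\left(\frac{1}{1-q}\right) = \frac{2pq}{(1-q)^3} = \frac{2q}{p^2},\\
\operatorname{Var}(X) &= E[X(X-1)] + E[X] - E[X]^2 = \frac{2q}{p^2} + \frac{1}{p} - \frac{1}{p^2} = \frac{q}{p^2},\\
M_X(t) &= \sum_{k=1}^{\infty} e^{tk}q^{k-1}p = p\,e^{t}\sum_{k=1}^{\infty}\left(q e^{t}\right)^{k-1} = \frac{p\,e^{t}}{1 - q e^{t}}, \qquad t < -\ln q .
\end{aligned}
$$

Under the other common convention, where $X$ counts failures before the first success, the mean shifts to $q/p$ while the variance stays $q/p^2$.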

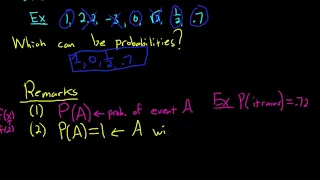

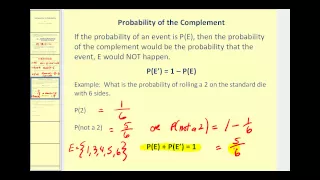

Introduction to Probability

From playlist Statistics

This video introduces probability and determines the probability of basic events. http://mathispower4u.yolasite.com/

From playlist Counting and Probability

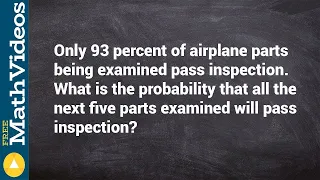

How to find the probability of consecutive events

👉 Learn how to find the conditional probability of an event. Probability is the chance of an event occurring or not occurring. The probability of an event is given by the number of outcomes divided by the total possible outcomes. Conditional probability is the chance of an event occurring

From playlist Probability
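
The counting definition in the entry above (favorable outcomes divided by total outcomes) and the conditional version can be sketched in a few lines of Python. The two-dice sample space and the helper names `prob` and `cond_prob` are illustrative assumptions, not taken from the video.

```python
from fractions import Fraction
from itertools import product

# Sample space: all ordered outcomes of two fair six-sided dice
# (an illustrative choice, not from the source).
outcomes = list(product(range(1, 7), repeat=2))


def prob(event):
    """Classical probability: number of favorable outcomes / total outcomes."""
    favorable = [o for o in outcomes if event(o)]
    return Fraction(len(favorable), len(outcomes))


def cond_prob(event, given):
    """P(event | given) = P(event and given) / P(given)."""
    return prob(lambda o: event(o) and given(o)) / prob(given)


def sum_is_8(o):
    return o[0] + o[1] == 8


def first_is_even(o):
    return o[0] % 2 == 0


p_sum8 = prob(sum_is_8)                               # 5 of 36 outcomes
p_sum8_given_even = cond_prob(sum_is_8, first_is_even)  # 3 of 18 outcomes
print(p_sum8, p_sum8_given_even)
```

Using `Fraction` keeps the results exact, which makes it easy to compare them with hand counts.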

Overview of random variables in probability

From playlist Unit 6 Probability B: Random Variables & Binomial Probability & Counting Techniques

(PP 3.1) Random Variables - Definition and CDF

(0:00) Intuitive examples. (1:25) Definition of a random variable. (6:10) CDF of a random variable. (8:28) Distribution of a random variable. A playlist of the Probability Primer series is available here: http://www.youtube.com/view_play_list?p=17567A1A3F5DB5E4

From playlist Probability Theory

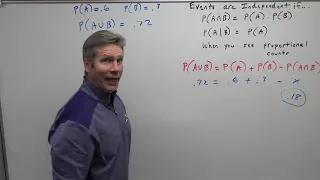

More Help with Independence Part 2 (Indep FE pt 2)

More insight into the probability concept of independence

From playlist Unit 5 Probability A: Basic Probability

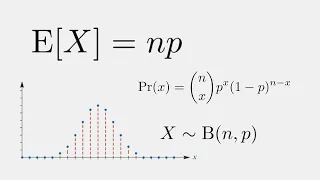

Expected Value of a Binomial Probability Distribution

Today, we derive the formula to find the expected value or the mean of a discrete random variable which follows the binomial probability distribution.

From playlist Probability
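
For the binomial entry above, the standard result is $E[X] = np$. A minimal numerical check, assuming the usual pmf $P(X=k) = \binom{n}{k}p^k(1-p)^{n-k}$ and illustrative values $n = 10$, $p = 3/10$ (the function names are hypothetical), sums $k \cdot P(X=k)$ directly:

```python
from fractions import Fraction
from math import comb


def binomial_pmf(n, p, k):
    """P(X = k) for X ~ Binomial(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def binomial_mean(n, p):
    """Expected value computed from the definition: sum of k * P(X = k)."""
    return sum(k * binomial_pmf(n, p, k) for k in range(n + 1))


# Illustrative parameters; Fraction keeps the arithmetic exact.
n, p = 10, Fraction(3, 10)
mean = binomial_mean(n, p)
print(mean == n * p)
```

This only confirms the formula for one choice of parameters; the derivation itself is what the video covers.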

Average Treatment Effects: Propensity Scores

Professor Stefan Wager discusses the propensity score, and inverse-propensity weighting.

From playlist Machine Learning & Causal Inference: A Short Course

Probability - Quantum and Classical

The Law of Large Numbers and the Central Limit Theorem. Probability explained with easy to understand 3D animations. Correction: Statement at 13:00 should say "very close" to 50%.

From playlist Physics

17. Reinforcement Learning, Part 2

MIT 6.S897 Machine Learning for Healthcare, Spring 2019. Instructor: David Sontag, Barbra Dickerman. View the complete course: https://ocw.mit.edu/6-S897S19 YouTube Playlist: https://www.youtube.com/playlist?list=PLUl4u3cNGP60B0PQXVQyGNdCyCTDU1Q5j In the first half, Prof. Sontag discusses h

From playlist MIT 6.S897 Machine Learning for Healthcare, Spring 2019

Average Treatment Effects: Double Robustness

Professor Stefan Wager talks about inference via double-robustness.

From playlist Machine Learning & Causal Inference: A Short Course

Causal inference with binary outcomes subject to both missingness and misclassification - Grace Yi

Virtual Workshop on Missing Data Challenges in Computation Statistics and Applications. Topic: Causal inference with binary outcomes subject to both missingness and misclassification. Speaker: Grace Yi. Date: September 9, 2020. For more videos, please visit http://video.ias.edu

From playlist Mathematics

Causal inference in observational studies: Emma McCoy, Imperial College London

Emma McCoy is the Vice-Dean (Education) for the Faculty of Natural Sciences and Professor of Statistics in the Mathematics Department at Imperial College London. Her current research interests are in developing time-series and causal inference methodology for robust estimation of treatment

From playlist Women in data science conference

MIT 6.S897 Machine Learning for Healthcare, Spring 2019. Instructor: David Sontag. View the complete course: https://ocw.mit.edu/6-S897S19 YouTube Playlist: https://www.youtube.com/playlist?list=PLUl4u3cNGP60B0PQXVQyGNdCyCTDU1Q5j This is the 2020 version of the lecture delivered via Zoom, d

From playlist MIT 6.S897 Machine Learning for Healthcare, Spring 2019

HTE: Confounding-Robust Estimation

Professor Stefan Wager discusses general principles for the design of robust, machine learning-based algorithms for treatment heterogeneity in observational studies, as well as the application of these principles to design more robust causal forests (as implemented in GRF).

From playlist Machine Learning & Causal Inference: A Short Course

Discrete stochastic simulation of spatially inhomogeneous biochemical systems

Linda Petzold (University of California, Santa Barbara). Plenary Lecture from the 1st PRIMA Congress, 2009. Plenary Lecture 6. Abstract: In microscopic systems formed by living cells, the small numbers of some reactant molecules can result in dynamical behavior that is discrete and stocha

From playlist PRIMA2009

Learn to find the "or" probability from a tree diagram

👉 Learn how to find the conditional probability of an event. Probability is the chance of an event occurring or not occurring. The probability of an event is given by the number of outcomes divided by the total possible outcomes. Conditional probability is the chance of an event occurring

From playlist Probability

Loss Functions: Policy Learning

Professor Stefan Wager distills best practices for causal inference into loss functions.

From playlist Machine Learning & Causal Inference: A Short Course