How to set up a lesson and add a content page

Shows you the options you need to select to set up a formative lesson with a content page.

From playlist How to create a lesson in your course

How to set a passing grade for a lesson

This video shows you how to open up the rest of your course only after the student earns a passing grade in one lesson.

From playlist How to create a lesson in your course

From playlist Machine Learning

In this video, you’ll learn more about the different types of learning styles, to see which one works best for you! Visit https://www.gcflearnfree.org/ to learn even more. We hope you enjoy!

From playlist Fundamentals of Learning

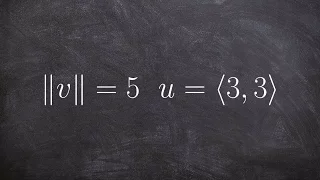

How to find the vector with given magnitude and same direction of another vector

http://www.freemathvideos.com In this video series you will learn multiple math operations. I teach in front of a live classroom showing my students how to solve math problems step by step. My math tutorials should be used to review previous lessons, complete your homework, or study for

From playlist Vectors

Learn Algebra 6 : Rate of Change

New Video Every Day at 1 PM EST!!! [ Click Notification Bell ] In this video I focus on Rate of Change, the Intercept Method and the Point to Point Method with numerous examples. I was asked by a local teacher to create an Algebra course that quickly reviewed all the key Algebra knowledge

From playlist Learn Algebra

New Video Every Day at 1 PM EST!!! [ Click Notification Bell ] I was asked by a local teacher to create an Algebra course that quickly reviewed all the key knowledge required. This course is centered around showing how to solve Algebra problems. For best results, copy down the problem, watc

From playlist Machine Learning & Data Science

If Your Parents Didn’t Listen to You Properly...

There are few more important tasks for parents than to be able to listen properly to their children. It’s on the basis of having been listened to with close sympathy and imagination that a child will later on be able to accept themselves. Sign up to our new newsletter and get 10% off your

From playlist SELF

The intuitive idea of a function

Learning Objectives: Express the idea of a function as an "instruction", a "graph" and a "machine" that take inputs and spit out outputs. However, there are constraints: every input must have a corresponding output, and more specifically just ONE corresponding output.

From playlist Discrete Math (Full Course: Sets, Logic, Proofs, Probability, Graph Theory, etc)

Nexus Trimester - Ronitt Rubinfeld (MIT and Tel Aviv University) 2/2

Testing properties of distributions over big domains: information theoretic quantities. Ronitt Rubinfeld (MIT and Tel Aviv University), March 10, 2016. Abstract: We survey several works regarding the complexity of testing global properties of discrete distributions, when given access to o

From playlist 2016-T1 - Nexus of Information and Computation Theory - CEB Trimester

DeepMind x UCL RL Lecture Series - Multi-step & Off Policy [11/13]

Research Scientist Hado van Hasselt discusses multi-step and off-policy algorithms, including various techniques for variance reduction. Slides: https://dpmd.ai/offpolicy Full video lecture series: https://dpmd.ai/DeepMindxUCL21

From playlist Learning resources

Fairness and robustness in machine learning – a formal methods perspective - Aditya Nori, Microsoft

With the range and sensitivity of algorithmic decisions expanding at breakneck speed, it is imperative that we aggressively investigate fairness and bias in decision-making programs. First, we show that a number of recently proposed formal definitions of fairness can be encoded as proba

From playlist Logic and learning workshop

Zuowei Shen: "Deep Learning: Approximation of functions by composition"

New Deep Learning Techniques 2018 "Deep Learning: Approximation of functions by composition" Zuowei Shen, National University of Singapore, Mathematics Abstract: The primary task in supervised learning is approximating some function x ↦ f(x) through samples drawn from a probability distr

From playlist New Deep Learning Techniques 2018

From playlist CS294-112 Deep Reinforcement Learning Sp17

Lecture 13.4 — The wake sleep algorithm [Neural Networks for Machine Learning]

Lecture from the course Neural Networks for Machine Learning, as taught by Geoffrey Hinton (University of Toronto) on Coursera in 2012. Link to the course (login required): https://class.coursera.org/neuralnets-2012-001

From playlist [Coursera] Neural Networks for Machine Learning — Geoffrey Hinton

Lecture 13D : The wake-sleep algorithm

Neural Networks for Machine Learning by Geoffrey Hinton [Coursera 2013] Lecture 13D : The wake-sleep algorithm

From playlist Neural Networks for Machine Learning by Professor Geoffrey Hinton [Complete]

0.2.3 Homework and LAFF (2015)

From playlist Week 0 (Spring 2015)