From playlist IR17 Probabilistic Model of IR

Relevance model 1: Bernoulli sets vs. multinomial urns

[http://bit.ly/RModel] A relevance model is the language model of the relevant class. In this video we look at the difference between the multinomial model (the one used in relevance models) and the multiple-Bernoulli model, which forms the basis of the classical probabilistic models.
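To make the contrast concrete, here is a minimal Python sketch that scores the same query under both event models. The toy vocabulary and probabilities (`P_PRESENT`, `P_WORD`) are made up for illustration. The multinomial coefficient is dropped because it is the same for every urn scored against a fixed query.

```python
import math
from collections import Counter

# Hypothetical toy parameters for a three-word vocabulary.
# Multiple-Bernoulli: one independent P(word present) per word (need not sum to 1).
P_PRESENT = {"apple": 0.5, "pie": 0.4, "recipe": 0.1}
# Multinomial: a single urn, so the word probabilities sum to 1.
P_WORD = {"apple": 0.5, "pie": 0.3, "recipe": 0.2}


def bernoulli_log_likelihood(query, p_present):
    """Query as a binary vector: every vocabulary word contributes, repeats are ignored."""
    present = set(query)
    return sum(
        math.log(p) if w in present else math.log(1.0 - p)
        for w, p in p_present.items()
    )


def multinomial_log_likelihood(query, p_word):
    """Query as a sequence of draws from one urn: each occurrence counts, absent words
    contribute nothing. The multinomial coefficient is omitted (constant for a fixed query)."""
    return sum(c * math.log(p_word[w]) for w, c in Counter(query).items())


print(bernoulli_log_likelihood(["apple", "apple", "pie"], P_PRESENT))
print(multinomial_log_likelihood(["apple", "apple", "pie"], P_WORD))
```

Repeating "apple" leaves the Bernoulli score unchanged but lowers the multinomial score by another log 0.5. This is the sensitivity to term frequency that the multinomial view adds.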

From playlist IR18 Relevance Model

Relevance model 5: summary of assumptions

[http://bit.ly/RModel] The relevance model ranking is based on the probability ranking principle (PRP). It uses the background (corpus) model as the language model of the non-relevant class (just as the classical model does), but introduces a novel estimate for the relevance model. The estimate is ba…
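When both classes are modelled as multinomials, the PRP odds ratio factorizes over the words of a document: log P(D|R)/P(D|N) ≈ Σ_w c(w, D) log P(w|R)/P(w|C). Below is a minimal sketch of that score. It assumes a relevance model `p_rel` that is already smoothed, so no word occurring in the document has probability zero, and a background model `p_bg`. Both dictionaries are hypothetical toy values.

```python
import math
from collections import Counter


def prp_log_odds(doc_tokens, p_rel, p_bg):
    """log P(D|R) / P(D|N) under multinomial models, with the background (corpus)
    model p_bg standing in for the non-relevant class N."""
    return sum(
        c * math.log(p_rel[w] / p_bg[w]) for w, c in Counter(doc_tokens).items()
    )


# Hypothetical toy models; p_rel is assumed to be already smoothed.
p_rel = {"apple": 0.4, "pie": 0.4, "the": 0.2}
p_bg = {"apple": 0.05, "pie": 0.05, "the": 0.9}

docs = {"d1": ["apple", "pie"], "d2": ["the", "the"]}
ranking = sorted(docs, key=lambda d: prp_log_odds(docs[d], p_rel, p_bg), reverse=True)
print(ranking)  # ['d1', 'd2']
```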

From playlist IR18 Relevance Model

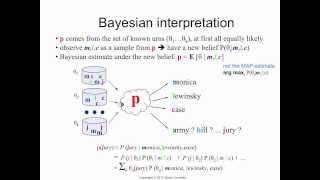

Relevance model 4: Bayesian interpretation

[http://bit.ly/RModel] Another way to interpret the relevance model is through Bayesian estimation: the relevance model could be any one of a large set of urns. We know what the urns are but not which one is correct, so we compute the posterior probability of each candidate urn, and combi…
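The Bayesian reading turns into a posterior-weighted mixture of the candidate urns: P(M|Q) ∝ P(M) ∏_i P(q_i|M), then P(w|R) = Σ_M P(w|M) P(M|Q). Below is a minimal sketch. It assumes the candidate urns are already-smoothed word distributions, for example one per top-ranked document, and uses a uniform prior unless one is passed in. `posterior_over_urns` and `relevance_model` are hypothetical helper names.

```python
import math


def posterior_over_urns(query, urns, prior=None):
    """P(M | Q) proportional to P(M) * prod_i P(q_i | M), normalized in log space for stability."""
    prior = prior or [1.0 / len(urns)] * len(urns)
    log_scores = [
        math.log(p_m) + sum(math.log(urn[q]) for q in query)
        for p_m, urn in zip(prior, urns)
    ]
    top = max(log_scores)
    weights = [math.exp(s - top) for s in log_scores]
    total = sum(weights)
    return [w / total for w in weights]


def relevance_model(query, urns, prior=None):
    """P(w | R) = sum_M P(w | M) P(M | Q): average the urns, weighted by their posterior."""
    post = posterior_over_urns(query, urns, prior)
    vocab = set().union(*urns)
    return {w: sum(pm * urn.get(w, 0.0) for pm, urn in zip(post, urns)) for w in vocab}


# Hypothetical candidate urns (already smoothed, each sums to 1).
urns = [
    {"apple": 0.5, "pie": 0.4, "car": 0.1},
    {"apple": 0.1, "pie": 0.1, "car": 0.8},
]
rm = relevance_model(["apple"], urns)
print(sorted(rm.items(), key=lambda kv: kv[1], reverse=True))
```

The query is only "apple", yet "pie" ends up ahead of "car". The urn that best explains the query also favours "pie".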

From playlist IR18 Relevance Model

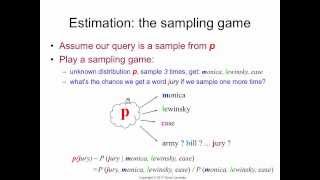

Relevance model 2: the sampling game

[http://bit.ly/RModel] How can we estimate the language model of the relevant class if we have no examples of relevant documents? We play a sampling game as follows. The relevance model is an urn with unknown parameters. We draw several samples from it and observe the query. What is the pr…
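The game can be written down as follows. This assumes that the next word w and the query words q_1, …, q_k are drawn independently from the same unknown urn M, and that M ranges over a set 𝓜 of candidate urns with prior P(M). It is a sketch of the standard relevance-model estimate, not necessarily the exact derivation used in the video:

```latex
P(w \mid R) \approx P(w \mid q_1, \dots, q_k)
  = \frac{P(w, q_1, \dots, q_k)}{\sum_{v} P(v, q_1, \dots, q_k)},
\qquad
P(w, q_1, \dots, q_k) = \sum_{M \in \mathcal{M}} P(M)\, P(w \mid M) \prod_{i=1}^{k} P(q_i \mid M)
```

The denominator is just P(q_1, …, q_k). Each urn's P(v | M) sums to 1 over the vocabulary v, so summing the joint over v removes the factor for w.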

From playlist IR18 Relevance Model

Relevance model 7: ranking functions

[http://bit.ly/RModel] The probability ranking principle (PRP) seems like the obvious ranking function for relevance models, but when we use it in the form of the odds ratio (as in the classical model), we bias our rankings towards the wrong type of document. A better approach is to use th…
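The description is cut off before it names the better approach. One alternative commonly used with relevance models is to rank documents by the negative cross-entropy between the relevance model and a smoothed document model. This is rank-equivalent to negative KL divergence, because the entropy of the relevance model does not depend on the document. The sketch below assumes that choice, plus Jelinek-Mercer smoothing with a hypothetical weight `lam`. It is not necessarily what the video recommends.

```python
import math
from collections import Counter


def doc_model(doc_tokens, p_bg, lam=0.5):
    """Jelinek-Mercer smoothing: lam * ML estimate + (1 - lam) * background probability."""
    counts = Counter(doc_tokens)
    length = len(doc_tokens)
    return lambda w: lam * counts[w] / length + (1 - lam) * p_bg[w]


def neg_cross_entropy(p_rel, doc_tokens, p_bg, lam=0.5):
    """sum_w P(w|R) log P(w|D); higher is better, rank-equivalent to -KL(R || D)."""
    p_doc = doc_model(doc_tokens, p_bg, lam)
    return sum(p_r * math.log(p_doc(w)) for w, p_r in p_rel.items())


# Hypothetical relevance and background models (p_bg must cover p_rel's words).
p_rel = {"apple": 0.5, "pie": 0.5}
p_bg = {"apple": 0.1, "pie": 0.1, "the": 0.8}
docs = {"dA": ["apple", "pie", "the"], "dB": ["the", "the", "the"]}
ranking = sorted(docs, key=lambda d: neg_cross_entropy(p_rel, docs[d], p_bg), reverse=True)
print(ranking)  # ['dA', 'dB']
```

Here the sum runs over the relevance model's vocabulary rather than over the document's words. A document is therefore rewarded for covering the words the relevance model considers likely.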

From playlist IR18 Relevance Model

Crash Course IR - Fundamentals

In this lecture we explore two fundamental building blocks of information retrieval (IR): indexing, and ranked retrieval with the TF-IDF and BM25 scoring models. Slides & transcripts are available at: https://github.com/sebastian-hofstaetter/teaching 📖 Check out YouTube's CC - we added our hig…
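Both building blocks fit in a short sketch: an inverted index, plus term-at-a-time TF-IDF scoring over it. It assumes whitespace tokenization and the weighting log(1 + tf) · log(N / df), which is only one of many TF-IDF variants. BM25 replaces this weighting with a saturating, length-normalized one.

```python
import math
from collections import Counter, defaultdict


def build_index(docs):
    """Inverted index: term -> {doc_id: term frequency in that document}."""
    index = defaultdict(dict)
    for doc_id, text in enumerate(docs):
        for term, tf in Counter(text.lower().split()).items():
            index[term][doc_id] = tf
    return index


def tfidf_search(query, index, n_docs):
    """Term-at-a-time scoring with log(1 + tf) * log(N / df); only the posting
    lists of the query terms are read, never the whole collection."""
    scores = defaultdict(float)
    for term in query.lower().split():
        postings = index.get(term)
        if not postings:
            continue
        idf = math.log(n_docs / len(postings))
        for doc_id, tf in postings.items():
            scores[doc_id] += math.log(1 + tf) * idf
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


docs = ["the cat sat", "the dog sat on the cat", "a bird flew"]
index = build_index(docs)
print(tfidf_search("cat dog", index, len(docs)))
```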

From playlist Advanced Information Retrieval 2021 - TU Wien

Probabilistic model 9: BM25 and 2-Poisson

[http://bit.ly/BM-25] To reflect the bursty nature of word occurrences, we use the 2-Poisson model of Harter. It uses a mixture of two Poisson distributions: one for the elite terms (those essential to the meaning of the document), the other for the non-elite terms. Estimation is infeasibl…
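Here is a minimal sketch of the 2-Poisson mixture for a single term, with made-up parameters. `pi_elite` is the fraction of documents that are elite for the term, and `lam_elite` / `lam_non_elite` are the two Poisson means. The sketch also includes BM25's saturating term-frequency factor tf·(k1+1)/(tf+k1). That factor is usually motivated as a simple approximation of the 2-Poisson model's shape, since the mixture's parameters are hard to estimate. All names and values here are illustrative assumptions.

```python
import math


def poisson_pmf(k, lam):
    """P(tf = k) under a Poisson with mean lam."""
    return math.exp(-lam) * lam ** k / math.factorial(k)


def two_poisson_pmf(k, pi_elite, lam_elite, lam_non_elite):
    """Mixture: with prob. pi_elite the document is elite for the term (high mean),
    otherwise non-elite (low mean)."""
    return (pi_elite * poisson_pmf(k, lam_elite)
            + (1 - pi_elite) * poisson_pmf(k, lam_non_elite))


def p_elite_given_tf(k, pi_elite, lam_elite, lam_non_elite):
    """Bayes' rule: posterior probability of eliteness after observing tf = k."""
    joint = pi_elite * poisson_pmf(k, lam_elite)
    return joint / two_poisson_pmf(k, pi_elite, lam_elite, lam_non_elite)


def bm25_tf(k, k1=1.2):
    """BM25's saturating tf factor: grows with k but never exceeds k1 + 1."""
    return k * (k1 + 1) / (k + k1)


# Hypothetical parameters: 10% of documents are elite, means 5.0 vs 0.3.
for tf in (0, 1, 3, 10):
    print(tf, round(p_elite_given_tf(tf, 0.1, 5.0, 0.3), 3), round(bm25_tf(tf), 3))
```

Both columns rise quickly for the first few occurrences and then level off. This is the burstiness the video describes: after a few occurrences, further repeats add little evidence.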

From playlist Probabilistic Model of IR

Dense Retrieval ❤ Knowledge Distillation

In this lecture we learn about the (potential) future of search: dense retrieval (DR). We study the setup, specific models, and how to train DR models. Then we look at how knowledge distillation greatly improves the training of DR models, and how topic-aware sampling helps reach state-of-the-art results.
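Here is a minimal sketch of the two ingredients. Dense retrieval scores a query against precomputed document embeddings with a dot product. Knowledge distillation trains the student to mimic a teacher's scores. For the distillation loss, the sketch uses Margin-MSE, where the student matches the teacher's score margin between a positive and a negative passage. Treat that loss choice as an assumption; the description does not say which loss the lecture uses. The embeddings are toy vectors, and `dense_search` / `margin_mse` are hypothetical helper names.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def dense_search(query_vec, doc_vecs, k=2):
    """Indices of the k documents whose embeddings have the highest dot product with the query."""
    scored = sorted(((dot(query_vec, d), i) for i, d in enumerate(doc_vecs)), reverse=True)
    return [i for _, i in scored[:k]]


def margin_mse(student_pos, student_neg, teacher_pos, teacher_neg):
    """Margin-MSE: mean squared difference between the student's and the teacher's
    score margins (positive minus negative passage) over a batch of triples."""
    errors = [
        ((sp - sn) - (tp - tn)) ** 2
        for sp, sn, tp, tn in zip(student_pos, student_neg, teacher_pos, teacher_neg)
    ]
    return sum(errors) / len(errors)


# Hypothetical 2-d embeddings; a real DR model would produce these with a trained encoder.
doc_vecs = [[0.9, 0.1], [0.1, 0.9], [0.5, 0.5]]
print(dense_search([1.0, 0.0], doc_vecs))  # [0, 2]
```

Matching margins rather than raw scores leaves the student free to use a different score scale than the teacher. This is convenient when a small bi-encoder learns from a much larger cross-encoder.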

From playlist Advanced Information Retrieval 2021 - TU Wien

3 Vector-based Methods for Similarity Search (TF-IDF, BM25, SBERT)

Vector similarity search is one of the fastest-growing domains in AI and machine learning. At its core, it is the process of matching relevant pieces of information together. Similarity search is a complex topic, and there are countless techniques for building effective search engines. In…
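Of the three methods in the title, BM25 is the easiest to show without a trained model. Below is a minimal Okapi BM25 sketch. It assumes whitespace tokenization, the common defaults k1 = 1.2 and b = 0.75, and the Lucene-style idf with +1 inside the logarithm. SBERT would replace these sparse term weights with dense sentence embeddings, which is not shown here.

```python
import math
from collections import Counter


def bm25_scores(query, docs, k1=1.2, b=0.75):
    """Okapi BM25 score of every document for a whitespace-tokenized query."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avgdl = sum(len(d) for d in tokenized) / n_docs
    # Document frequency: number of documents containing each term.
    df = Counter(term for doc in tokenized for term in set(doc))
    scores = []
    for doc in tokenized:
        tf = Counter(doc)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # Lucene-style idf: the +1 inside the log keeps it positive.
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            # Length normalization: longer-than-average documents get less credit per occurrence.
            denom = tf[term] + k1 * (1 - b + b * len(doc) / avgdl)
            score += idf * tf[term] * (k1 + 1) / denom
        scores.append(score)
    return scores


docs = ["the cat sat", "the dog sat on the cat", "a bird flew"]
print(bm25_scores("dog", docs))
```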

From playlist Vector Similarity Search and Faiss Course

Thought Vectors, Knowledge Graphs, and Curious Death(?) of Keyword Search

The world of information retrieval is changing. BERT, ELMo, and the Sesame Street gang are moving in, shouting the gospel of "thought vectors" as a replacement for traditional keyword search. Meanwhile, many search teams are now automatically extracting graph representations of the world, t…

From playlist Machine Learning

Neural IR, part 1 | Stanford CS224U Natural Language Understanding | Spring 2021

For more information about Stanford’s Artificial Intelligence professional and graduate programs, visit: https://stanford.io/ai To learn more about this course visit: https://online.stanford.edu/courses/cs224u-natural-language-understanding To follow along with the course schedule and s…

From playlist Stanford CS224U: Natural Language Understanding | Spring 2021

Neural IR, part 3 | Stanford CS224U Natural Language Understanding | Spring 2021

From playlist Stanford CS224U: Natural Language Understanding | Spring 2021

Introduction to Dense Text Representation - Part 3

In the third part, I present advanced applications and training methods for learning dense text representations. Topics include: multilingual text embeddings, data augmentation, unsupervised text embedding learning, and neural search. Slides: https://nils-reimers.de/talks/2021-06-Intro_Dens

From playlist Introduction to Dense Text Representation

Introduction to Neural Re-Ranking

In this lecture we look at the workflow (including training and evaluation) of neural re-ranking models and some basic neural re-ranking architectures. Slides & transcripts are available at: https://github.com/sebastian-hofstaetter/teaching 📖 Check out YouTube's CC - we added our high qua…
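The re-ranking workflow can be sketched as a two-stage pipeline. A cheap first-stage scorer ranks the whole collection. An expensive re-ranker then re-orders only the top-k candidates. A rank-based metric evaluates the result. Below is a minimal sketch under hypothetical stand-ins: term overlap plays the first stage, overlap density plays the neural re-ranker (for example, a BERT cross-encoder), and reciprocal rank is one example metric.

```python
from typing import Callable, List, Sequence, Set

Scorer = Callable[[str, str], float]


def rerank(query: str, docs: Sequence[str], first_stage: Scorer,
           reranker: Scorer, k: int = 2) -> List[int]:
    """Two-stage retrieval: the cheap scorer picks the top-k candidates from all
    documents, then the (expensive) re-ranker re-orders only those k."""
    candidates = sorted(range(len(docs)), key=lambda i: first_stage(query, docs[i]),
                        reverse=True)[:k]
    return sorted(candidates, key=lambda i: reranker(query, docs[i]), reverse=True)


def reciprocal_rank(ranking: List[int], relevant: Set[int], cutoff: int = 10) -> float:
    """1 / rank of the first relevant document within the cutoff, else 0."""
    for pos, doc_id in enumerate(ranking[:cutoff], start=1):
        if doc_id in relevant:
            return 1.0 / pos
    return 0.0


# Hypothetical stand-ins for a real first stage (e.g. BM25) and a neural re-ranker.
def overlap(q: str, d: str) -> float:
    return float(sum(d.split().count(t) for t in q.split()))


def density(q: str, d: str) -> float:
    return overlap(q, d) / len(d.split())


docs = ["cat", "cat cat dog fish", "bird"]
ranking = rerank("cat", docs, overlap, density)
print(ranking, reciprocal_rank(ranking, relevant={0}))  # [0, 1] 1.0
```

Only `k` documents ever reach the re-ranker. That is what makes an expensive neural model affordable at query time.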

From playlist Advanced Information Retrieval 2021 - TU Wien

Probabilistic model 3: parameter estimation

[http://bit.ly/BM-25] How do we estimate the parameters of the probabilistic model of IR? When we have examples of relevant and non-relevant documents (relevance feedback), estimation is straightforward: we use maximum-likelihood estimates for Bernoulli random variables (relative…
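With relevance feedback, the estimates for one term come from four counts. r is the number of relevant documents containing the term, R the number of relevant documents, n the number of documents containing the term, and N the collection size. Below is a minimal sketch of the maximum-likelihood estimates and of the resulting Robertson/Spärck Jones term weight. It uses the usual +0.5 correction, which avoids log(0) and division by zero. The feedback numbers are made up.

```python
import math


def ml_estimates(r, R, n, N):
    """Relative-frequency (maximum-likelihood) estimates for one term.
    r: relevant docs containing the term, R: relevant docs,
    n: docs containing the term, N: docs in the collection."""
    p = r / R              # P(term present | relevant)
    q = (n - r) / (N - R)  # P(term present | non-relevant)
    return p, q


def rsj_weight(r, R, n, N):
    """Term weight log[p(1-q) / (q(1-p))] with +0.5 added to each count."""
    return math.log(
        ((r + 0.5) * (N - n - R + r + 0.5)) / ((n - r + 0.5) * (R - r + 0.5))
    )


# Hypothetical feedback: 1000 docs, 10 judged relevant, term in 50 docs, 8 of them relevant.
print(ml_estimates(8, 10, 50, 1000))
print(rsj_weight(8, 10, 50, 1000))
```

With no relevance information (r = R = 0), the corrected weight reduces to log((N - n + 0.5) / (n + 0.5)), an IDF-like quantity.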

From playlist Probabilistic Model of IR

Neural IR, part 2 | Stanford CS224U Natural Language Understanding | Spring 2021

From playlist Stanford CS224U: Natural Language Understanding | Spring 2021